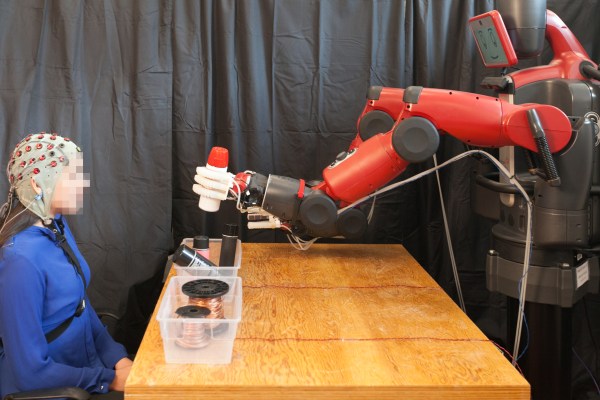

In a new video from MIT’s CSAIL lab, a smirking industrial robot from Rethink picks up spray paint cans and spools of wire, dropping each in the properly labeled bin. The robot hesitates briefly before accidentally making the wrong choice, only to self-correct and drop the can of paint where it belongs. The correction comes courtesy of an observer in an EEG cap, who simply notices that something is off.

“When you put an EEG cap on a user, it measures signals using 48 sensors,” CSAIL Director Daniela Rus tells TechCrunch. “Most of the signals are very difficult to interpret. It’s very noisy. But one signal is much more easy to detect than the others.”

The signal is known as an “error potential,” a reaction produced in the brain when an individual notices something wrong. It’s strong, it’s sudden and it’s relatively easy for the machinery to pick out from the cacophony of brain waves, making it an ideal candidate for CSAIL’s system.

“Error potential is a very natural reaction,” adds Rus. “This is quite a different paradigm to what we use today, which is asking the human to program the robot in the robot’s language. We’re trying to get the robots to adapt to the human language rather than getting the human to adapt to the robot’s language.”

The scenario depicted in the video is a simple mockup of an assembly line, in which the robot performs the primary task under the supervision of a human, who can override its movement without having to code a correction or hit a big red button.

“We want to have two-way communication,” CSAIL research scientist Stephanie Gil explains. “Being able to read the EEG signals of the human and using that as a control signal to the robot will have an effect on the robot’s choice. Whether or not the robot makes the right choice will have an effect on the human’s reactions. That’s a natural two-way communication or a conversation between humans or robots.”

In the early stage described by the team in an accompanying research paper, the interaction only handles binary distinctions (paint vs. wire). It also operates only in real time (around 10 to 30 milliseconds), but upcoming iterations could utilize a form of learning, assigning more meaning to the mistakes and correcting for them in future choices.
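
To make the binary setup concrete, here is a toy sketch in Python of how such a correction loop might look. It is not CSAIL’s implementation: the threshold-based detector, the function names and the sample amplitudes are all hypothetical stand-ins, and a real system would classify multi-channel EEG rather than average a handful of numbers.

```python
from statistics import mean

# The two bins from the demo. Because the task is binary,
# "wrong" always implies exactly one alternative.
BINS = ("paint", "wire")


def detect_error_potential(eeg_window, threshold=5.0):
    """Hypothetical detector: flag an error when the mean amplitude
    of a short EEG window exceeds a threshold (arbitrary units)."""
    return mean(eeg_window) > threshold


def final_choice(initial_choice, eeg_window, threshold=5.0):
    """Return the bin the robot ends up using after observing the human."""
    if initial_choice not in BINS:
        raise ValueError(f"unknown bin: {initial_choice!r}")
    if detect_error_potential(eeg_window, threshold):
        # Error flagged: switch to the only other option.
        return BINS[1 - BINS.index(initial_choice)]
    return initial_choice


# Observer reacts strongly, so the robot switches bins.
print(final_choice("wire", [6.0, 7.5, 8.0]))   # paint
# No strong reaction, so the robot keeps its choice.
print(final_choice("wire", [0.5, -0.2, 1.0]))  # wire
```

The sketch also shows why the binary restriction matters: with only two bins, a single “something is wrong” signal is enough to pick the right one. With three or more options, the same signal would only say which choice to rule out.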

The team is also looking into additional potential applications, including interactions for those who can’t communicate verbally, as well as other technologies that are designed to operate autonomously but still require some level of human interaction to help avoid potential hazards.

“You can imagine this being used in a place where a human is in a supervisory role, watching robots work and detecting where they make mistakes,” says CSAIL PhD candidate Joseph DelPreto, “or maybe in a self-driving car where the car can do most of the work, but the human can still be in control and let it know when it’s doing something wrong.”