Apple’s looking into two intriguing ideas in a pair of new patent applications spotted by AppleInsider today: sonar-style echolocation for passive proximity detection, and a text-to-speech engine that takes contextual cues from what it’s reading and adds personality to the computer-generated voices it employs. Either of these could result in big changes in the daily use of mobile devices.

Speaking In A Voice You Know

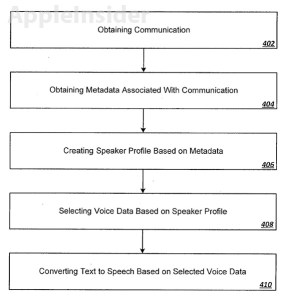

The first patent application, called “Voice assignment for text-to-speech output,” describes altering text-to-speech (TTS) profiles based on metadata gleaned from content found on a user’s phone or device. So, for instance, if it’s reading back an email from a contact it can identify as male, 25 and living in the U.K., then the voice it produces to read said email will reflect those attributes in accent and tone.
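To make the idea concrete, here is a minimal sketch of how contact metadata might be mapped to a voice profile. It is not taken from the patent application: the `Contact` and `VoiceProfile` types, the `pick_voice` function, the age bands and the region-to-accent table are all hypothetical names and values chosen for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Contact:
    """Hypothetical contact record; any field may be unknown."""
    name: str
    gender: Optional[str] = None
    age: Optional[int] = None
    region: Optional[str] = None


@dataclass(frozen=True)
class VoiceProfile:
    """Hypothetical set of TTS voice attributes."""
    gender: str
    age_band: str
    accent: str


# Fallback voice used when metadata is missing (assumed values).
DEFAULT_VOICE = VoiceProfile(gender="neutral", age_band="adult", accent="en-US")

# Assumed lookup table from a contact's region to an accent tag.
ACCENT_BY_REGION = {"UK": "en-GB", "US": "en-US"}


def age_band(age: Optional[int]) -> str:
    """Bucket an age into a coarse band; the cutoffs are arbitrary."""
    if age is None:
        return DEFAULT_VOICE.age_band
    if age < 18:
        return "young"
    if age < 60:
        return "adult"
    return "senior"


def pick_voice(contact: Contact) -> VoiceProfile:
    """Choose voice attributes from whatever metadata is available."""
    return VoiceProfile(
        gender=contact.gender or DEFAULT_VOICE.gender,
        age_band=age_band(contact.age),
        accent=ACCENT_BY_REGION.get(contact.region, DEFAULT_VOICE.accent),
    )


# The example from the article: a 25-year-old man living in the U.K.
sender = Contact(name="Sam", gender="male", age=25, region="UK")
voice = pick_voice(sender)
```

Each attribute falls back to the default on its own, so a contact with only a known region would still get a matching accent.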

The application describes using actual recorded audio from an off-site database where possible to make the reading as natural as it can be, and there’s even a provision whereby, with permission from those involved, an iPhone could record speech from contacts on phone calls and use those recordings to produce a reasonable facsimile of their voice for TTS use. That way, if you were to have Siri read you an incoming iMessage, you’d hear it in the voice of the sender.

It’s an interesting play, and one that could encourage greater adoption of TTS services. Stilted, inhuman intonation and pronunciation are frequently cited as major failings of computer-generated speech, and they hardly help promote a sense of identification between a user and their device. That kind of bond is important in driving further use of said services, which is in turn useful to Apple because it seems to want to make Siri a go-to resource for iPhone and iPad users in all areas of discovery and potentially even search.

Guided By Voices

The other patent application found today details a sound-based echolocation system that lets a device determine its distance from other objects. A mic could take in ambient sound and use it to work out the device’s position relative to nearby objects, also noting when an object gets closer or farther away. This could be used in place of an ambient light sensor to determine an iPhone’s proximity to a user’s face, for instance, and the iPhone could even send out its own audio signal or ping, when ambient sound isn’t detectable, to determine where it is relative to another surface. As we’ve seen with inventions like the jaja pressure-sensitive stylus, this noise need not be audible to the human ear to be picked up by Apple’s mobile hardware.
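The arithmetic behind the active-ping case is simple enough to sketch. The snippet below is an illustration of basic echo ranging, not the method described in the application: it assumes sound travels at roughly 343 m/s in room-temperature air, and the function names and the 5 cm "near face" threshold are made up for the example.

```python
# Approximate speed of sound in dry air at about 20 degrees C (assumed constant).
SPEED_OF_SOUND_M_PER_S = 343.0


def echo_distance_m(round_trip_s: float) -> float:
    """Distance to a surface given the time between a ping and its echo.

    The sound travels to the surface and back, so the one-way
    distance is half of speed multiplied by round-trip time.
    """
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    return SPEED_OF_SOUND_M_PER_S * round_trip_s / 2.0


def is_near_face(round_trip_s: float, threshold_m: float = 0.05) -> bool:
    """Hypothetical proximity check: is the reflecting surface within threshold_m?"""
    return echo_distance_m(round_trip_s) <= threshold_m


# An echo arriving 1 ms after the ping puts the surface about 17 cm away.
distance = echo_distance_m(0.001)
near = is_near_face(0.0002)
```

At these ranges the round trip takes well under a millisecond, so any real implementation would need precise timing between the speaker and the microphones.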

There’s a clear benefit for Apple from this tech: it potentially allows the elimination of components like the ambient light sensor it removed from the fifth-generation iPod touch. Apple SVP Phil Schiller reportedly responded to a customer email saying the part was left out of the iPod touch because its chassis is “just too thin.” Further reductions in the iPhone’s thickness could necessitate a similar move, in which case the three microphones currently found in Apple’s smartphone could prove a suitable replacement, should the tech described in this new patent application actually function effectively.