Several of Rethink’s Sawyer robots are crammed into a small corner of a lab at UC Berkeley’s Sutardja Dai Hall. The industrial robotic arms are separated by a makeshift scaffold. It’s a bit of a tight squeeze as we set up to watch the first of two demos, each taking a distinctly different approach to a similar goal: teaching robots to learn how to learn.

It’s a complex but necessary task at the heart of Assistant Professor Sergey Levine’s robotics group. Levine’s team, part of the school’s Robotic Artificial Intelligence and Learning Lab, is tasked with finding ways to help robots better interact with tasks and human counterparts in real-world settings.

“Robots are really good at essentially doing the same thing, over and over again,” Levine explains. “This is basically how robotics has been for several decades. When it comes to robots going out into unstructured environments like your home or an office or a hospital, all sorts of unpredictable things can happen. I think learning will make it crucial for robots. Then robots can learn from their experience, acquire common sense and use that to act intelligently when things happen in the real world that they didn’t quite expect.”

The team walks us through a pair of demos to showcase how it’s thinking about robot learning. The first, “Deep Visual Foresight for Planning Robot Motion,” is designed to help robots collect their own data without direct human supervision.

After all, these sorts of training exercises often require robots to execute tens of thousands of sequences or more to build a sufficient dataset. That means running a robot overnight for days on end: precisely the sort of repetitive task these industrial robots are designed to take over.

The arm is tasked with pushing objects over and over again, recording the results from a variety of camera angles. The robot collects the information but doesn’t assign any real-world value to the objects. Using “deep action-conditioned video prediction models with model-predictive control,” however, the robot is able to push objects it hasn’t encountered before, despite not being programmed to interact with that specific object.

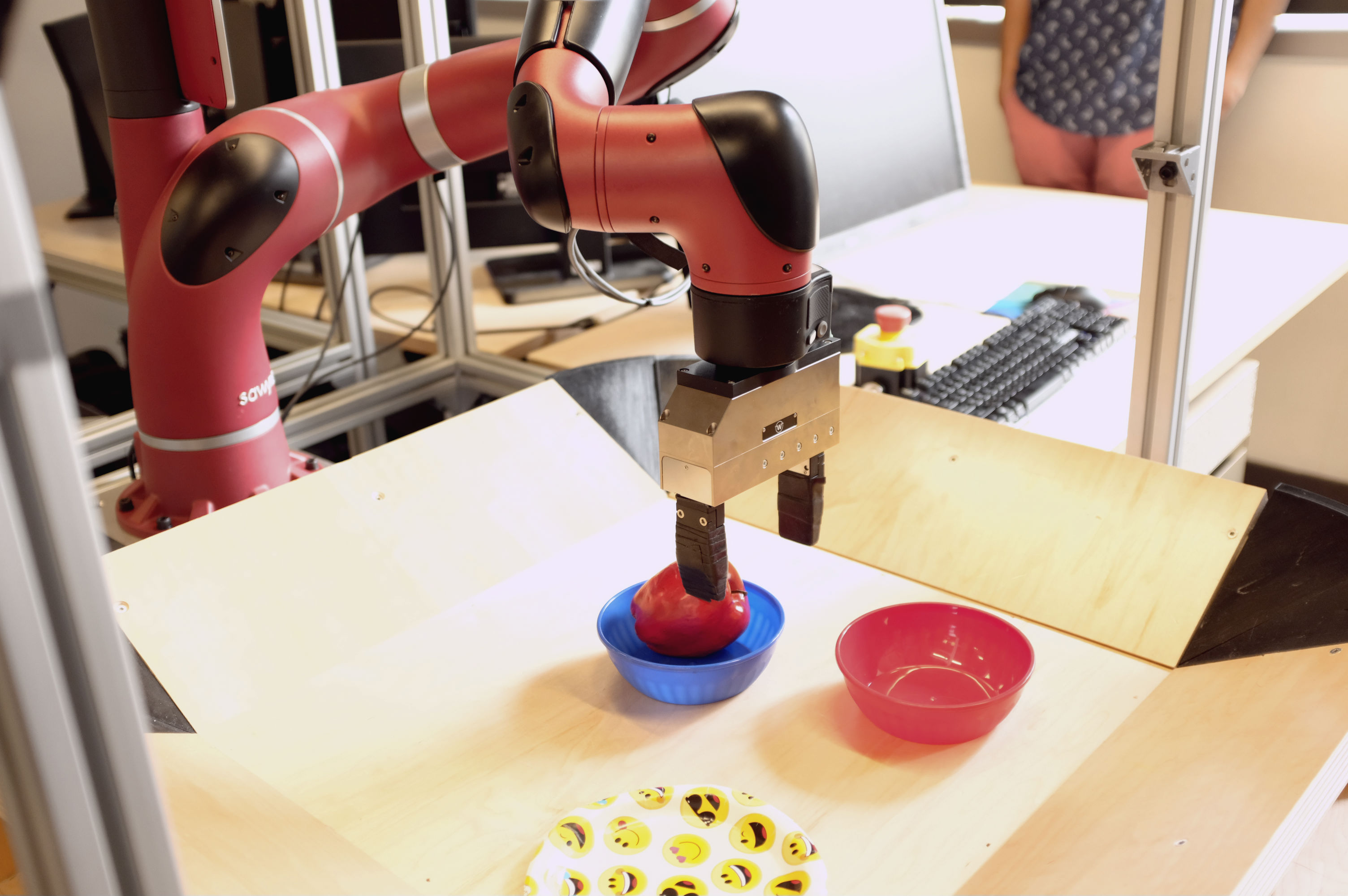

In a second demo, a robot attempts to perform a task based on watching a human doing the same thing. Researcher Chelsea Finn places a fake apple into a blue bowl for the benefit of Sawyer. She then hands the robot the apple and shuffles the bowls on the table. After a few moments, Sawyer pivots its arm above the blue bowl and slowly lowers the apple inside.

“We might have a setting where we’ve already collected a lot of data,” Levine explains. “Maybe the robot has picked up or pushed around thousands of objects. It can use that to get started, but in order for technology to be really powerful, in order for it to enable intelligent behavior in any real-world environment, then we need the robot to be able to continuously improve from its own experience.”
