By Ron Porat

As we here at L1ght help Trust & Safety teams identify and remove child sexual abuse material from social platforms, content sites and search products, we thought it would be useful to share a walkthrough of the common denominators within CSAM classifications and regulations across some key geographies.

Please note: This version of the primer has been edited down for length. Download the full free version here: l1ght.com

Background & definitions

Child pornography, also known as “Child Sexual Abuse Material”, is a type of pornography that exploits children for sexual gratification. Its production entails either the direct involvement or sexual assault of a child, or a simulation of it using actors or animation.

Over the years, governments, law enforcement agencies, academia and NGOs have attempted to create a “scale” or “classification” to guide the definition of what precisely constitutes CSAM and to differentiate between its various forms. The aim was a single, simple and clear scale by which government entities could not only define offenses but also penalize offenders according to a well-defined range of severity. Unfortunately, for a wide variety of reasons, no consensus CSAM scale has been agreed upon, and separate state and national versions have evolved in different jurisdictions.

For the purposes of this piece, we will use the acronym CSAM (Child Sexual Abuse Material).

United Kingdom

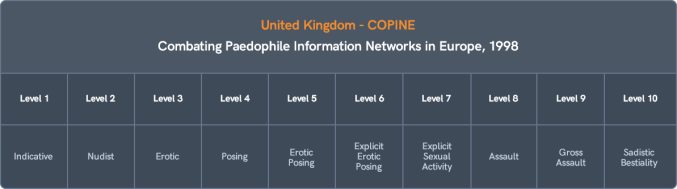

The first groundbreaking research on CSAM classification was conducted at the Department of Applied Psychology at University College Cork, Ireland. The rating system created there, as part of the COPINE Project (“Combating Paedophile Information Networks in Europe”), was primarily used in the United Kingdom to categorize the severity of images of child sexual abuse. See table below:

Image Credits: L1ght

- Level 1 – Non-erotic and non-sexualized pictures showing children wearing either underwear or swimsuits from either commercial sources or family albums; Pictures of children playing in normal settings, in which the context or organization of pictures by the collector indicates inappropriateness.

- Level 2 – Pictures of naked or semi-naked children in appropriate nudist settings, and from legitimate sources.

- Level 3 – Surreptitiously taken photographs of children in play areas or other safe environments showing either underwear or varying degrees of nakedness.

- Level 4 – Deliberately posed pictures of children fully clothed, partially clothed or naked (where the amount, context and organization suggests sexual interest).

- Level 5 – Deliberately posed pictures of fully, partially clothed or naked children in sexualized or provocative poses.

- Level 6 – Pictures emphasizing genital areas, where the child is either naked, partially clothed or fully clothed.

- Level 7 – Pictures that depict touching, mutual and self-masturbation, oral sex and intercourse by a child, not involving an adult.

- Level 8 – Pictures of children being subjected to a sexual assault, involving digital touching, involving an adult.

- Level 9 – Grossly obscene pictures of sexual assault, involving penetrative sex, masturbation or oral sex, involving an adult.

- Level 10 – (i) Pictures showing a child being tied, bound, beaten, whipped or otherwise subject to something that implies pain. (ii) Pictures where an animal is involved in some form of sexual behavior with a child.

While the COPINE classification was geared more towards therapeutic psychological purposes, the need arose for a scale by which indecent images of children could be “graded”, and that would be more useful for judicial proceedings. To this end, following the case of “Regina v. Oliver” in the England & Wales Court of Appeal, a more economical scale was created, based on COPINE terminology, known as the SAP scale:

Image Credits: L1ght

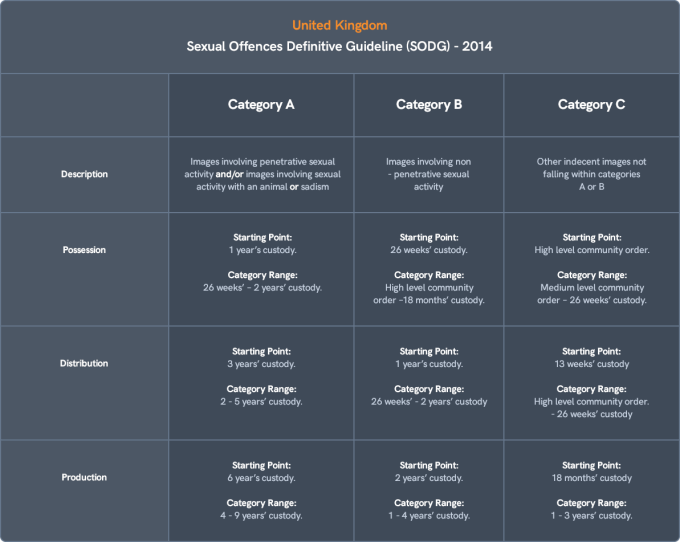

In 2013, a new “Sexual Offences Definitive Guideline” (SODG) was published. Its scale was adopted for sentencing in England & Wales in 2014 and remains to this day the “go-to” scale for evaluating CSAM in those jurisdictions.

Image Credits: L1ght

United States

Laws in the United States define child pornography as a form of child sexual exploitation consisting of any visual depiction of sexually explicit conduct involving a minor (under 18 years of age). Visual depictions include photographs, videos, digital or computer-generated images indistinguishable from an actual minor, and images that have been created, adapted or modified to appear to depict an identifiable, actual minor. Electronically stored data that can be converted into a visual image of child pornography are also deemed illegal visual depictions under federal law.

According to the Department of Justice (DOJ), the legal definition of “sexually explicit conduct” does not require that the content depict a child engaging in sexual activity; a depiction of child nudity may constitute illegal child pornography if it is sufficiently sexually suggestive. Child pornography images are not protected under the First Amendment and are illegal contraband under federal law.

Distribution and receipt of child pornography have been an area of congressional concern since 1977, when the “Protection of Children against Sexual Exploitation” Act was passed. This Act prohibits the use of children to produce pornography and sets a 10-year maximum for first-time trafficking offenders and a 15-year statutory maximum, plus a two-year mandatory minimum, for subsequent offenders.

Congress further clarified its stance against traffickers by passing the Child Protection Act in 1984, which extended penalties to producers and traffickers of child pornography. In 1986, in response to the “Meese Report”, Congress enacted the “Child Sexual Abuse and Pornography” Act of 1986 and the “Child Abuse Victims’ Rights” Act of 1986. The latter provided civil remedies for victims and increased the mandatory minimum penalty for repeat child pornography offenders from 2 to 5 years of imprisonment.

Currently, under federal law, statutory minimums range from 5 to 20 years, rising to life imprisonment if the offender has prior convictions for child sexual exploitation, or if the images are violent and the child was sexually abused.

While the possession, creation, receipt and distribution of child pornography are illegal under federal law and under state law in all 50 states, cases can become complicated due to the variation in state laws. States vary as to whether first possession offenses are considered felonies or misdemeanors. Federal jurisdiction, however, almost always applies when a child pornography violation occurs using the internet.

Sentences for federal CSAM convictions range as follows:

Image Credits: L1ght

Canada

Under Canadian law, within the provisions on “Offences Tending to Corrupt Morals” (1985), material is defined as child pornography if it falls into one of the following four categories:

- A photographic, film, video or other visual representation, whether or not it was made by electronic or mechanical means,

  - that shows a person who is or is depicted as being under the age of eighteen years and is engaged in or is depicted as engaged in explicit sexual activity, or

  - whose dominant characteristic is the depiction, for a sexual purpose, of a sexual organ or the anal region of a person under the age of eighteen years.

- Any written material, visual representation or audio recording that advocates or counsels sexual activity with a person under the age of eighteen years.

- Any written material whose dominant characteristic is the description, for a sexual purpose, of sexual activity with a person under the age of eighteen years.

- Any audio recording that has as its dominant characteristic the description, presentation or representation, for a sexual purpose, of sexual activity with a person under the age of eighteen years.

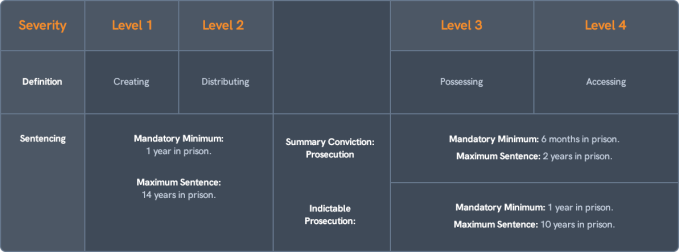

According to the above legislation, there is a clear distinction between four types of child pornography offenses: (i) Creation, (ii) Distribution, (iii) Possession and (iv) Accessing. If a court finds that a child pornography crime was committed with intent to make a profit, this will be considered an “aggravating factor” in determining the severity of the sentence an offender should receive.

The law also specifies circumstances under which possession of CSAM is not a crime. One such exception applies if the defendant believed that the person depicted in the material was 18 years of age or over, provided the defendant “took all reasonable steps to ascertain the age of the person”. Possession of child pornography will also not be illegal if it is possessed for “a legitimate purpose related to the administration of justice or to science, medicine, education or art” and “does not pose an undue risk of harm to persons under the age of eighteen years.”

Mandatory minimum jail sentences are prescribed for all CSAM crimes, with no discretion given to judges to issue shorter sentences. The mandatory minimums have increased over the last decade and are currently as follows:

Image Credits: L1ght

Technologies used to classify CSAM

Even in an era dominated by technology, CSAM identification is still largely performed manually by people. This not only makes it impossible to react in “real time” but also places a heavy psychological burden on those exposed to such toxic material.

Currently, there are two major technological approaches to identifying CSAM. The first is based on fingerprinting known files (known as “Hashing”). The second employs image analysis and machine learning.

Hashing

Hashing allows CSAM media files to be “fingerprinted” through this process:

- A media file is flagged as containing CSAM.

- It is assessed by human moderators.

- If it is determined to be CSAM, it is sent to a mathematical “Hashing” algorithm that transforms the file into a string of letters and numbers (e.g. b31d032cfdcf47a399990a71e43c5d2a).

- The string is stored in a database that is shared with third parties.

One of the best-known hashing technologies for CSAM images is offered by Microsoft as “PhotoDNA”.

The two disadvantages of this method are that 1) new CSAM (including known files that have been even slightly altered) will not be identified, and 2) it requires human moderation to determine the existence of CSAM before the Hashing process itself can be triggered.
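The fingerprint-and-lookup flow described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how PhotoDNA or any production system works: it uses SHA-256 from Python's standard `hashlib` module as the hashing algorithm, the `HashDatabase` class is a hypothetical in-memory stand-in for a shared database, and the byte strings are harmless placeholders for the contents of a media file.

```python
import hashlib


def fingerprint(data: bytes) -> str:
    """Return the SHA-256 hex digest of a file's raw bytes."""
    return hashlib.sha256(data).hexdigest()


class HashDatabase:
    """Hypothetical in-memory stand-in for a shared hash database."""

    def __init__(self):
        self._known = set()

    def add_confirmed(self, data: bytes) -> str:
        """Store the fingerprint of a file that moderators have confirmed."""
        digest = fingerprint(data)
        self._known.add(digest)
        return digest

    def is_known(self, data: bytes) -> bool:
        """Check whether a file's fingerprint is already in the database."""
        return fingerprint(data) in self._known


# Placeholder bytes stand in for the contents of a flagged, confirmed file.
confirmed_file = b"placeholder-media-bytes"
db = HashDatabase()
db.add_confirmed(confirmed_file)

exact_copy = b"placeholder-media-bytes"
altered_copy = b"placeholder-media-bytes."  # one extra byte appended

print(db.is_known(exact_copy))    # True
print(db.is_known(altered_copy))  # False
```

Because an exact-match hash changes completely when a single byte changes, the altered copy is not recognized, which illustrates the first disadvantage noted above. Perceptual hashing schemes are designed to tolerate some alterations and are not shown here.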

Machine Learning

With Machine Learning (the method that we at L1ght develop), Computer Vision algorithms are trained to determine if an image contains CSAM. This is done by “feeding” the algorithm with images that have already been determined to contain CSAM.

These machine learning models simulate the way in which a human being thinks and works.

The benefits of this method are that 1) it dramatically increases the ability to identify known and unknown CSAM at scale, and 2) it reduces the psychological impact on human moderators.
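The idea of “feeding” an algorithm with already-labeled examples can be illustrated with a toy binary classifier. This is a hedged sketch, not L1ght's method: it trains a small logistic-regression model in plain Python, and the two-number feature vectors are synthetic stand-ins (an assumption for illustration) for the features a real computer vision model would extract from images. The function names `train` and `predict` are hypothetical.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train(features, labels, epochs=200, lr=0.5):
    """Fit logistic-regression weights with stochastic gradient descent."""
    weights = [0.0] * len(features[0])
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(features, labels):
            pred = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)
            error = pred - y  # gradient of the log loss w.r.t. the score
            weights = [w - lr * error * xi for w, xi in zip(weights, x)]
            bias -= lr * error
    return weights, bias


def predict(weights, bias, x):
    """Return True if the model assigns x to the positive (flagged) class."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias) >= 0.5


random.seed(0)
# Synthetic feature vectors: label 0 clusters near (-1, -1), label 1 near (1, 1).
negatives = [[random.gauss(-1.0, 0.3), random.gauss(-1.0, 0.3)] for _ in range(50)]
positives = [[random.gauss(1.0, 0.3), random.gauss(1.0, 0.3)] for _ in range(50)]
features = negatives + positives
labels = [0] * 50 + [1] * 50

weights, bias = train(features, labels)

# Previously unseen vectors are classified without a stored fingerprint.
print(predict(weights, bias, [1.1, 0.9]))    # True
print(predict(weights, bias, [-0.9, -1.2]))  # False
```

Unlike a hash lookup, the trained model generalizes to inputs it has never seen, which is the property behind the first benefit listed above.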