By Jason Richards, CEO | Daxbot

For the past few decades, robots have been confined to the factory floor. Robotic arms, concealed in big industrial buildings, weld cars, inspect items on conveyor belts, and build complicated things. All of this is well hidden behind closed doors, and for good reason: industrial robots are bulky, limited, and sometimes dangerous. In other words, not for the neighborhood.

As robot tech and AI have advanced, robots have exploded into pedestrian spaces. More efficient batteries, the ability to run powerful neural nets in real time, and an abundant supply of commodity sensors and chips allow robots to go further and do more. For many of us, robots have suddenly come to Main Street. If Marty McFly from Back to the Future Part II had arrived just a little later, he would have found the future even more… futuristic. And predictably, this future has arrived with its own conundrum: How will we treat these everyday robots?

I have a lot of experience with robot acceptance and safety, as I work with a Pedestrian Space Robot (PSR) named Dax that has been six years in the making. Much of the inspiration for Dax came from science fiction movies in which robots and humans interact and work together. Take C-3PO from Star Wars: he has a distinct personality, constantly worried and often unsure of himself. These qualities, though arguably unnecessary for a droid, make him more than just a tool. They make him a “who” instead of a “what”. Dax was designed with that same idea in mind.

I know what you’re thinking: “I’ve seen this before, in 1998, and it was called a Furby.” But before you let some well-aimed cynicism steal your joy, consider two points. First, Furbies are essentially pointless communicators; that is, they have no real needs or practical objectives. Second, that was 1998. We have come a long way since then.

“Function follows form: design as a way of creating meaning and comprehensibility in a world of over-functional chaos.”

Fast forward to 2015, when Dax was first being designed. The challenge was to give Dax the ability to communicate his intentions to strangers with enough emotion that he could take on his own personality (become a ‘who’), while keeping him from becoming so humanlike that he was unnerving to be around. In pursuit of this, Dax was developed to be more like a pet than a human, leading the Dax engineers to coin the word ‘canimorphic’ (from ‘canine’ and ‘anthropomorphic’). Dax was given a head and neck that could move, two eyes, and the ability to beep but not to speak.

This ability to interact like a “who” and not a “what” was a grand experiment to see whether a robot could be accepted on the sidewalk. As we watched Dax begin to interact with cars and people, we could immediately see his impact on the community’s sense of psychological safety. For example, Dax would wait on a street corner and look both ways for traffic. This put drivers at ease, since his body language clearly indicated that he was not about to dart out into traffic. You could sense this comfort in the way they drove: they looked at him but treated him no differently than any other pedestrian.

Psychological safety, the uncanny valley, and motor resonance

It seems obvious that humans need a certain level of psychological safety in their community to thrive, and technologies that undermine it have been known to generate severe pushback.

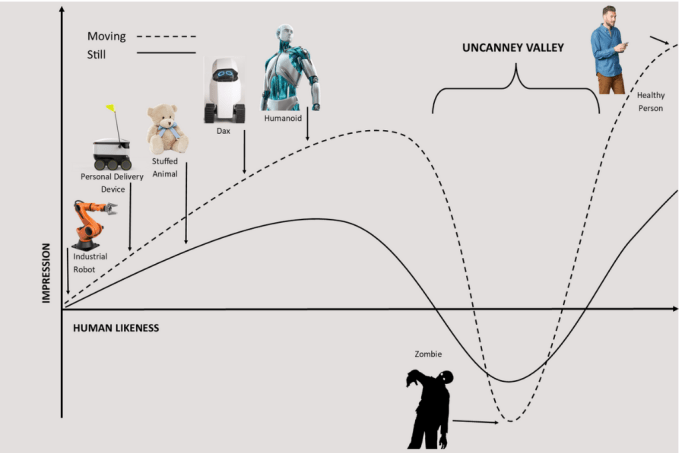

There has been much discussion around the uncanny valley hypothesis which was formulated by robotics professor Masahiro Mori in 1970. This hypothesis addresses the voyage from a “what” to a “who”, with the idea that up to a certain point we have a higher acceptance of things we relate to as a “who”, but past that point created things can become unnerving.

Image Credits: Daxbot (opens in a new window)

According to research, when a human judges a situation to be unpredictable, stress levels rise. Making robots more predictable is therefore an important part of their acceptance in urban spaces. Motion is also tied to emotion: the way we move in a given situation reflects how we feel. A phenomenon called “motor resonance”, in which observing a movement engages our own motor system as if we were making it, suggests that a robot that moves like us is easier for humans to relate to and understand. We used this principle in Dax.

A voice in society…?

Although Saudi Arabia did grant citizenship to a robot in 2017, for the most part no one is seriously proposing that robots be given suffrage. But since a future of everyday robot service looks more and more likely, it would be smart for us to start forming ideas about what that society should look like. We should prepare our minds and spaces for things that look, act, and feel a lot more like the robots we love in science fiction and a lot less like smart toasters. Rather than suffrage, tolerance is closer to the word we may be looking for.

Here in the United States, several states and cities have started to draft laws for what they call Personal Delivery Devices (PDDs). PDDs are small robots designed specifically for delivery, in the same way that a dishwasher is a robot designed to wash dishes. These robots, like Amazon Scout (no offense!), usually take the shape of small car-like vehicles that operate on sidewalks.

“[R]obots, once deployed, won’t be judged solely on how well they do their jobs… Humans, universally, think of robots as social actors… If machines are to co-exist with us, they need to ‘understand’ some basic social rules and be programmed in a way that takes human attributions into account.”

The problem is that these laws focus on vehicle-like devices rather than on humanoid robots. The rules may make sense for a small car-like vehicle on a sidewalk (a “PDD”), but they don’t make sense for a “real” robot (yes, like Dax).

Robots should speak for themselves

We are at a critical point in the technological evolution of urban robots. Robotics companies seeking to move into pedestrian spaces should set a high standard for human acceptance.

Engineers are incredibly brilliant people, but like all people, they have their flaws. For many engineers, function comes before form, or at least before society, as the thorny reception of rental scooters in many places shows. If we don’t set a higher standard, we risk making things that annoy and scare people. In a democracy, that means those things will start getting banned, as has already happened in San Francisco.

We need robots that elevate the human experience: robots, like Dax, that belong in our human spaces and behave the way we and our pets behave. Designing robots for psychological safety and acceptance is our pathway forward.

Robots should be able to speak for themselves, and they don’t even have to use words.