Earlier this month, TechCrunch held its inaugural Mobility Sessions event, where leading mobility-focused auto companies, startups, executives and thought leaders joined us to discuss all things autonomous vehicle technology, micromobility and electric vehicles.

Extra Crunch is offering members access to full transcripts of key panels and conversations from the event, such as Megan Rose Dickey’s chat with Voyage CEO and co-founder Oliver Cameron and Uber’s prediction team lead Clark Haynes on the ethical considerations for autonomous vehicles.

Megan, Oliver and Clark talk through how companies should be thinking about ethics when building out the self-driving ecosystem, while also diving into the technical aspects of actually building an ethical transportation product. The panelists also discuss how their respective organizations handle ethics, representation and access internally, and how their approaches have benefited their offerings.

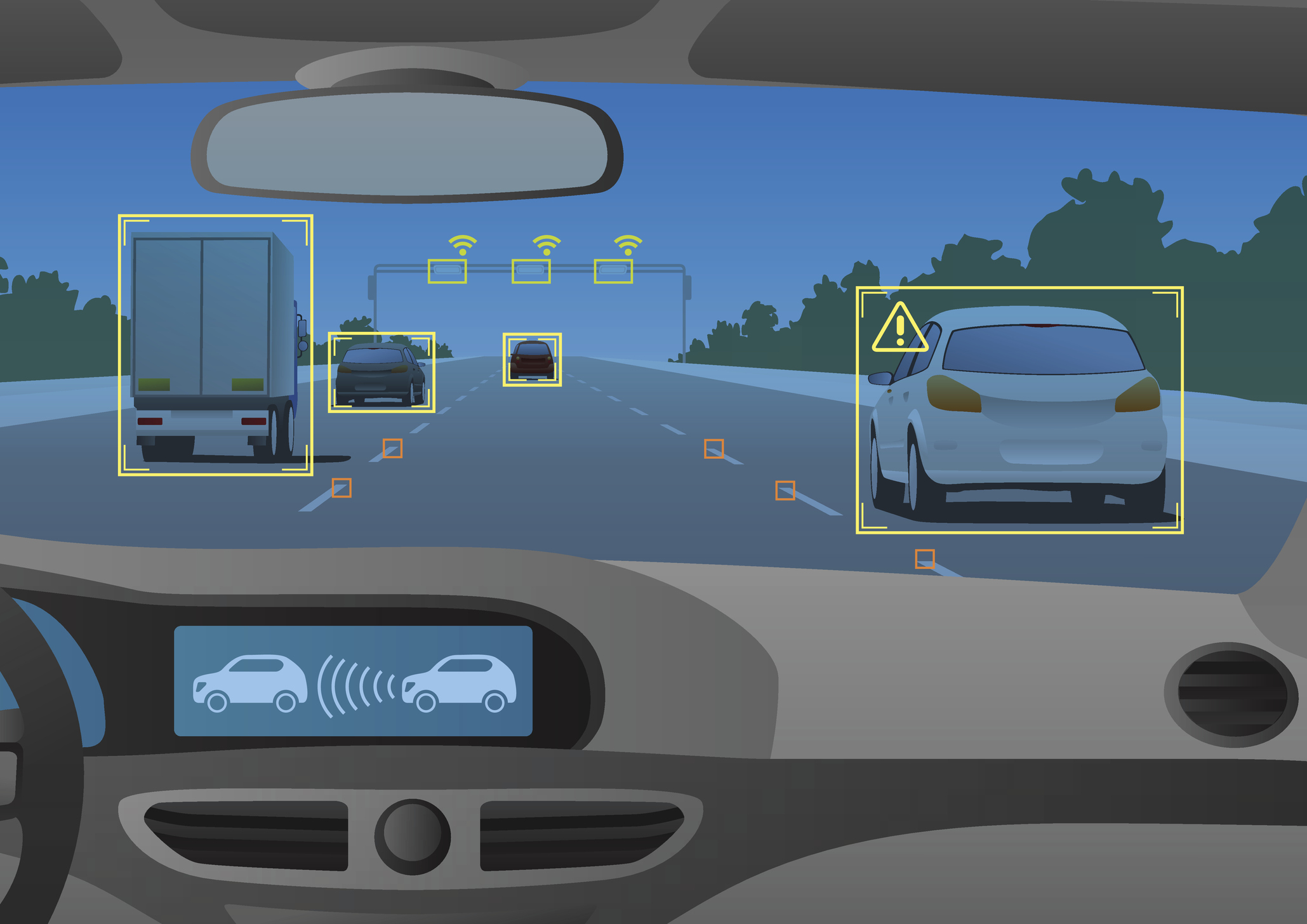

Megan Rose Dickey: Ready to talk some ethics?

Oliver Cameron: Born ready.

Clark Haynes: Absolutely.

Rose Dickey: I’m here with Oliver Cameron of Voyage, a self-driving car company that operates in communities, like retirement communities, for example. And with Clark Haynes of Uber, he’s on the prediction team for autonomous vehicles.

So some of you in the audience may remember, it was last October, MIT came out with something called the Moral Machine. And it essentially laid out 13 different scenarios involving self-driving cars where essentially someone had to die. It was either the old person or the young person, the black person or the white person, three people versus one person. I’m sure you guys saw that, too.

So why is that not exactly the right way to be thinking about self-driving cars and ethics?

Haynes: This is the often-overused trolley problem of, “You can only do A or B, choose one.” The big thing there is that if you’re actually faced with that as the hardest problem that you’re doing right now, you’ve already failed.

You should have been working harder to make certain you never ended up in a situation where you’re just choosing A or B. You should actually have been, a long time ago, looking at A, B, C, D, E, F, G, and like thinking through all possible outcomes as far as what your self-driving car could do, in low probability outcomes that might be happening.

Rose Dickey: Oliver, I remember actually, it was maybe a few months ago, you tweeted something about the trolley problem and how much you hate it.

Cameron: I think it’s one of those questions that doesn’t have an ideal answer today, because no one’s got self-driving cars deployed to tens of thousands of people experiencing these sorts of issues on the road. If we did an experiment, how many people here have ever faced that conundrum? Where they have to choose between a mother pushing a stroller with a child and a regular, normal person that’s just crossing the road?

Rose Dickey: We could have a quick show of hands. Has anyone been in that situation?

Haynes: I see no one. Yeah, that’s what we thought. See? Yes.

Rose Dickey: But I think what that question is essentially getting at is, “Okay, well, how are these self-driving cars making decisions?” What’s leading them to decide that one object, to prioritize perhaps one object over the other? And, Clark, you actually wrote a whole patent on this. So how do you think about prioritizing certain objects over others?

Haynes: I think it’s a really interesting problem. So we as human drivers, we’re naturally what’s called foveate, our eyes go forward, we have some mirrors that help us get some situational awareness. Self-driving cars don’t have that problem.

Self-driving cars are designed with 360-degree sensors, they can see everything around them. But the interesting problem is not everything around you is important. And so you need to be thinking through what are the things, the people, the actors in the world that you might be interacting with, and really, really think through possible outcomes there.

I work on the prediction problem of what’s everyone doing? Certainly, you need to know that someone behind you is moving in a certain way in a certain direction. But maybe that thing, that you’re not really certain what it is up in front of you, that’s the thing where you need to be rolling out 10, 20 different scenarios of what might happen and make certain that you can kind of hedge your bets against all of those.

Rose Dickey: How many different inputs of potential objects or actors in the scene are there? Obviously, I can think of a human, a dog, a cat, a trolley, or anything like that. How many inputs are there that are going into that algorithm to then do that processing and prediction?

Haynes: Right, there’s a lot of understanding of what things in the world are. So you want to understand what a vehicle is, what a person is, what a bicyclist is, other things that are moving that you absolutely need to avoid.

You also need to understand what are the fire hydrants and the garbage cans and all that. I think one of the interesting things that we’ve really learned is this isn’t what would be called a binary classification. It’s not just it is this, it is not this. It’s more about what’s the chance that it might be this? And how are you going to act based upon that chance?
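To make the contrast between binary classification and acting on a chance concrete, here is a minimal sketch. The class names, the probabilities and the 5% caution threshold are all hypothetical and are not taken from Uber's or Voyage's systems.

```python
# Illustrative only: every label, probability and threshold here is an assumption.

VULNERABLE = ("person", "bicyclist")


def should_yield(class_probs, caution_threshold=0.05):
    """Yield when the combined chance of a vulnerable road user is not negligible."""
    p_vulnerable = sum(class_probs.get(label, 0.0) for label in VULNERABLE)
    return p_vulnerable >= caution_threshold


# A detection that is most likely a garbage can but might be a person.
detection = {"person": 0.10, "garbage_can": 0.85, "fire_hydrant": 0.05}

# A binary view keeps only the top label and discards the 10% chance of a person.
binary_label = max(detection, key=detection.get)

print(binary_label)             # garbage_can
print(should_yield(detection))  # True
```

The top label alone would treat the object as a garbage can, while the probability-based rule still slows the car for the 10% chance that it is a person.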

Rose Dickey: Oliver, how do you think about that? Just in terms of more specifically, how do you determine which inputs kind of go into it? And then how do you make sure that you’ve thought of all of them?

Cameron: For sure, I think the truth is you’ll never think of all the inputs, right? The world is crazy, the world is weird, it throws stuff at you that you would never reasonably expect. I think the most important classification of an object is unknown, right?

If you see something in the world, and you just don’t know what it is, just the very fact that you can detect that unknown object and be able to come to a safe stop, or whatever you need to do is crucial. And I think that goes into just the differences in approaches that you see in self-driving technology.

If your sensor stack or your technology approach enables you to be able to know that something’s there, but it’s unknown in its class, that, to me, is a much safer approach than just relying upon a whole bunch of different classifications of what you think the world will throw you.

Rose Dickey: Clark, it seems like you’re in agreement?

Haynes: We’re essentially saying the same thing. And unknown also means it could be one of many things, and you need to think that through.

Rose Dickey: And so how do you think that through? In all the testing scenarios, you’re probably coming across some unknown, so then once you see it you can put it as simple as that?

Haynes: Yeah, I would say this is true across the industry. Machine learning is the way of the game. So you build these into what you expect your machine learning models to be able to do. And there’s a huge realm of research on how you make these machines or models, iterate on them, make certain that they are what is called calibrated in the probabilities that they put out.

So that if you say 10% chance that this is a person, that’s accurate. So that’s how we build that into the system. And I think that’s pretty true across the whole industry of moving to machine learning for really doing that.
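One common way to check the kind of calibration Haynes describes is to group predictions by confidence and compare each group's average predicted probability with how often the prediction turned out to be true. The sketch below does that with equal-width bins and a weighted gap often called expected calibration error. The function names and the toy data are assumptions for illustration, not anything either company has described.

```python
def calibration_table(probs, labels, n_bins=10):
    """Return (mean predicted probability, observed rate, count) per non-empty bin."""
    bins = [[] for _ in range(n_bins)]
    for p, y in zip(probs, labels):
        idx = min(int(p * n_bins), n_bins - 1)  # p == 1.0 falls in the last bin
        bins[idx].append((p, y))
    rows = []
    for members in bins:
        if members:
            mean_p = sum(p for p, _ in members) / len(members)
            observed = sum(y for _, y in members) / len(members)
            rows.append((mean_p, observed, len(members)))
    return rows


def expected_calibration_error(probs, labels, n_bins=10):
    """Average gap between confidence and observed rate, weighted by bin size."""
    total = len(probs)
    return sum(
        count / total * abs(mean_p - observed)
        for mean_p, observed, count in calibration_table(probs, labels, n_bins)
    )


# Ten detections scored "10% chance this is a person" (toy data).
scores = [0.10] * 10
well_calibrated = [1] + [0] * 9    # exactly one in ten was a person
poorly_calibrated = [1] * 5 + [0] * 5  # half were people, so 10% was far too low

print(round(expected_calibration_error(scores, well_calibrated), 3))    # 0.0
print(round(expected_calibration_error(scores, poorly_calibrated), 3))  # 0.4
```

In the second case the model says 10% but the true rate is 50%, so a planner relying on that number would underestimate the risk.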

Rose Dickey: I came across this study from the Georgia Institute of Technology around autonomous vehicles. And the caveat is that it wasn’t using real data from the likes of Uber or Voyage or any other self-driving companies, which is definitely worth pointing out.

But what it found was that some self-driving vehicles may not be as good at detecting people of color as they are at detecting people with lighter skin.

And I think that just gets to this question of how can you be sure that your machines are actually recognizing people of all different colors and sizes and heights and weights? And whether they’re walking or maybe in a wheelchair. How do you solve for that?

Haynes: Yeah, I think the first thing to point out there is that there’s no sensor or perception system that is trying to say this is a certain person of this gender, race, creed, culture. People are people, that’s what these systems are being engineered to do.

And I think the critical thing is that you have to look at your data and see if you are subject to those problems. And that’s where, of course, if you have a data set that you’re training a machine learning system on and it is over-represented in one area and underrepresented in the other, that’s a huge pitfall of machine learning. It can marginalize out those smaller sets.

And that’s where you absolutely have to dig into it, understand it and ensure that isn’t a problem. That being said, our sensors and our algorithms are designed to find people. It’s not trying to find one certain type of person. And that’s where the testing is so important. And then also back to that point of you need to understand these low probability outcomes.

Regardless of race, gender, creed, you should still have some sense of “that’s a person,” and maybe there might be times where you’re not 100% sure. But you need to be guarding your system and hedging against the possibility, even down to very low probabilities, that it is a person and they just look different than what you’re used to.

Rose Dickey: I believe it was last year, an Uber self-driving car, unfortunately, struck and killed someone walking through the street. What are some of the learnings that your team has taken away from that? And then what have you done to try to make sure that doesn’t happen again?

Haynes: I would say we’ve done a complete overhaul of how we go about everything we do. And we shared this all with the industry. So we did an internal safety review, as well as an external review where we brought in experts like, I believe a former head of the NTSB, to help us evaluate what is the process by which we should be developing and testing these self-driving systems.

And the outcome was just a laundry list of not any one specific thing where it was like, “Oh, yeah, you just need to fix this and you’ll be safe again.” It was more a full list of ways to improve. We’ve shared that list with the industry and we’ve completely rethought how we go about testing.

One of the main things we do now is extremely structured testing on the track, where we’ve taken everything we learned from millions of miles on the road. And we exhaustively run these really hard situations in simulation and in real life, and really have a very strict gate of what is safe enough.

Rose Dickey: Oliver, going back to the Georgia Institute of Technology study, how do you think about ensuring that your algorithms aren’t biased and don’t miss out on certain people?

Cameron: I’d say that Silicon Valley has a history of sucking when it comes to generating data sets that are diverse. If you go and you try and build a self-driving car for Palo Alto, your data set that you generate from driving in Palo Alto probably is not going to be diverse, right?

So it’s imperative that if you’re trying to build a safe, awesome self-driving car that you can collect data from a whole abundance of different locations around the country in which to feed into your data set to make your models more generalizable.

That’s the first thing. The second thing is that I very much believe, in a similar way to the airline industry, you need to have redundancy across everything that you do. You cannot assume that any piece of software is absolutely bulletproof and will, all of the time, capture any and every object in and around you.

So we have what we call layered perception, which effectively has, at the very top layer, a really awesome deep learning model that’s able to detect objects using cameras, radars, lidars. It has, unfortunately, one flaw. That flaw, as Clark mentioned, is that if your data set doesn’t have an object, then it can’t detect the feature.

So if an elephant runs across the road, we’re not going to detect that because we don’t have elephants in our data set. So for us, we assume that that primary layer will have some issue. And then we have numerous other perception layers using fundamentally different approaches, which aren’t as reliant on data sets, to be able to capture any and all objects in and around us.

Rose Dickey: So maybe one of those other layers would catch the elephant.

Cameron: Exactly. That second or third layer is not a machine-learned model. It’s an entirely different approach, which doesn’t have the same deficiencies as a machine learning model.
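The redundancy idea can be sketched as two independent layers whose outputs are merged. Cameron does not say what Voyage's non-learned layers actually do, so the geometric corridor check below is purely an assumed stand-in, as are the class list, the distances and every name in the code.

```python
from dataclasses import dataclass

# Hypothetical set of classes the learned detector was trained on.
KNOWN_CLASSES = {"person", "vehicle", "bicyclist"}


@dataclass(frozen=True)
class Obstacle:
    label: str
    x: float  # meters ahead of the car
    y: float  # meters left (+) or right (-) of the car's centerline


def learned_layer(scene):
    """Stand-in for a learned detector: it only reports classes it was trained on."""
    return [Obstacle(kind, x, y) for kind, x, y in scene if kind in KNOWN_CLASSES]


def geometric_layer(scene, corridor_half_width=1.5, max_range=30.0):
    """Class-agnostic check: anything occupying the driving corridor is an obstacle.

    It ignores what the thing is and looks only at where it is.
    """
    return [
        Obstacle("unknown", x, y)
        for _, x, y in scene
        if 0.0 <= x <= max_range and abs(y) <= corridor_half_width
    ]


def fuse(scene):
    """Keep learned detections and add geometric hits the learned layer missed."""
    detections = learned_layer(scene)
    seen = {(o.x, o.y) for o in detections}
    detections += [o for o in geometric_layer(scene) if (o.x, o.y) not in seen]
    return detections


# Each tuple is (what the object really is, x, y); only learned_layer uses the name.
scene = [("person", 12.0, 0.5), ("elephant", 20.0, -0.3), ("vehicle", 40.0, 3.5)]

for obstacle in fuse(scene):
    print(obstacle.label, obstacle.x)
```

The elephant is absent from the hypothetical training classes, so the learned layer skips it, but the corridor check still reports an unknown obstacle 20 meters ahead.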

Rose Dickey: But when you’re driving these things can happen so fast. How quickly are those other layers then getting activated?

Cameron: Super-fast. So humans are actually pretty good at perception in terms of latency from our brains, it’s pretty good. But machines are very good too. So for us, because our first product is a truly driverless car at zero to 25, we have some buffer in our latency, from sensor reading to outputting something intelligent for the car to do.

We think about that budget in the 90 to 100-millisecond mark. And of course, all of that cannot be perception, it has to be a subset because you’ve got other things that you’re doing, like prediction, etc. For us, our entire perception stack runs in about 30 to 35 milliseconds. So that’s from sensor scan, cameras, radars fed into our perception system, through the different lenses and our outcomes are all of these detected objects. It’s pretty fast.
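The arithmetic behind that budget is easy to check. The sketch below uses the figures Cameron quotes (a 90 to 100 millisecond total, 30 to 35 milliseconds for perception) and assumes that "zero to 25" means miles per hour, which the transcript does not state. The function names are illustrative.

```python
MPH_TO_MPS = 0.44704  # 1 mile per hour in meters per second (exact by definition)


def remaining_budget_ms(total_ms, perception_ms):
    """Time left for prediction, planning and everything else after perception."""
    return total_ms - perception_ms


def distance_travelled_m(speed_mph, latency_ms):
    """Distance the car covers while the software is still computing."""
    return speed_mph * MPH_TO_MPS * latency_ms / 1000.0


# Tightest case: smallest total budget, slowest perception run.
print(remaining_budget_ms(90, 35))   # 55
# Loosest case: largest total budget, fastest perception run.
print(remaining_budget_ms(100, 30))  # 70

# Assumed top speed of 25 mph over the full 100 ms budget.
print(round(distance_travelled_m(25, 100), 2))  # 1.12
```

Under those assumptions, perception leaves roughly 55 to 70 milliseconds for the rest of the stack, and the car moves a little over a meter during one full cycle at top speed.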

Rose Dickey: We’ve definitely seen a lot of stories around algorithmic bias. And in the news over the last couple of years, whether it’s regarding Google or Facebook, really any of the big tech companies have grappled with these issues.

And then people say that a lot of these issues could be or maybe would have been caught if the engineering team was actually truly diverse. So how diverse are your respective engineering teams, specific to prediction and self-driving?

Haynes: We are a large team at Uber ATG. And this is one area where I think we are doing very well, but we’re always working really, really hard to increase our diversity. Two things I’ll call out: We have the Uber ATG Research and Development Office in Toronto, that’s entirely female-led. Our chief scientist, the engineering leader at that office, and the program manager at that office are all women.

And the important thing to note is that they’re not in that job because they’re women. They’re in that job because they’re awesome at what they do, and they just happen to be women. So I think that’s one example.

Another just within my team. I look at Oliver and myself, and we’re old white males. We could have improved on that side. That being said, the team that I work with, the people I work with every day are very different than myself. I work with some amazing engineers who come from different races, cultures, creeds, and gender.

And I’ve learned a ton as a manager of these people, from working with them, learning differences in opinion or things like, did you know that thumbs up in Persian cultures is actually rather offensive? Did you know that the emoji that you just shared on Slack might have had a completely different outcome from what you intended? There are conversations like that.

But again, we have to hire people who are just great at what they do. And it’s just a side effect that one of the best people on my team is a woman, I don’t care that it’s a man or a woman. It’s an awesome engineer, and I love working with that person.

Cameron: So from my perspective, the Voyage team is not diverse enough. It’s something that we continuously work to improve. But it’s not, today, something that I would put up as a shining beacon of amazingness. We are working on that and definitely need to improve.

I will say that one of the things we’ve embraced from day one, and I would say we are definitely leading on, is being accepting of different backgrounds. We assume that there are great minds all over the world and we don’t just look at previous educational history, PhDs and such to make our assessment as to whether someone’s good.

We’re very accepting of all folks that have been through numerous different avenues in life to get to where they are. So I’m really proud of that. But from a diversity perspective, it’s something we’re definitely working on.

Rose Dickey: Have there been any instances where a problem has come up and you thought, “Oh, maybe if we had had a person of color looking at this, we might have caught this earlier?”

Cameron: It’s a good question. Likely so. I can’t think of a specific example. And we do have folks of color on our team. But I can’t think of an example. I’m curious if you can.

Haynes: There are disagreements in a workplace, you have different people. And I won’t go into any detail, but there was a situation where one thing meant something to one person, based upon their upbringing and their culture, and meant something very different to someone else, and it didn’t have an impact on the tech itself or cause a problem in the tech.

It’s more about how do diverse people work together and come to understand their differences and celebrate the differences. And I think that’s really, really important in a diverse workforce.

Rose Dickey: So I kind of want to switch gears a little bit, still though in the realm of ethics. But just on Universal Basic Transportation and making sure that autonomous cars are not just for the able-bodied or for those in affluent neighborhoods.

We’ll start with you, Oliver. You’re in retirement communities. So that’s definitely a pretty specific place. But I’m thinking about people with disabilities, people in wheelchairs, people with blindness. How do you ensure that they can also experience these vehicles?

Cameron: Definitely. So that’s what we’ve been about for nearly three years now. The beginning of Voyage was very much informed by finding a customer first and working backwards to figure out what technology those folks needed.

I think all too often in self-driving cars, you start with this technology that’s crazy cool and talk about deep learning and algorithms and lidars, etc. And then it’s, “We’ll figure out the customer in the future,” right? “We’ll figure out who needs this because it’ll be a big market.”

We’ve always been focused on the fact that driverless cars need to have a defined customer and we’re going to go after that customer with a great product that they love. So that’s what we’ve been about for a while. And the worst thing that I think we could do with those customers is assume that we know best, because the truth is we’re, as Clark said, two dudes in Silicon Valley. We do not know best, I guarantee you that.

So we make sure that we talk to these folks, that we get accurate real feedback that’s candid and transparent. And we’re not afraid for someone to tell us that we’re doing something stupid and to change course, as is necessary. I think that’s probably the most important thing, but it’s in our DNA that we serve customers that need this technology, not just want the technology. But their lives would get much, much, much better as a result.

Rose Dickey: So as it is today, people with wheelchairs can get into the Voyage cars, no problem.

Cameron: I wish. So unfortunately, we have tried wheelchair ramps for the specific type of Chrysler Pacifica that we use. It’s a no-go. It’s a long story, but it’s a vehicle they can’t retrofit to become wheelchair accessible.

But that said, we do look at new vehicle form factors. And we do look at new companies building new cars and all this great stuff to bake in from day one. Our app that customers use to summon our vehicles is entirely voice-over enabled, so folks that are vision impaired and hard of hearing can use our app to figure out where they want to go.

Rose Dickey: Okay, Clark.

Haynes: Sure. From Uber’s side, there’s a long history of having this equality in transportation. You go back to the studies on New York taxi cab medallions, the percent of trips that started outside of the city was about 5%. That’s about 50% now today with Uber. So we really believe that you should not be discriminated against in terms of transportation.

I think the other place where this is really key for Uber is this idea of optionality. Everyone here hopefully has the Uber app on their phone. You can see you have a lot of different options and we have built options that do wheelchair access. And when it comes to self-driving, that’s just going to be another option.

We strongly believe in a hybrid approach, where you’re not going to solve the self-driving problem overnight and tomorrow have 10,000 cameras, 10,000 cars on the road, servicing all mobility needs. It’s going to be a slow ramp up and having this hybrid approach where humans are going to be in some cars, and others are going to be self-driven. And you let the network dictate where the needs are.

And the needs can adapt to different people based upon where the demand is in the city, or even just the socioeconomic abilities to pay for different modes of transportation. So ranging all the way from your super luxe vehicle travel all the way down to just a scooter or a bike ride, just to get a few blocks. We believe that there really should be a lot of options.

Rose Dickey: But is Uber building these autonomous vehicles with that in mind?

Haynes: Yeah, I’d say like Oliver, not today. That’s not our mandate, but we want to build it into the network and the culture of that network. So it is part of the plan.

Rose Dickey: There are definitely some fears, especially since Uber, in essence, right now relies on human drivers, that self-driving is going to take away jobs from all of these people. So how do you think about making sure that there are still opportunities for the core Uber drivers?

Haynes: Yeah, I think this is the critical thing at least in terms of Uber. It’s wrong to think about the autonomous cars against the human-driven Ubers. Uber is still a very, very tiny fraction of all vehicle transport in the US. And we believe that we can really free up a lot of people’s time by upping that percentage.

So it’s really about personal car ownership versus rideshare. And human drivers and autonomous vehicles have a huge future in delivering on rideshare. And that’s really where we think it is.

Rose Dickey: Yeah, Oliver, you had these retirement communities where you’re operating. Was there something before Voyage? Did they have vehicles driving people around or not necessarily?

Cameron: So that was really one of the big reasons we focused on that specific category first, because there wasn’t an alternative to driving your own car. So my answer is a lot easier than Clark’s, which is that we’re not really replacing anything that currently exists.

In fact, there are just real challenges going on around the world where folks reach the end of their ability to drive but, because there is no good alternative, they continue driving. And sometimes they continue driving just in the daytime, and they’ll stop driving in the evenings.

But in many cases that’s entirely unsafe, for both those folks and the people around them. So what we want to be able to do is just give those folks that ability to say, “Okay, I can now give up my driver’s license and transition to something that’s hopefully way more affordable than even the person-driven car.”

Rose Dickey: Well, you’ve both left us all with a lot to think about. So thank you, but we’re unfortunately out of time.

Cameron: Thank you.

Haynes: Thank you.