A picture is worth 1,000 words, as the saying goes, and now a startup called Nuralogix is taking this idea to the next level: Soon, a selfie will be able to give you 1,000 diagnostics about the state of your health.

Anura, the company’s flagship health and wellness app, takes a 30-second selfie and uses the data from that to create a catalogue of readings about you. They include vital stats like heart rate and blood pressure; mental health-related diagnostics like stress and depression levels; details about your physical state like body mass index and skin age; your level of risk for things like hypertension, stroke and heart disease; and biomarkers like your blood sugar levels.

Some of these readings are more accurate than others, and they are being improved over time. Just today, to coincide with CES in Las Vegas, where I came across the company, Nuralogix announced that its contactless blood pressure measurements have become more accurate, now achieving a standard deviation of error of less than 8 mmHg.
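The accuracy figure describes the spread of errors between the app's readings and a reference measurement. As a minimal sketch, assuming paired readings from the app and a conventional cuff taken on the same people (the numbers below are invented for illustration and are not Nuralogix data; `error_summary` and `within_sd_limit` are hypothetical helper names), the mean and standard deviation of error can be computed like this:

```python
import statistics


def error_summary(device_mmhg, reference_mmhg):
    """Return the mean and sample standard deviation of (device - reference) errors."""
    errors = [d - r for d, r in zip(device_mmhg, reference_mmhg)]
    return statistics.mean(errors), statistics.stdev(errors)


def within_sd_limit(sd_mmhg, limit_mmhg=8.0):
    """True if the error spread is below the stated limit (8 mmHg by default)."""
    return sd_mmhg < limit_mmhg


# Hypothetical paired systolic readings in mmHg; not real Nuralogix data.
contactless = [122, 135, 118, 141, 128]
cuff = [120, 130, 121, 138, 126]

mean_err, sd_err = error_summary(contactless, cuff)
print(f"mean error {mean_err:.1f} mmHg, SD of error {sd_err:.2f} mmHg")
print("SD below 8 mmHg:", within_sd_limit(sd_err))
```

The mean error matters alongside the spread: a device can have a small standard deviation of error while still reading consistently high or low. The announcement, as reported here, cites only the standard deviation.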

Anura’s growth is part of a bigger trend in the worlds of medicine and wellness. The COVID-19 pandemic gave the world a prime opportunity to use and develop more remote health services, normalizing what many had thought of as experimental or suboptimal.

That, coupled with a rising awareness that regular monitoring can be key to preventing health problems, has led to a proliferation of health apps and devices on the market. Anura is far from the only one out there, but it’s a notable example of companies betting that low friction can yield big results. That has been something of a holy grail for modern medicine; it’s one reason so many people wanted Theranos to be real.

So while some pandemic-era behaviors are not sticking as firmly as people thought they might (e-commerce has not completely replaced in-person shopping, for one), observers believe there is a big future in tele-health, and in companies like Nuralogix that provide the means to deliver it.

Grand View Research estimates that tele-health was an $83.5 billion market globally in 2022, that it will balloon to $101.2 billion in 2023, and that it will grow at a CAGR of 24% through 2030, when it will be a $455.3 billion market.
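As a quick consistency check on the quoted market figures, here is a minimal sketch of the standard compound-annual-growth-rate arithmetic, assuming seven compounding years from 2023 to 2030 (the helper names `project_value` and `implied_cagr` are hypothetical):

```python
def project_value(start, rate, years):
    """Compound `start` at annual growth `rate` for `years` years."""
    return start * (1 + rate) ** years


def implied_cagr(start, end, years):
    """Annual growth rate that turns `start` into `end` over `years` years."""
    return (end / start) ** (1 / years) - 1


# Market sizes as quoted in the article (Grand View Research), in billions of US dollars.
size_2022, size_2023, size_2030 = 83.5, 101.2, 455.3

print(f"2022 -> 2023 growth: {size_2023 / size_2022 - 1:.1%}")
print(f"2023 size compounded at 24% to 2030: {project_value(size_2023, 0.24, 7):.1f}")
print(f"implied CAGR 2023 -> 2030: {implied_cagr(size_2023, size_2030, 7):.2%}")
```

Compounding $101.2 billion at 24% for seven years gives roughly $456 billion, and the rate implied by growing from $101.2 billion to $455.3 billion is just under 24%, so the figures agree once rounding is taken into account. The 2022 to 2023 jump works out to about 21%.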

The startup, which is based in Toronto, Canada, and backed by the city’s Mars Innovation effort (a consortium of universities and research groups helping to spin out academic research) and others, uses a B2B business model and counts Japan’s NTT and Spanish insurance provider Sanitas among its customers. It’s also talking to automotive companies that see potential in using the technology to detect, say, when a driver is getting tired or distracted, or is having some other kind of health crisis.

Right now, Anura’s results are positioned as guidance: “investigational” insights that complement other kinds of assessments. The company claims to be compliant with HIPAA, GDPR and other data protection regulations as part of its wider security policy, and it’s currently going through the FDA approval process so that its customers can use the results more proactively.

It also has a Lite version of the application (on iOS and Android) where individuals can get some — but not all — of these diagnostics.

The Lite version is worth looking at not just as a way for the company to publicize itself, but also as a window into how the company gathers data.

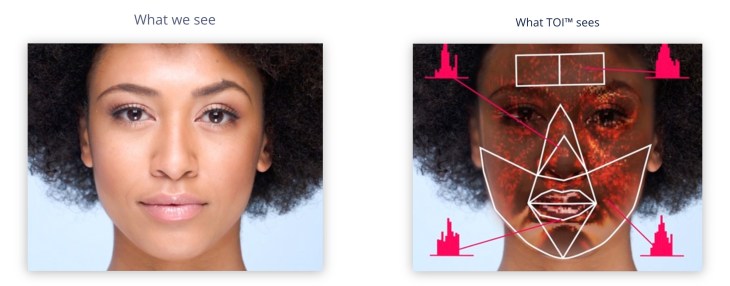

Nuralogix built Anura on the back of an AI that was trained on data from some 35,000 different users. A typical 30-second video of a user’s face is analyzed to see how blood moves around it. “Human skin is translucent,” the company notes. “Light and its respective wavelengths are reflected at different layers below the skin and can be used to reveal blood flow information in the human face.”
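Nuralogix has not published the details of its approach, so the following is not its method. It is a minimal sketch of a related, well-documented idea, remote photoplethysmography: each heartbeat slightly changes how much light the skin absorbs, which shows up as a faint periodic ripple in a face region’s average color (typically strongest in the green channel), and the dominant frequency of that ripple gives the heart rate. The trace below is synthetic, and `synthetic_green_trace` and `estimate_heart_rate_bpm` are hypothetical names; a real pipeline would also need face tracking, filtering and motion handling.

```python
import math
import random


def synthetic_green_trace(bpm, fps=30.0, seconds=30.0, seed=0):
    """Hypothetical stand-in for the mean green-channel value of a face region,
    one sample per video frame. A real trace would come from tracked video."""
    rng = random.Random(seed)
    n_frames = int(fps * seconds)
    pulse_hz = bpm / 60.0
    trace = []
    for i in range(n_frames):
        t = i / fps
        pulse = math.sin(2 * math.pi * pulse_hz * t)   # faint color change per heartbeat
        drift = 3.0 * math.sin(2 * math.pi * 0.1 * t)  # slow lighting/motion drift
        noise = rng.gauss(0.0, 0.2)                    # camera sensor noise
        trace.append(120.0 + drift + pulse + noise)
    return trace


def estimate_heart_rate_bpm(trace, fps, min_bpm=42, max_bpm=240):
    """Return the whole-number heart rate whose frequency carries the most
    spectral power in the mean-removed trace (a direct DFT scan)."""
    mean = sum(trace) / len(trace)
    centered = [v - mean for v in trace]
    best_bpm, best_power = min_bpm, -1.0
    for bpm in range(min_bpm, max_bpm + 1):
        freq_hz = bpm / 60.0
        real = imag = 0.0
        for i, v in enumerate(centered):
            angle = 2 * math.pi * freq_hz * i / fps
            real += v * math.cos(angle)
            imag -= v * math.sin(angle)
        power = real * real + imag * imag
        if power > best_power:
            best_bpm, best_power = bpm, power
    return best_bpm


if __name__ == "__main__":
    trace = synthetic_green_trace(bpm=72)
    print(estimate_heart_rate_bpm(trace, fps=30.0))
```

Running the script should print a value at or near 72, the rate the synthetic trace was built with. Scanning candidate rates directly keeps the sketch dependency-free; a production version would more likely use an FFT library.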

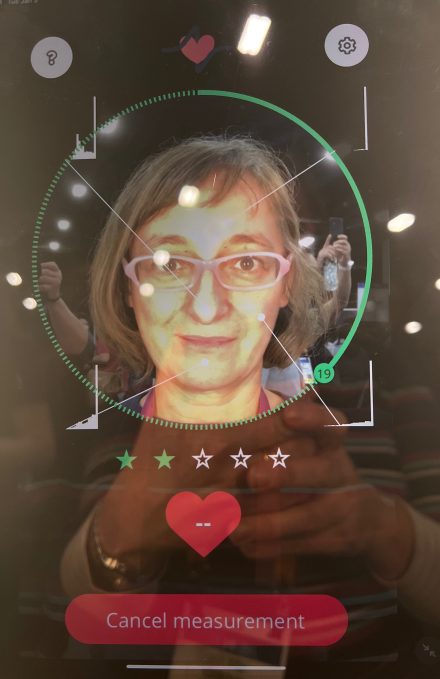

Ingrid testing out the app at CES. Image Credits: Ingrid Lunden

That in turn is matched with diagnostics taken from the same people using traditional measuring tools and uploaded to the company’s “DeepAffex” Affective AI engine. Users of the Anura app are then essentially “read” based on what the AI has been trained to see: blood moving in one direction or another, or a person’s skin color, can say a lot about how that person is doing physically and mentally.
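DeepAffex’s models are not public, and a real system would use many signals and far more complex models than a straight line. As a deliberately simplified sketch of the general supervised-learning pattern described here (pair a signal extracted from video with a reference measurement, learn a mapping, then apply it to new users), here is a one-feature least-squares fit. The feature, the numbers and the helper `fit_linear` are all hypothetical.

```python
def fit_linear(features, references):
    """Ordinary least squares fit of: reference = slope * feature + intercept."""
    n = len(features)
    mean_x = sum(features) / n
    mean_y = sum(references) / n
    sxx = sum((x - mean_x) ** 2 for x in features)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(features, references))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Hypothetical training pairs: a made-up blood-flow feature from video, and the
# systolic pressure measured with a conventional cuff on the same person.
flow_feature = [0.8, 1.0, 1.3, 1.5, 1.9]
cuff_systolic = [112, 118, 124, 131, 140]

slope, intercept = fit_linear(flow_feature, cuff_systolic)

# "Reading" a new user: apply the learned mapping to their feature value.
new_user_feature = 1.2
estimate = slope * new_user_feature + intercept
print(f"estimated systolic: {estimate:.0f} mmHg")
```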

DeepAffex has potential uses beyond tele-health diagnostics. Before its pivot to health, the company was applying its AI and this technique of “transdermal optical imaging” (which it shortens to TOI) to “read” faces for users’ emotions. One potential application was augmenting or even replacing traditional lie detector tests, which police and others regularly use to judge whether a person is telling the truth but which have been shown to be flawed.

There are also horizons that extend into hardware. The current version of Anura is an app accessed via smartphones or tablets, but longer term the company might also develop its own scanning devices, adding other kinds of facial scanning and tools such as infrared to pick up even more information and produce more diagnostics. (One area not currently covered, for example, is blood oxygen, which the company definitely wants to tackle.)

I tried out the full version of the Anura app this week in Las Vegas, and I have to say it’s a pretty compelling experience, one that is low-friction enough to likely catch on with a lot of people. (As a measure of that, the company’s demo had a permanent queue of people waiting to try it out.)