Research in the field of machine learning and AI, now a key technology in practically every industry and company, is far too voluminous for anyone to read it all. This column, Perceptron, aims to collect some of the most relevant recent discoveries and papers — particularly in, but not limited to, artificial intelligence — and explain why they matter.

Over the past few weeks, researchers at MIT have detailed their work on a system to track the progression of Parkinson’s in patients by continuously monitoring their gait speed. Elsewhere, Whale Safe, a project spearheaded by the Benioff Ocean Science Laboratory and partners, launched buoys equipped with AI-powered sensors in an experiment to prevent ships from striking whales. Other aspects of ecology and academia also saw advances powered by machine learning.

The MIT Parkinson’s-tracking effort aims to help clinicians overcome challenges in treating the estimated 10 million people afflicted by the disease globally. Typically, Parkinson’s patients’ motor skills and cognitive functions are evaluated during clinical visits, but these evaluations can be skewed by outside factors like tiredness. Add to that the fact that commuting to an office is too overwhelming a prospect for many patients, and their situation grows starker.

As an alternative, the MIT team proposes an at-home device that gathers data using radio signals reflecting off of a patient’s body as they move around their home. About the size of a Wi-Fi router, the device, which runs all day, uses an algorithm to pick out the patient’s signals even when there are other people moving around the room.

In a pilot study published in the journal Science Translational Medicine, the MIT researchers showed that their device was able to effectively track Parkinson’s progression and severity across dozens of participants. For instance, they showed that gait speed declined almost twice as fast for people with Parkinson’s compared to those without, and that daily fluctuations in a patient’s walking speed corresponded with how well they were responding to their medication.

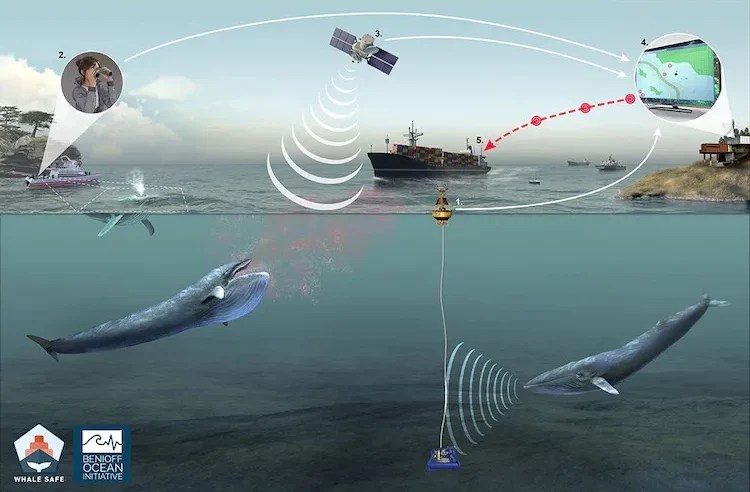

Moving from healthcare to the plight of whales, the Whale Safe project — whose stated mission is to “utilize best-in-class technology with best-practice conservation strategies to create a solution to reduce risk to whales” — in late September deployed buoys equipped with onboard computers that can record whale sounds using an underwater microphone. An AI system detects the sounds of particular species and relays the results to a researcher, so that the location of the animal — or animals — can be calculated by corroborating the data with water conditions and local records of whale sightings. The whales’ locations are then communicated to nearby ships so they can reroute as necessary.

Collisions with ships are a major cause of death for whales — many species of which are endangered. According to research carried out by the nonprofit Friend of the Sea, ship strikes kill more than 20,000 whales every year. That’s destructive to local ecosystems, as whales play a significant role in capturing carbon from the atmosphere. A single great whale can sequester around 33 tons of carbon dioxide on average.

Image Credits: Benioff Ocean Science Laboratory

Whale Safe currently has buoys deployed in the Santa Barbara Channel near the ports of Los Angeles and Long Beach. In the future, the project aims to install buoys in other North American coastal areas including Seattle, Vancouver and San Diego.

Conserving forests is another area where technology is being brought into play. Surveys of forest land from above using lidar are helpful in estimating growth and other metrics, but the data they produce aren’t always easy to read. Point clouds from lidar are just undifferentiated height and distance maps: the forest is one big surface, not a bunch of individual trees. Individual trees tend to have to be tracked by humans on the ground.

Purdue researchers have built an algorithm (not quite AI but we’ll allow it this time) that turns a big lump of 3D lidar data into individually segmented trees, allowing not just canopy and growth data to be collected but also a good estimate of the number of individual trees. It does this by calculating the most efficient path from a given point to the ground, essentially the reverse of what nutrients would do in a tree. The results are quite accurate (after being checked with an in-person inventory) and could contribute to far better tracking of forests and resources in the future.
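To make the "most efficient path to the ground" idea concrete, here is a minimal sketch in Python. It is not the Purdue team's implementation: the function name `segment_trees`, the `radius` parameter and the assumption that trunk-base points are already known are all hypothetical choices made for illustration. It links nearby points into a graph, runs a multi-source shortest-path search from each trunk base, and assigns every point to the tree whose base it reaches most cheaply.

```python
import heapq
import math


def segment_trees(points, roots, radius):
    """Label each point with the trunk base it reaches by the shortest path.

    points: list of (x, y, z) tuples (a toy stand-in for a lidar point cloud).
    roots:  indices of points assumed to be trunk bases (hypothetical input).
    radius: maximum distance at which two points count as connected.
    Returns a list where entry i is an index into roots, or None if unreachable.
    """
    n = len(points)
    # Brute-force neighbour graph, O(n^2); acceptable for a toy example only.
    neighbours = [[] for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            d = math.dist(points[i], points[j])
            if d <= radius:
                neighbours[i].append((j, d))
                neighbours[j].append((i, d))

    dist = [math.inf] * n
    label = [None] * n
    heap = []
    for tree_id, r in enumerate(roots):
        dist[r] = 0.0
        label[r] = tree_id
        heapq.heappush(heap, (0.0, r))

    # Multi-source Dijkstra: a point inherits the label of the root
    # that offers it the shortest path through the point graph.
    while heap:
        d, u = heapq.heappop(heap)
        if d > dist[u]:
            continue  # stale queue entry
        for v, w in neighbours[u]:
            nd = d + w
            if nd < dist[v]:
                dist[v] = nd
                label[v] = label[u]
                heapq.heappush(heap, (nd, v))
    return label


# Two toy trees whose canopies touch (points 4 and 9 are within radius).
points = [
    (0, 0, 0), (0, 0, 1), (0, 0, 2), (0.5, 0, 2.5), (1.2, 0, 2.7),  # tree 0
    (3, 0, 0), (3, 0, 1), (3, 0, 2), (2.5, 0, 2.5), (1.9, 0, 2.7),  # tree 1
]
labels = segment_trees(points, roots=[0, 5], radius=1.2)
print(labels)
```

Even though the two canopies are connected in the point graph, each canopy point ends up with the tree whose trunk gives it the shorter route to the ground. A real pipeline would need a spatial index instead of the brute-force neighbour search, plus a way to detect trunk bases.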

Self-driving cars are appearing on our streets with more frequency these days, even if they’re still basically just beta tests. As their numbers grow, how should policymakers and civil engineers accommodate them? Carnegie Mellon researchers put together a policy brief that makes a few interesting arguments.

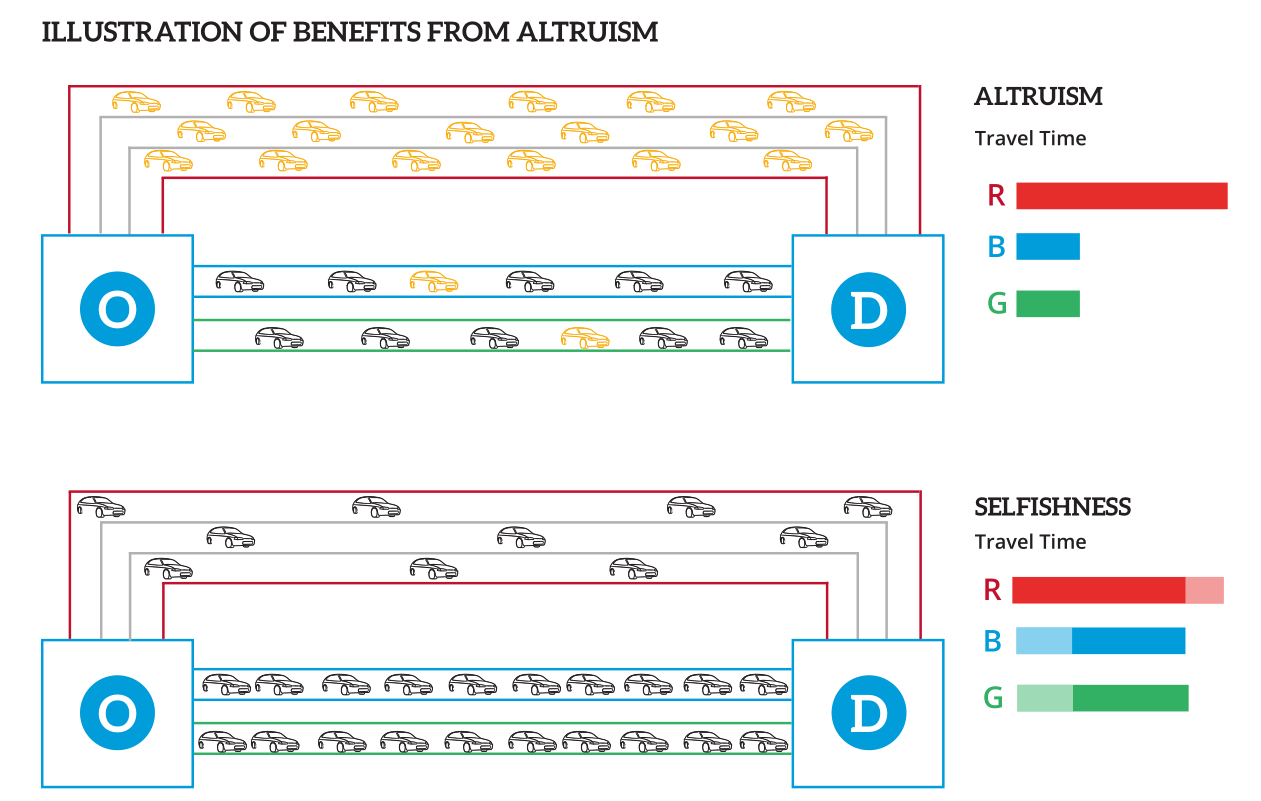

Diagram showing how collaborative decision making, in which a few cars opt for a longer route, actually makes the trip faster for most. Image Credits: Carnegie Mellon University

The key difference from human drivers, they argue, is that autonomous vehicles drive “altruistically,” which is to say they deliberately accommodate other drivers by, say, always allowing them to merge ahead. This type of behavior can be taken advantage of, but at a policy level it should be rewarded, the researchers say, and AVs should be given access to things like toll roads and HOV and bus lanes, since they won’t use them “selfishly.”

They also recommend that planning agencies take a real zoomed-out view when making decisions, involving other transportation types like bikes and scooters and looking at how inter-AV and inter-fleet communication should be required or augmented. You can read the full 23-page report here (PDF).
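The routing claim in the Carnegie Mellon diagram, that a few cars taking a longer route can speed things up for most, can be illustrated with a textbook toy network known as Pigou's example. This is an illustrative sketch, not the network or model used in the policy brief, and the function name `average_travel_time` is hypothetical. There are two routes: a short one whose travel time equals the fraction of traffic using it, and a long one that always takes one time unit.

```python
def average_travel_time(fraction_on_short):
    """Average trip time in a two-route toy network (Pigou's example).

    The short route congests: its travel time equals the fraction of
    traffic using it. The long route always takes 1.0 time unit.
    """
    x = fraction_on_short
    short_time = x      # grows with congestion, never worse than 1.0
    long_time = 1.0     # fixed
    return x * short_time + (1 - x) * long_time


selfish = average_travel_time(1.0)       # everyone picks the short route
cooperative = average_travel_time(0.75)  # a quarter of cars take the long way
print(selfish, cooperative)  # 1.0 0.8125
```

When every driver picks the short route, everyone's trip takes 1.0. When a quarter of the cars defer to the long route, those cars still take 1.0, so they are no worse off, while the remaining three quarters take 0.75.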

Turning from traffic to translation, Meta this past week announced a new system, Universal Speech Translator, that’s designed to interpret unwritten languages like Hokkien. As an Engadget piece on the system notes, thousands of spoken languages don’t have a written component, posing a problem for most machine learning translation systems, which typically need to convert speech to written words, translate that text into the new language and then convert the result back to speech.

To get around the lack of labeled examples of language, Universal Speech Translator converts speech into “acoustic units” and then generates waveforms. Currently, the system is rather limited in what it can do — it allows speakers of Hokkien, a language commonly used in southeastern mainland China, to translate to English one full sentence at a time. But the Meta research team behind Universal Speech Translator believes that it’ll continue to improve.

Illustration for AlphaTensor. Image Credits: DeepMind

Elsewhere within the AI field, researchers at DeepMind detailed AlphaTensor, which the Alphabet-backed lab claims is the first AI system for discovering new, efficient and “provably correct” algorithms. AlphaTensor was designed specifically to find new techniques for matrix multiplication, a math operation that’s core to the way modern machine learning systems work.

To leverage AlphaTensor, DeepMind converted the problem of finding matrix multiplication algorithms into a single-player game where the “board” is a three-dimensional array of numbers called a tensor. According to DeepMind, AlphaTensor learned to excel at it, improving an algorithm first discovered 50 years ago and discovering new algorithms with “state-of-the-art” complexity. One algorithm the system discovered, optimized for hardware such as Nvidia’s V100 GPU, was 10% to 20% faster than commonly used algorithms on the same hardware.
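For a sense of what a faster matrix multiplication algorithm looks like, consider Strassen's 1969 method, the classic result in this area and presumably the roughly 50-year-old algorithm referred to above. The schoolbook way to multiply two 2x2 matrices uses eight scalar multiplications; Strassen's uses seven, at the cost of extra additions. The sketch below shows only that classic scheme, not any algorithm found by AlphaTensor.

```python
def strassen_2x2(A, B):
    """Multiply two 2x2 matrices using 7 scalar multiplications (Strassen, 1969)."""
    (a11, a12), (a21, a22) = A
    (b11, b12), (b21, b22) = B

    # The seven products; the schoolbook method would need eight.
    m1 = (a11 + a22) * (b11 + b22)
    m2 = (a21 + a22) * b11
    m3 = a11 * (b12 - b22)
    m4 = a22 * (b21 - b11)
    m5 = (a11 + a12) * b22
    m6 = (a21 - a11) * (b11 + b12)
    m7 = (a12 - a22) * (b21 + b22)

    # Recombine the products using additions and subtractions only.
    return [
        [m1 + m4 - m5 + m7, m3 + m5],
        [m2 + m4, m1 - m2 + m3 + m6],
    ]


print(strassen_2x2([[1, 2], [3, 4]], [[5, 6], [7, 8]]))  # [[19, 22], [43, 50]]
```

Because the same trick applies when the entries are themselves matrix blocks, saving one multiplication at each level of recursion adds up to a real speedup on large matrices. Searching for recipes like this one, with as few multiplications as possible, is the game AlphaTensor was set up to play.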