As interest in large AI models grows, particularly large language models (LLMs) like OpenAI’s GPT-3, Nvidia is looking to cash in with new fully managed, cloud-powered services geared toward enterprise software developers. Today at the company’s fall 2022 GTC conference, Nvidia announced the NeMo LLM Service and BioNeMo LLM Service, which ostensibly make it easier to adapt LLMs and deploy AI-powered apps for a range of use cases including text generation and summarization, protein structure prediction and more.

The new offerings are part of Nvidia’s NeMo, an open source toolkit for conversational AI, and they’re designed to minimize, or even eliminate, the need for developers to build LLMs from scratch. LLMs are frequently expensive to develop and train: one recent model, Google’s PaLM, is estimated to have cost $9 million to $23 million to train on publicly available cloud computing resources.

Using the NeMo LLM Service, developers can create models ranging in size from 3 billion to 530 billion parameters with custom data in minutes to hours, Nvidia claims. (Parameters are the parts of the model learned from historical training data — in other words, the variables that inform the model’s predictions, like the text it generates.) Models can be customized using a technique called prompt learning, which Nvidia says allows developers to tailor models trained with billions of data points for particular, industry-specific applications — e.g. a customer service chatbot — using a few hundred examples.
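To give a rough sense of why a few hundred examples might be enough, here is a minimal sketch of the arithmetic behind one common form of prompt learning, soft prompt tuning, in which the model's own parameters stay frozen and only a short sequence of "virtual token" embeddings is trained. Whether Nvidia's service works exactly this way is not confirmed here; the function names, the count of 100 virtual tokens and the hidden size of 20,480 are illustrative assumptions, not published specifications of the service.

```python
# Sketch only: compares the number of trainable values in soft prompt tuning
# with the size of the frozen model. All concrete numbers are assumptions
# chosen for illustration.

def soft_prompt_param_count(num_virtual_tokens, hidden_size):
    """Trainable values when only the prompt embeddings are learned.

    Each virtual token is one vector with `hidden_size` entries.
    """
    return num_virtual_tokens * hidden_size


def trainable_fraction(num_virtual_tokens, hidden_size, model_params):
    """Share of the full model that prompt tuning would actually update."""
    return soft_prompt_param_count(num_virtual_tokens, hidden_size) / model_params


if __name__ == "__main__":
    model_params = 530_000_000_000   # largest model size quoted in the article
    hidden_size = 20_480             # assumed embedding width (hypothetical)
    num_virtual_tokens = 100         # assumed prompt length (hypothetical)

    tuned = soft_prompt_param_count(num_virtual_tokens, hidden_size)
    share = trainable_fraction(num_virtual_tokens, hidden_size, model_params)
    print(f"trainable prompt parameters: {tuned:,}")
    print(f"share of full model: {share:.2e}")
```

Under these assumptions, only about two million values are trained while the hundreds of billions of pretrained parameters are left untouched, which is one plausible reason a small, task-specific dataset can suffice.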

Developers can customize models for multiple use cases in a no-code “playground” environment, which also offers features for experimentation. Once ready to deploy, the tuned models can run on cloud instances, on-premises systems or through an API.

The BioNeMo LLM Service is similar to the NeMo LLM Service, but with tweaks for life sciences customers. Part of Nvidia’s Clara Discovery platform and soon available in early access on Nvidia GPU Cloud, it includes two language models for chemistry and biology applications as well as support for protein, DNA and chemistry data, Nvidia says.

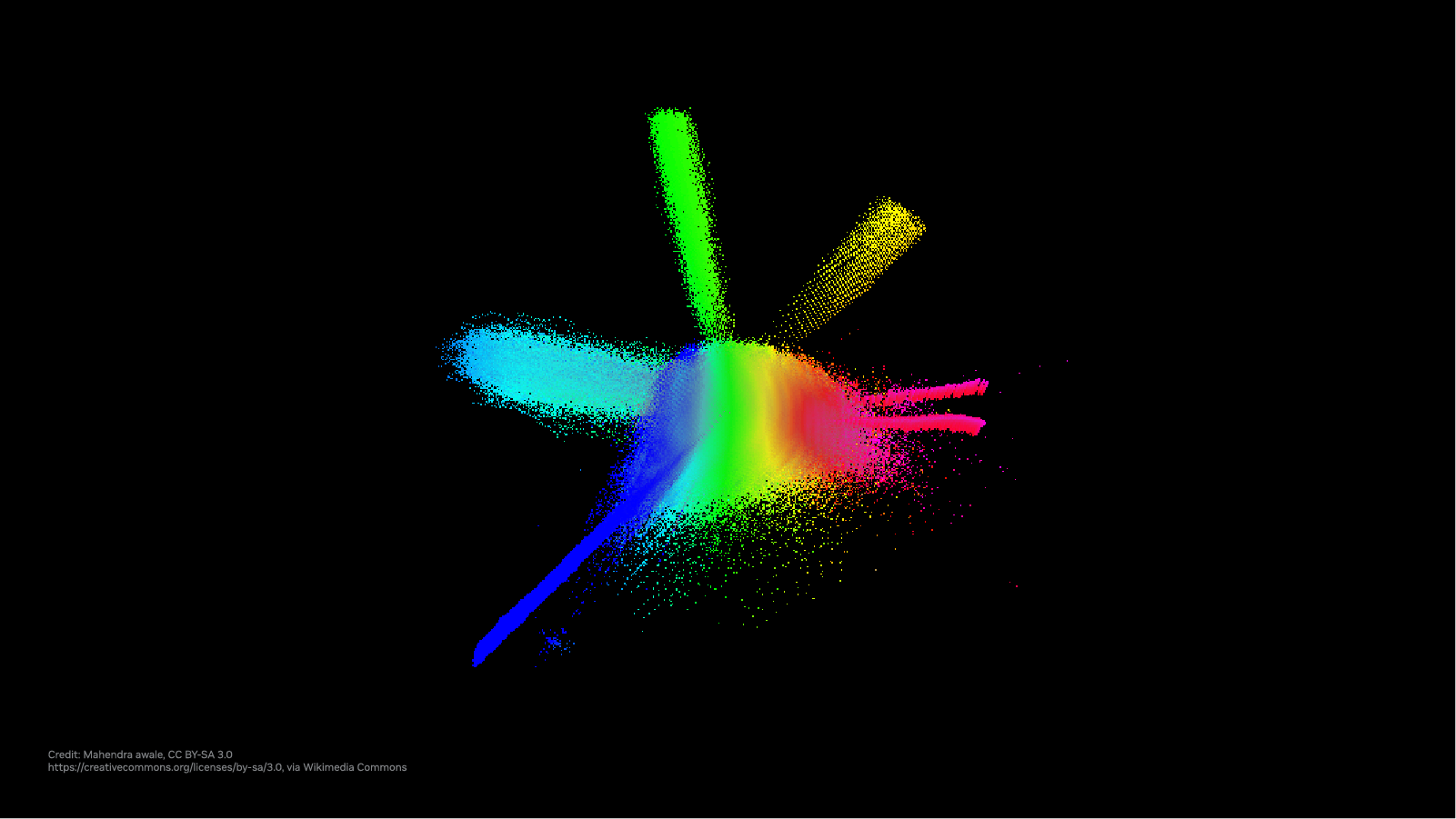

Visualization of bio processes predicted by AI models.

BioNeMo LLM will include four pretrained language models to start, including a model from Meta’s AI R&D division, Meta AI Labs, that processes amino acid sequences to generate representations that can be used to predict protein properties and functions. Nvidia says that in the future, researchers using the BioNeMo LLM Service will be able to customize the LLMs for higher accuracy.

Recent research has shown that LLMs are remarkably good at predicting certain biological processes. That’s because structures like proteins can be modeled as a sort of language, one with a dictionary (amino acids) strung together to form a sentence (protein). For example, Salesforce’s R&D division several years ago created an LLM called ProGen that can generate structurally and functionally viable sequences of proteins.
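The "protein as language" analogy can be made concrete with a short sketch. The snippet below treats the 20 standard amino acids, written as one-letter codes, as the dictionary and converts a protein sequence into the integer token IDs a language model would consume. This is a simplified illustration, not the tokenizer used by ProGen or BioNeMo; the ID assignment, the function names and the example sequence are all hypothetical.

```python
# Sketch only: a toy amino acid tokenizer. Real protein language models
# typically add special tokens (start, end, padding, unknown) on top of this.

# One-letter codes for the 20 standard amino acids, in alphabetical order.
AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"
TOKEN_IDS = {residue: index for index, residue in enumerate(AMINO_ACIDS)}


def tokenize(sequence):
    """Map a protein sequence (the "sentence") to a list of integer IDs."""
    ids = []
    for residue in sequence.upper():
        if residue not in TOKEN_IDS:
            raise ValueError(f"unknown residue: {residue!r}")
        ids.append(TOKEN_IDS[residue])
    return ids


def detokenize(ids):
    """Map integer IDs back to the one-letter sequence."""
    return "".join(AMINO_ACIDS[i] for i in ids)


if __name__ == "__main__":
    peptide = "MKTAYIAK"  # hypothetical short sequence, for illustration only
    ids = tokenize(peptide)
    print(ids)              # [10, 8, 16, 0, 19, 7, 0, 8]
    print(detokenize(ids))  # MKTAYIAK
```

Once a sequence is in this form, it can be fed to the same kind of next-token or masked-token training used for text, which is why architectures built for natural language carry over to proteins.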

Both the BioNeMo LLM Service and the NeMo LLM Service include the option to use ready-made and custom models through a cloud API. Usage of the services also grants customers access to the NeMo Megatron framework, now in open beta, which allows developers to build a range of multilingual LLMs, including GPT-3-type language models.

Nvidia says that automotive, computing, education, healthcare and telecommunications brands are currently using NeMo Megatron to launch AI-powered services in Chinese, English, Korean and Swedish.

The NeMo LLM and BioNeMo services and cloud APIs are expected to be available in early access starting next month. As for the NeMo Megatron framework, developers can try it via Nvidia’s LaunchPad piloting platform at no charge.