Research in the field of machine learning and AI, now a key technology in practically every industry and company, is far too voluminous for anyone to read it all. This column, Perceptron, aims to collect some of the most relevant recent discoveries and papers — particularly in, but not limited to, artificial intelligence — and explain why they matter.

Over the past few weeks, scientists developed an algorithm to uncover fascinating details about the moon’s dimly lit — and in some cases pitch-black — asteroid craters. Elsewhere, MIT researchers trained an AI model on textbooks to see whether it could independently figure out the rules of a specific language. And teams at DeepMind and Microsoft investigated whether motion capture data could be used to teach robots how to perform specific tasks, like walking.

With the pending (and predictably delayed) launch of Artemis I, lunar science is again in the spotlight. Ironically, however, it is the darkest regions of the moon that are potentially the most interesting, since they may house water ice that can be used for countless purposes. It’s easy to spot the darkness, but what’s in there? An international team of image experts has applied ML to the problem with some success.

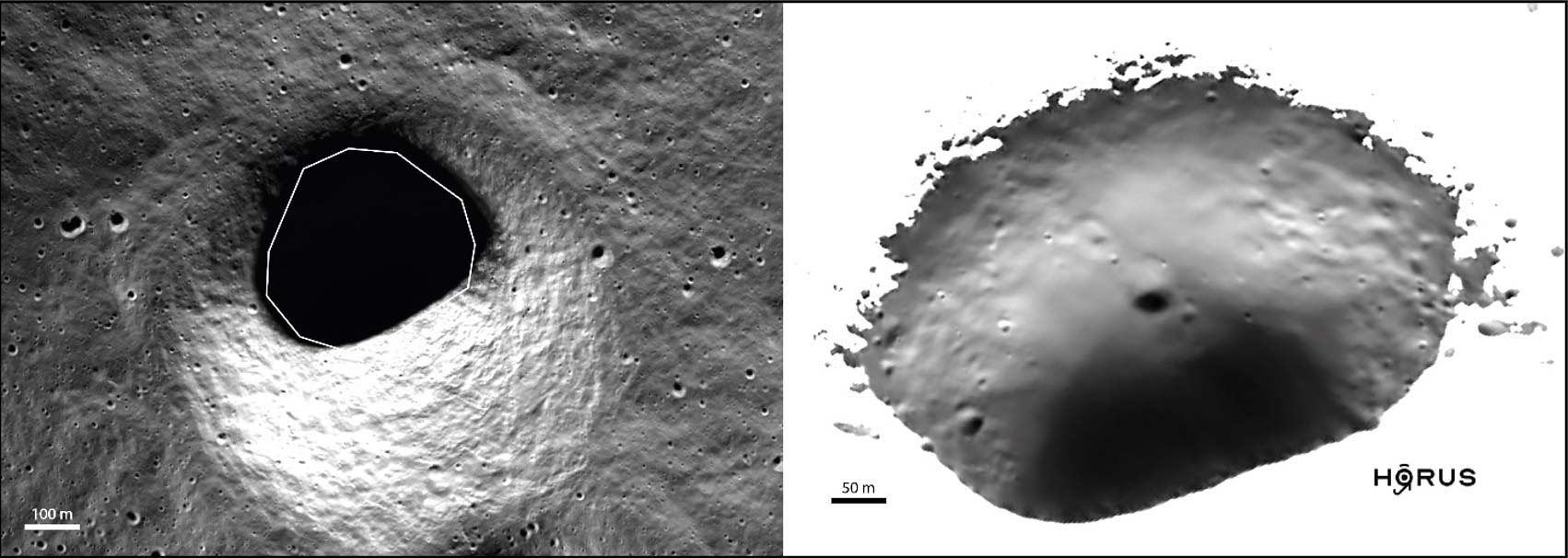

Though the craters lie in deepest darkness, the Lunar Reconnaissance Orbiter still captures the occasional photon from within, and the team put together years of these underexposed (but not totally black) exposures with a “physics-based, deep learning-driven post-processing tool” described in Geophysical Research Letters. The result is that “visible routes into the permanently shadowed regions can now be designed, greatly reducing risks to Artemis astronauts and robotic explorers,” according to David Kring of the Lunar and Planetary institute.

Let there be light! The interior of the crater is reconstructed from stray photons. Image Credits: V. T. Bickel, B. Moseley, E. Hauber, M. Shirley, J.-P. Williams and D. A. Kring

They’ll have flashlights, we imagine, but it’s good to have a general idea of where to go beforehand, and of course it could affect where robotic exploration or landers focus their efforts.

However useful, there’s nothing mysterious about turning sparse data into an image. But in the world of linguistics, AI is making fascinating inroads into how and whether language models really know what they know. In the case of learning a language’s grammar, an MIT experiment found that a model trained on multiple textbooks was able to build its own model of how a given language worked, to the point where its grammar for Polish, say, could successfully answer textbook problems about it.

“Linguists have thought that in order to really understand the rules of a human language, to empathize with what it is that makes the system tick, you have to be human. We wanted to see if we can emulate the kinds of knowledge and reasoning that humans (linguists) bring to the task,” said MIT’s Adam Albright in a news release. It’s very early research on this front but promising in that it shows that subtle or hidden rules can be “understood” by AI models without explicit instruction in them.

But the experiment didn’t directly address a key, open question in AI research: how to prevent language models from outputting toxic, discriminatory or misleading language. New work out of DeepMind does tackle this, taking a philosophical approach to the problem of aligning language models with human values.

Researchers at the lab posit that there’s no “one-size-fits-all” path to better language models, because the models need to embody different traits depending on the contexts in which they’re deployed. For example, a model designed to assist in scientific study would ideally only make true statements, while an agent playing the role of a moderator in a public debate would exercise values like toleration, civility and respect.

So how can these values be instilled in a language model? The DeepMind co-authors don’t suggest one specific way. Instead, they imply models can cultivate more “robust” and “respectful” conversations over time via processes they call context construction and elucidation. As the co-authors explain: “Even when a person is not aware of the values that govern a given conversational practice, the agent may still help the human understand these values by prefiguring them in conversation, making the course of communication deeper and more fruitful for the human speaker.”

Google’s LaMDA language model responding to a question. Image Credits: Google

Sussing out the most promising methods to align language models takes immense time and resources — financial and otherwise. But in domains beyond language, particularly scientific domains, that might not be the case for much longer, thanks to a $3.5 million grant from the National Science Foundation (NSF) awarded to a team of scientists from the University of Chicago, Argonne National Laboratory and MIT.

With the NSF grant, the recipients plan to build what they describe as “model gardens,” or repositories of AI models designed to solve problems in areas like physics, mathematics and chemistry. The repositories will link the models with data and computing resources as well as automated tests and screens to validate their accuracy, ideally making it simpler for scientific researchers to test and deploy the tools in their own studies.

“A user can come to the [model] garden and see all that information at a glance,” Ben Blaiszik, a data science researcher at Globus Labs involved with the project, said in a press release. “They can cite the model, they can learn about the model, they can contact the authors, and they can invoke the model themselves in a web environment on leadership computing facilities or on their own computer.”

Meanwhile, over in the robotics domain, researchers are building a platform for AI models not with software, but with hardware — neuromorphic hardware to be exact. Intel claims the latest generation of its experimental Loihi chip can enable an object recognition model to “learn” to identify an object it’s never seen before using up to 175 times less power than if the model were running on a CPU.

A humanoid robot equipped with one of Intel’s experimental neuromorphic chips. Image Credits: Intel

Neuromorphic systems attempt to mimic the biological structures in the nervous system. While traditional machine learning systems are either fast or power efficient, neuromorphic systems achieve both speed and efficiency by using nodes to process information and connections between the nodes to transfer electrical signals using analog circuitry. The systems can modulate the amount of power flowing between the nodes, allowing each node to perform processing — but only when required.

Intel and others believe that neuromorphic computing has applications in logistics, for example powering a robot built to help with manufacturing processes. It’s theoretical at this point — neuromorphic computing has its downsides — but perhaps one day, that vision will come to pass.

Image Credits: DeepMind

Closer to reality is DeepMind’s recent work in “embodied intelligence,” or using human and animal motions to teach robots to dribble a ball, carry boxes and even play football. Researchers at the lab devised a setup to record data from motion trackers worn by humans and animals, from which an AI system learned to infer how to complete new actions, like how to walk in a circular motion. The researchers claim that this approach translated well to real-world robots, for example allowing a four-legged robot to walk like a dog while simultaneously dribbling a ball.

Coincidentally, Microsoft earlier this summer released a library of motion capture data intended to spur research into robots that can walk like humans. Called MoCapAct, the library contains motion capture clips that, when used with other data, can be used to create agile bipedal robots — at least in simulation.

“[Creating this dataset] has taken the equivalent of 50 years over many GPU-equipped [servers] … a testament to the computational hurdle MoCapAct removes for other researchers,” the co-authors of the work wrote in a blog post. “We hope the community can build off of our dataset and work to do incredible research in the control of humanoid robots.”

Peer review of scientific papers is invaluable human work, and it’s unlikely AI will take over there, but it may actually help make sure that peer reviews are actually helpful. A Swiss research group has been looking at model-based evaluation of peer reviews, and their early results are mixed — in a good way. There wasn’t some obvious good or bad method or trend, and publication impact rating didn’t seem to predict whether a review was thorough or helpful. That’s okay though, because although quality of reviews differs, you wouldn’t want there to be a systematic lack of good review everywhere but major journals, for instance. Their work is ongoing.

Last, for anyone concerned about creativity in this domain, here’s a personal project by Karen X. Cheng that shows how a bit of ingenuity and hard work can be combined with AI to produce something truly original.