Ofcom, the U.K.’s soon-to-be social media harms watchdog under incoming online safety legislation, has warned tech platforms that they are failing to take women’s safety seriously.

Publishing new research (PDF) into the nation’s online habits today, Ofcom said it has found that female internet users in the U.K. are less confident about their online safety than men, as well as being more affected by discriminatory, hateful and trolling content.

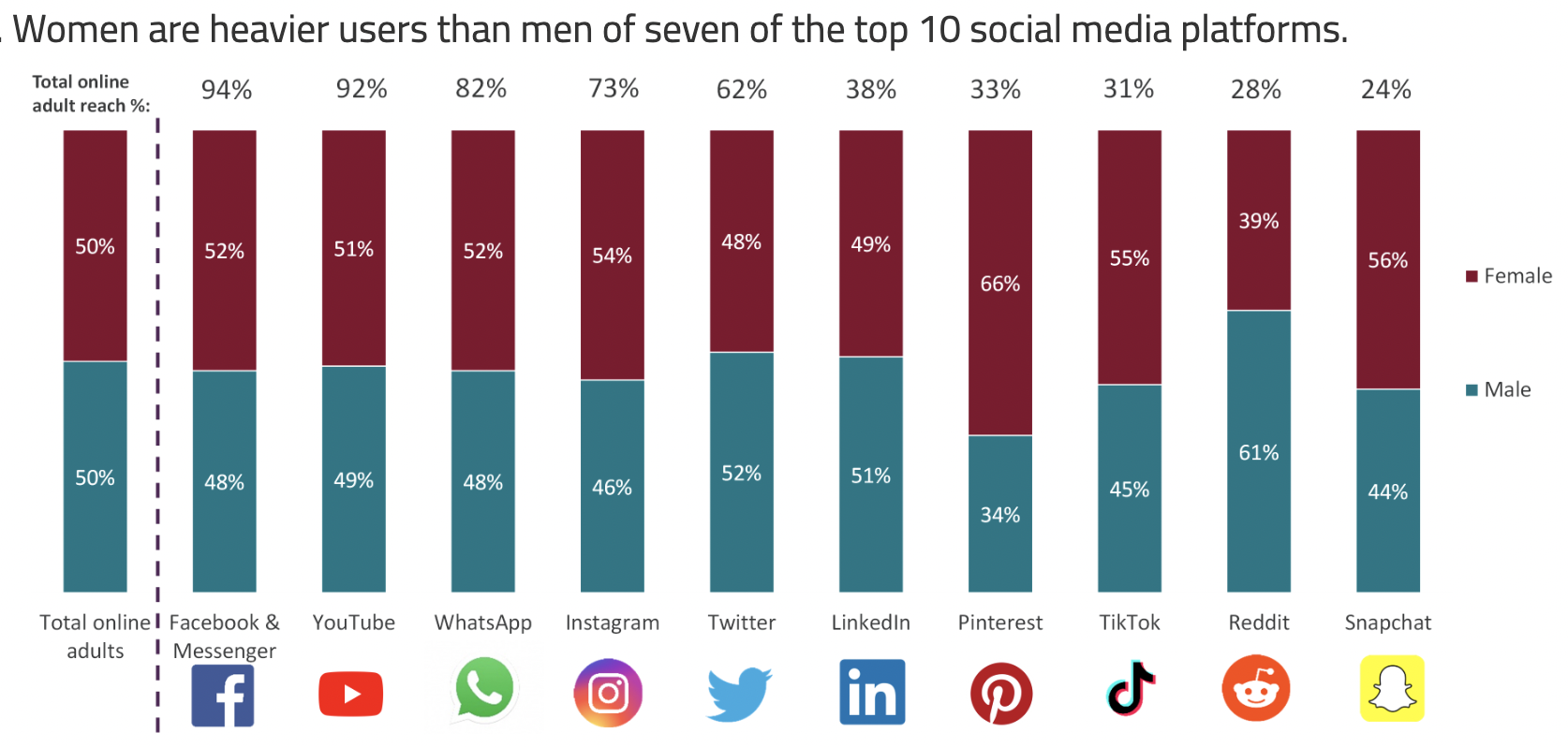

Its study, for which the regulator polled some 6,000 Brits about their online experiences and habits, also indicates that women feel less able to have a voice and share their opinions on the web than their male counterparts. That is despite another finding from the study: women tend to be more avid users of the internet and major social media services.

Ofcom found women spend more than a quarter of their waking hours online, about 25 minutes more each day than men (4 hrs 11 min vs. 3 hrs 46 min).

Image Credits: Ofcom’s Online Nation 2022 report

The regulator is urging tech companies to listen to its findings and take action now to make their platforms more welcoming and safe for women and girls.

The regulator doesn’t yet have formal powers to force platforms to change how they operate. But under the Online Safety Bill currently before Parliament, which is set to introduce a duty of care on platforms to protect users from a range of illegal and other types of harms, it will be able to fine rule-breakers up to 10% of their global annual turnover. So Ofcom’s remarks can be seen as a warning shot across the bows of social media giants like Meta, the owner of Facebook and Instagram, which will face close operational scrutiny from the regulator once the law is passed and comes into effect, likely next year.

In a statement accompanying the research, Ofcom’s CEO, Melanie Dawes, said:

“The message from women who go online is loud and clear. They are less confident about their personal online safety and feel the negative effects of harmful content like trolling more deeply.

“We urge tech companies to take women’s online safety concerns seriously and place people’s safety at the heart of their services. That includes listening to feedback from users when they design their services and the algorithms that serve up content.”

Discussing the findings with BBC Radio 4’s “Today” program this morning, Dawes further emphasized that the research shows — “on every measure” — that women feel less positive about being online than men do. “They simply feel less safe and they’re more deeply affected by hate speech and trolling,” she added. “As a result there’s a chilling effect, to be honest — women feel less able to share their opinions online and less able to have their voices heard.”

Another finding from the research highlights the greater impact negative online experiences can have on women’s mental health, especially for younger women and Black women. Ofcom found that women aged 18-34 were more likely than any other group to disagree with the statement that “being online has a positive effect on my mental health” (23% vs. 14% for the average U.K. adult, and 12% of men). Nearly a quarter (23%) of Black women also disagreed with the statement, a higher share than among white women (16%) and Asian women (12%), per Ofcom.

“We think the social media companies need to take more action,” Dawes also told the BBC, indicating how Ofcom would like Big Tech to respond. “They need to talk to women on their services, find out what they think, give them the tools to report harm when they find it and above all show they’re acting when something’s gone wrong.”

Asked about the new role Ofcom will be taking on in regulating social media giants under the Online Safety Bill, Dawes welcomed the incoming legislation, and her response suggested the regulator will be paying close attention to social media giants’ content-sorting and amplifying algorithms.

“I think there’s a lot that the social media companies can do. We think they need to look at their algorithms and what goes viral because too often companies place their growth and their revenues above public safety. And some of the worst harms are caused, not so much by individual posts, but actually when things go viral and are shared with hundreds of thousands of people,” she said.

“I’d also say to the social media companies: Look at yourselves, look at where the women are in your businesses — because we know that most tech and engineering teams, these are the people who are actually developing new services, are made up of men so the companies need to make a special effort to get women’s voices heard.”

She suggested Ofcom will be directing the lion’s share of effort and resource toward “the big social media apps,” which she noted is where the research shows online Brits are spending most of their time.

“The legislation that’s going through Parliament is really clear that the obligations are highest on those biggest and most high-reach services and that’s where we’ll be focusing our effort,” she said, adding: “And we’re going to be very careful … to make sure that we think about competition and to make sure that we don’t stifle innovation and that we make it easier for smaller companies to grow and to flourish.”

She also argued against concerns that the bill will make it harder for new entrants to compete against better-resourced tech giants that can throw more money at compliance, suggesting instead that the regulation will help smaller businesses and new entrants by creating “clearer expectations so it’s easier for them to know what they need to do to protect the public.”

On enforcement, Dawes indicated social media giants will also be first in line — saying Ofcom will “absolutely be going in there and asking for information as soon as the bill is live next year and asking the social media companies what they’re doing — and above all what they’re doing to prevent these problems by how they redesign their services.”

She was also quizzed on how the regulator will negotiate the fuzzy issue of content that’s legal but may be offensive to some web users. The U.K.’s approach with the Online Safety Bill proposes to regulate how platforms respond to illegal speech but ministers want it to go much further and tackle a far wider array of potentially problematic but not technically illegal speech (such as trolling, insults, certain types of threats, etc.) — an approach which continues to cause huge concern about the legislation’s impact on freedom of expression.

The Ofcom CEO described this element of the bill as “important,” while saying she expects there will be a lot of debate over the detail as the legislation goes through Parliament.

But she also pointed back to the research findings — reiterating that women have a consistently more negative online experience than men and are more likely to suffer online abuse, adding: “So this is a problem and I’m afraid it’s getting worse.”