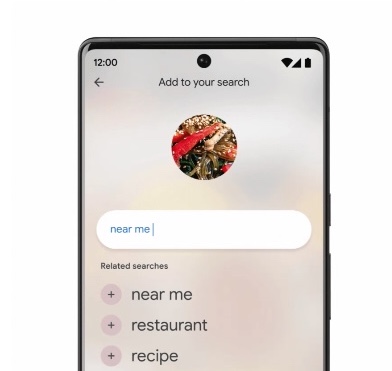

In April, Google introduced a new “multisearch” feature that offered a way to search the web using both text and images at the same time. Today, at Google’s I/O developer conference, the company announced an expansion to this feature, called “Multisearch Near Me.” This addition, arriving later in 2022, will allow Google app users to combine either a picture or a screenshot with the text “near me” to be directed to options for local retailers or restaurants that would have the apparel, home goods or food you’re in search of. It’s also announcing a forthcoming development to multisearch that appears to be built with AR glasses in mind, as it can visually search across multiple objects in a scene based on what you’re currently “seeing” via a smartphone camera’s viewfinder.

With the new “near me” multisearch query, you’ll be able to find local options related to your current visual and text-based search combination. For example, if you were working on a DIY project and came across a part that you needed to replace, you could snap a photo of the part with your phone’s camera to identify it and then find a local hardware store that has a replacement in stock.

This isn’t all that different from how multisearch already works, Google explains — it’s just adding the local component.

Image Credits: Google

Originally, the idea with multisearch was to allow users to ask questions about an object in front of them and refine those results by color, brand or other visual attributes. The feature today works best with shopping searches, as it allows users to narrow down product searches in a way that standard text-based web searches could sometimes struggle with. For instance, a user could snap a photo of a pair of sneakers, then add text asking to see them in blue, and be shown just those shoes in the specified color. They could also choose to visit the website for the sneakers and purchase them immediately. The expansion to include the “near me” option now simply limits the results further in order to point users to a local retailer where the given product is available.

In terms of helping users find local restaurants, the feature works similarly. In this case, a user could search based on a photo they found on a food blog or somewhere else on the web to learn what the dish is and which local restaurants might have the option on their menu for dine-in, pickup or delivery. Here, Google Search combines the image with the intent that you’re in search of a nearby restaurant and will scan across millions of images, reviews and community contributions to Google Maps to find the local spot.

The new “near me” feature will be available globally in English and will roll out to more languages over time, Google says.

The more interesting addition to multisearch is the capability to search within a scene. In the future, Google says users will be able to pan their camera around to learn about multiple objects within that wider scene.

Google suggests the feature could be used to scan the shelves at a bookstore, then see several helpful insights overlaid in front of you.

Image Credits: Google

“To make this possible, we bring together not only computer vision, natural language understanding, but we also bring that together with the knowledge of the web and on-device technology,” noted Nick Bell, senior director, Google Search. “So the possibilities and the capabilities of this are going to be huge and significant,” he added.

The company — which came to the AR market early with its Google Glass release — didn’t confirm it had any sort of new AR glasses-type device in the works, but hinted at the possibility.

“With AI systems now, what’s possible today — and going to be possible over the next few years — just kind of unlocks so many opportunities,” said Bell. In addition to voice search, desktop and mobile search, the company believes visual search will also be a bigger part of the future, he noted.

“There are 8 billion visual searches on Google with Lens every single month now and that number is three times the size that it was just a year ago,” Bell continued. “What we’re definitely seeing from people is that the appetite and the desire to search visually is there. And what we’re trying to do now is lean into the use cases and identify where this is most useful,” he said. “I think as we think about the future of search, visual search is definitely a key part of that.”

The company, of course, is reportedly working on a secret project, codenamed Project Iris, to build a new AR headset with a projected 2024 release date. It’s easy to imagine not only how this scene-scanning capability could run on such a device, but also how any sort of image-plus-text (or voice!) search feature could be used on an AR headset. Imagine again looking at the pair of sneakers you liked, for instance, then asking a device to navigate to the nearest store where you could make the purchase.

“Looking further out, this technology could be used beyond everyday needs to help address societal challenges, like supporting conservationists in identifying plant species that need protection, or helping disaster relief workers quickly sort through donations in times of need,” suggested Prabhakar Raghavan, Google Search SVP, speaking on stage at Google I/O.

Unfortunately, Google didn’t offer a timeframe for when it expected to put the scene-scanning capability into the hands of users, as the feature is still “in development.”