Google announced today that it’s rolling out a new “multisearch” feature that allows users to search using text and images at the same time via Google Lens, the company’s image recognition technology. Google first teased the functionality last September at its Search On event and said it would be launching the feature in the coming months after testing and evaluation. Starting today, the new multisearch functionality is available as a beta feature in English in the United States.

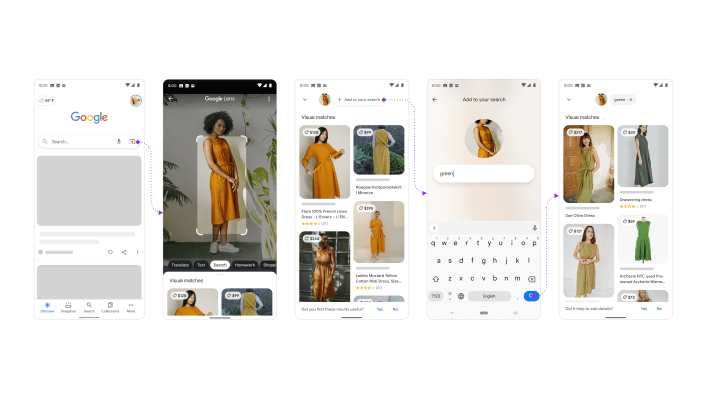

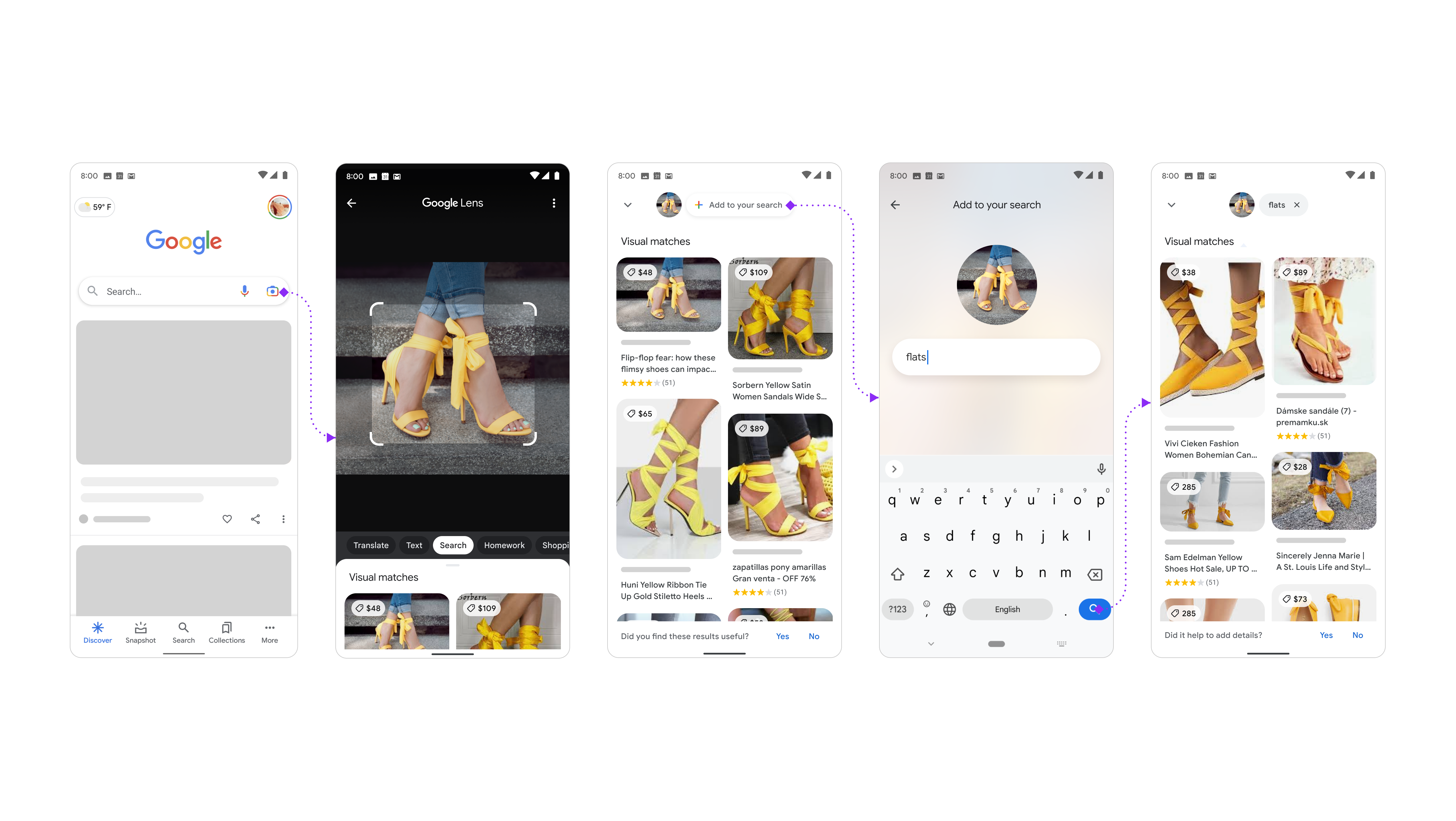

To get started, open the Google app on Android or iOS, tap the Lens camera icon, and either search one of your screenshots or take a photo. Then swipe up and tap the “+ Add to your search” button to add text. Google notes that users should have the latest version of the app to access the new functionality.

Image Credits: Google

With the new multisearch feature, you can ask a question about an object in front of you or refine your search results by color, brand or visual attributes. Google told TechCrunch that the new feature currently has the best results for shopping searches, with more use cases to come in the future. With this initial beta launch, you can also do things beyond shopping, but it won’t be perfect for every search.

In practice, this is how the new feature could work. Say you found a dress that you like but aren’t a fan of the color it’s available in. You could pull up a photo of the dress and then add the text “green” to your search query to find it in your desired color. In another example, say you’re looking for new furniture but want to make sure it complements your current furniture. You could take a photo of your dining set and add the text “coffee table” to your search query to find a matching table. Or, say you got a new plant and aren’t sure how to properly take care of it. You could take a picture of the plant and add the text “care instructions” to your search to learn more about it.

The new functionality could be especially useful for the type of queries that Google currently has trouble with: those with a visual component that is hard to describe using words alone. By combining the image and the words into one query, Google may have a better shot at delivering relevant search results.

“At Google, we’re always dreaming up new ways to help you uncover the information you’re looking for — no matter how tricky it might be to express what you need,” Google said in a blog post about the announcement. “That’s why today, we’re introducing an entirely new way to search: using text and images at the same time. With multisearch on Lens, you can go beyond the search box and ask questions about what you see.”

Google says the new functionality is made possible by its latest advancements in artificial intelligence. The company is also exploring ways in which multisearch could be enhanced by MUM, its latest AI model in Search. Multitask Unified Model, or MUM, can simultaneously understand information across a wide range of formats, including text, images and videos, and draw insights and connections between topics, concepts and ideas.

Today’s announcement comes a week after Google announced it’s rolling out improvements to its AI model to make Google Search a safer experience and one that’s better at handling sensitive queries, including those around topics like suicide, sexual assault, substance abuse and domestic violence. It’s also using other AI technologies to improve its ability to remove unwanted explicit or suggestive content from Search results when people aren’t specifically seeking it out.