Security — as in “hey you, you can’t go in there” — quickly becomes a complex, arguably impossible job once you get past a few buildings and cameras. Who can watch everywhere at once, and send someone in time to prevent a problem? Ambient.ai isn’t the first to claim that AI can, but they may be the first to actually pull it off at scale — and they’ve raised $52 million to keep growing.

The problem with today’s processes is the sort of thing anybody can point out. If you’ve got a modern company or school campus with dozens or hundreds of cameras, they produce so much footage and data that even a dedicated security team will have trouble keeping up with it. As a consequence, not only are they likely to miss an important event as it happens, but they’re also up to their ears in false alarms and noise.

“Victims are always looking at the cameras, expecting that someone is coming to help them… but it just isn’t the case,” CEO and co-founder of Ambient.ai, Shikhar Shrestha, told TechCrunch. “Best case is you wait for the incident to happen, you go and pull the video, and you work from there. We have the cameras, we have the sensors, we have the officers — what’s missing is the brain in the middle.”

Obviously Shrestha’s company is looking to provide the brain: a central visual processing unit for live security footage that can tell when something’s going wrong and immediately alert the right people, but without the bias that threatens such endeavors, and without facial recognition.

Others have made inroads on this particular idea before now, but so far none has seen serious adoption. The first generation of automatic image recognition, Shrestha said, was simple motion detection, little more than checking whether pixels were moving around on the screen — with no insight into whether it was a tree or a home invader. Next came the use of deep learning to do object recognition: identifying a gun in hand or a breaking window. This proved useful but limited and somewhat high maintenance, needing lots of scene- and object-specific training.

“The insight was, if you look at what humans do to understand a video, we take lots of other information: is the person sitting or standing? Are they opening a door, are they walking or running? Are they indoors or outdoors, daytime or nighttime? We bring all that together to create a kind of comprehensive understanding of the scene,” Shrestha explained. “We use computer vision intelligence to mine the footage for a whole range of events. We break down every task and call it a primitive: interactions, objects, etc., then we combine those building blocks to create a ‘signature.'”

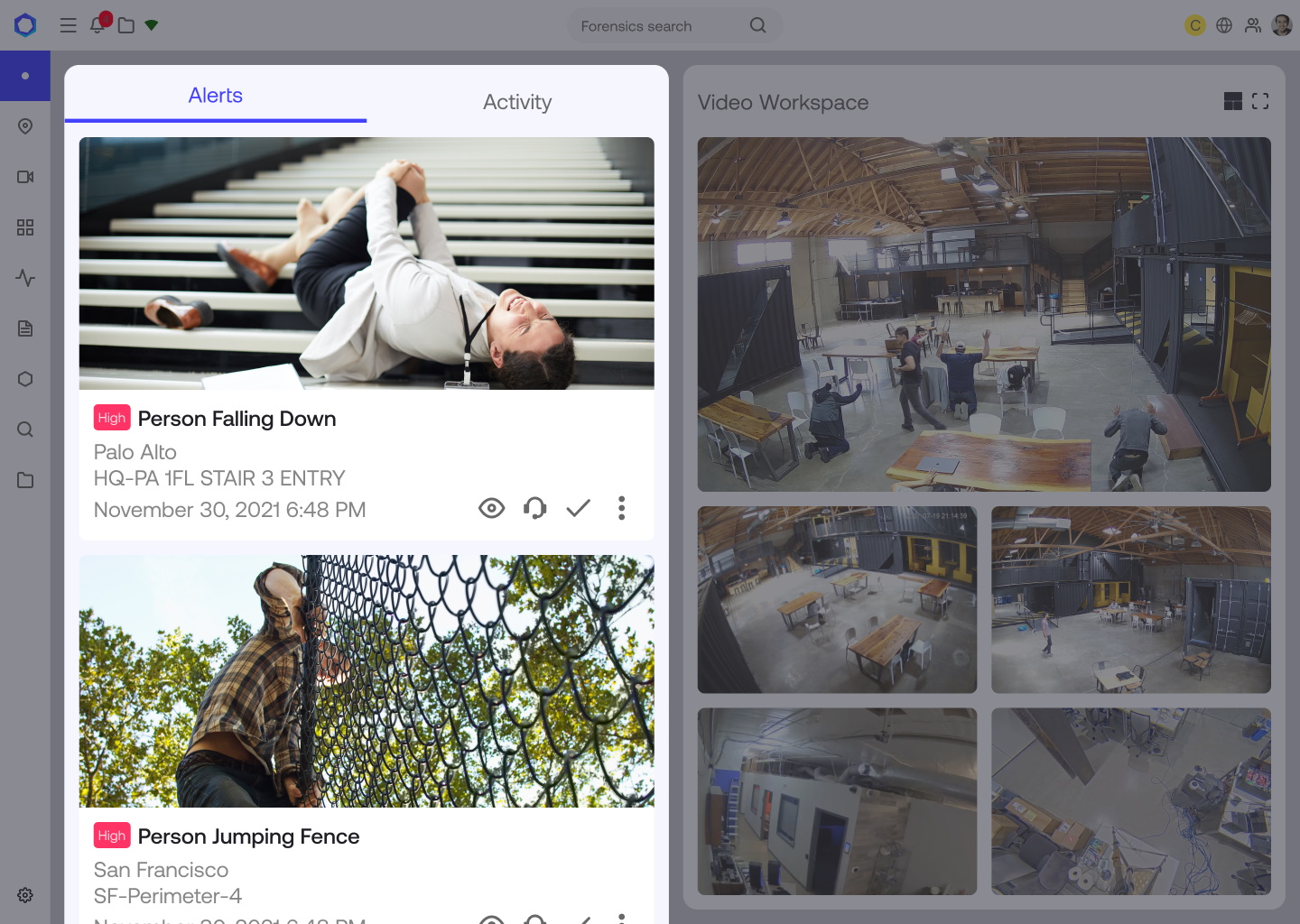

The Ambient.ai system uses elements of behavior and plugs them into each other to tell whether they’re a problem. Image Credits: Ambient.ai

A signature may be something like “a person sitting in their car for a long time at night,” or “a person standing by a security checkpoint not interacting with anyone,” or any number of things. Some have been tweaked and added by the team; others have been arrived at independently by the model, which Shrestha described as “kind of a managed semi-supervised approach.”
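
As a rough illustration of how primitives might be combined into a signature, here is a minimal Python sketch. Ambient.ai has not published its implementation, so everything here is hypothetical: the `Signature` class, the primitive functions, the observation fields and the 10-minute dwell threshold are assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Hypothetical summary of what a vision model reported for one tracked object.
Observation = Dict[str, object]
Primitive = Callable[[Observation], bool]


@dataclass
class Signature:
    """A named combination of primitives; all must hold for a match."""
    name: str
    primitives: List[Primitive]

    def matches(self, obs: Observation) -> bool:
        return all(primitive(obs) for primitive in self.primitives)


# Hypothetical primitives: each answers one narrow question about the scene.
def is_person(obs: Observation) -> bool:
    return obs.get("object") == "person"


def in_car(obs: Observation) -> bool:
    return obs.get("location") == "car"


def is_night(obs: Observation) -> bool:
    return obs.get("time_of_day") == "night"


def long_dwell(obs: Observation) -> bool:
    # Assumed threshold: 10 minutes counts as "a long time".
    return int(obs.get("dwell_seconds", 0)) >= 600


sitting_in_car_at_night = Signature(
    name="person sitting in their car for a long time at night",
    primitives=[is_person, in_car, is_night, long_dwell],
)

night_obs = {"object": "person", "location": "car",
             "time_of_day": "night", "dwell_seconds": 900}
day_obs = dict(night_obs, time_of_day="day")

print(sitting_in_car_at_night.matches(night_obs))
print(sitting_in_car_at_night.matches(day_obs))
```

Because each primitive is a separate function, a site could in principle swap one out or regroup them without retraining anything, which is the flexibility the company describes.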

The benefit of using an AI to monitor a hundred video streams at once is plain even if you were to assume that said AI is only, say, 80% as good as a human at spotting something bad happening. With no such shortcomings as distraction, fatigue or only having two eyes, an AI can apply that level of success without limits on time or number of feeds, meaning the overall chance of catching something is actually quite high.

But the same might have been said of a proto-AI system from a few years ago that was only looking for guns. What Ambient.ai is aiming for is something more comprehensive.

“We built the platform around the idea of privacy by design,” Shrestha said. With AI-powered security, “people just assume facial recognition is part of it, but with our approach you have this large number of signature events, and you can have a risk indicator without having to do facial recognition. You don’t just have one image and one model that says what’s happening — we have all these different blocks that allow you to get more descriptive in the system.”

Essentially this is done by keeping each individual recognized activity bias-free to begin with. Take whether someone is sitting or standing, or how long they’ve been waiting outside a door: if each of these behaviors can be audited and found to be detected equally well across demographics and groups, then, the reasoning goes, the sum of such inferences should likewise be free of bias. In this way the system is meant to structurally reduce bias.

It must, however, be said that bias is insidious and complex, and our ability to recognize it and mitigate it lags behind the state of the art. Nevertheless it seems intuitively true that, as Shrestha put it, “if you don’t have an inference category for something that can be biased, there’s no way for bias to come in that way.” Let’s hope so!

Ambient.ai co-founders Vikesh Khanna (CTO, left) and Shikhar Shrestha (CEO). Image Credits: Ambient.ai

We’ve seen a few startups come and go along these lines, so it’s important for these ideas to be demonstrated on the record. And despite keeping relatively quiet about itself, Ambient.ai has a number of active customers that have helped prove out its product hypothesis. Of course the last couple of years haven’t exactly been business as usual… but it’s hard to imagine “five of the largest U.S. tech companies by market cap” would be customers (and they are) if it didn’t work.

One test at an unnamed “Fortune 500 Technology Company” was looking to reduce “tailgating,” where someone enters a secured area right behind another person authorized to do so. If you think no one does this, well, they identified 2,000 incidents the first week. But by sending GIFs of the events in near real time to security, who presumably went and wagged their fingers at the offenders, that number was reduced to 200 a week. Now it’s 10 a week, probably by people like me.
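
To put those tailgating figures in proportion, here is a small Python sketch that computes the percentage drop between the weekly counts quoted above. The helper name `percent_reduction` is just for illustration; the only inputs are the three numbers from the test.

```python
def percent_reduction(before: int, after: int) -> float:
    """Percentage drop from `before` to `after`."""
    return (before - after) * 100 / before


# Weekly tailgating incidents quoted in the test: first week, later, now.
first_week, later, now = 2000, 200, 10

print(percent_reduction(first_week, later))  # drop after alerts began
print(percent_reduction(first_week, now))    # drop from first week to now
```

By this arithmetic the first step is a 90% reduction, and the current figure is 99.5% below the first week.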

In another test case Ambient.ai documented, a school’s security cameras caught someone scaling the fence after hours. The security head was sent the footage immediately and called the cops. Turns out the guy had priors. The point I take here is not that we need to lock down our school campuses and that this will help do that, but something else mentioned in the document: the system can combine the knowledge that “someone is climbing a fence” with other context, like “this happens a lot shortly before 8:45,” so kids taking a shortcut don’t get the cops called on them. The AI could also distinguish between climbing, falling and loitering, which might or might not matter depending on the circumstances.
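
The fence example amounts to combining a detected action with context about when it happens. A minimal Python sketch of that idea follows; it is not Ambient.ai's logic. The function name `should_alert`, the action label `"climbing_fence"` and the 30-minute window before 8:45 are all assumptions chosen for illustration.

```python
from datetime import time


def should_alert(action: str, at: time,
                 school_start: time = time(8, 45),
                 window_minutes: int = 30) -> bool:
    """Alert on fence climbing unless it falls in the pre-school rush.

    This sketch only handles fence climbing; any other action
    returns False here.
    """
    if action != "climbing_fence":
        return False
    minutes = at.hour * 60 + at.minute
    start = school_start.hour * 60 + school_start.minute
    # Assumed context rule: climbs shortly before school starts are
    # treated as kids taking a shortcut, not as an intrusion.
    in_morning_rush = start - window_minutes <= minutes <= start
    return not in_morning_rush


print(should_alert("climbing_fence", time(8, 30)))  # shortly before 8:45
print(should_alert("climbing_fence", time(23, 0)))  # after hours
```

A real deployment would presumably learn or configure such windows per site rather than hard-code them.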

Ambient.ai claims that part of the system’s flexibility is in all these “primitives” being easy to rearrange depending on the needs of the site — maybe you truly don’t care if someone climbs a fence, unless they fall — as well as being able to learn new situations: “Ah, so this is what it looks like when someone is cutting a fence.” The team currently has about 100 suspicious behavior “signatures” and hopes to double that over the next year.

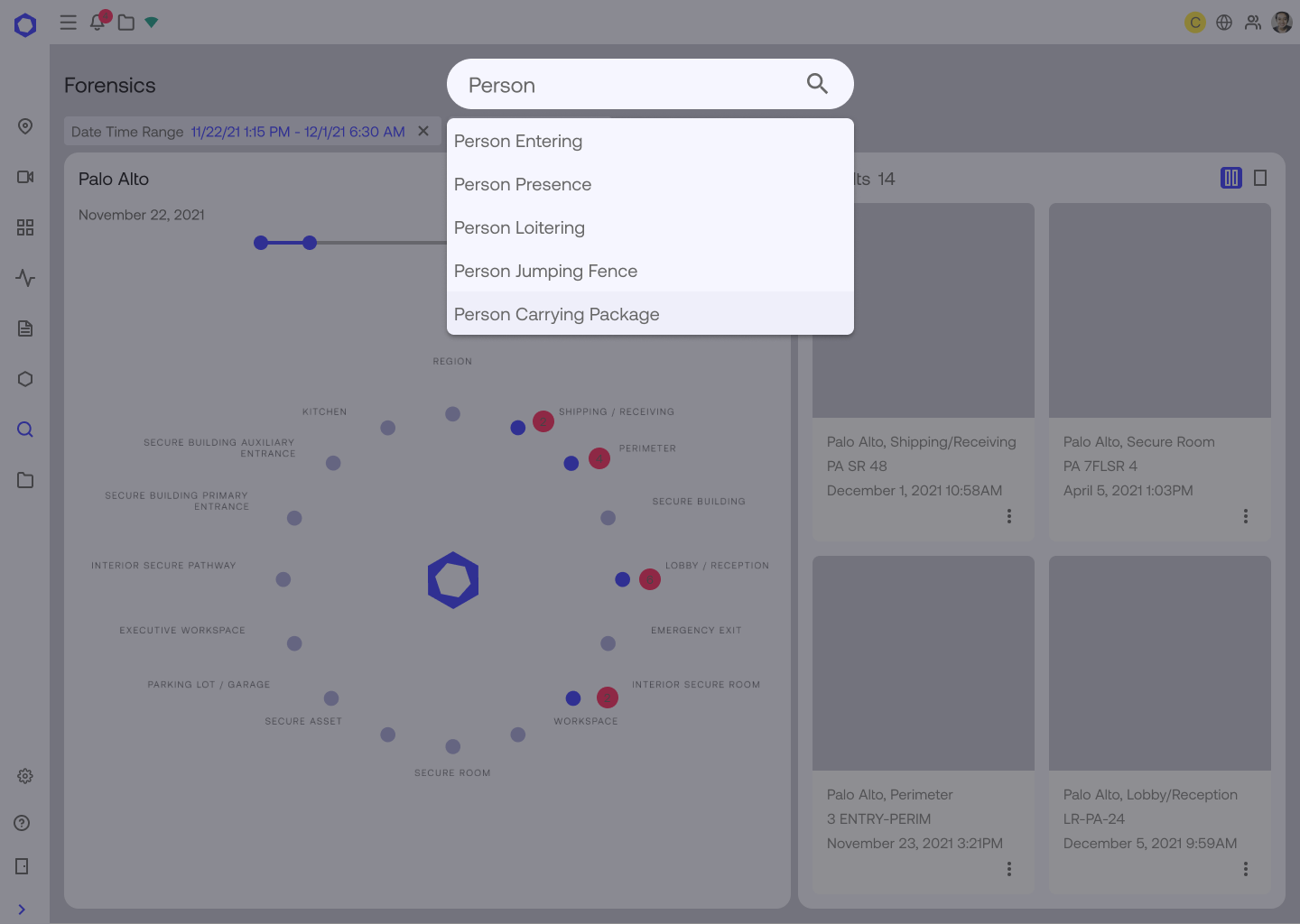

Making the existing security personnel more effective by giving them more control over what blows up their phone or radio saves time and improves outcomes (Ambient.ai says it reduces the number of common alarms in general by 85-90%). And AI-powered categorization of footage helps with records and archives as well. Saying “download all footage of people climbing a fence at night” is a lot easier than scrubbing through 5,000 hours manually.

The $52 million round was led by a16z, but there’s also a bit of a who’s-who in the individual investor pile: Ron Conway, Ali Rowghani from Y Combinator, Okta co-founder Frederic Kerrest, CrowdStrike CEO George Kurtz, Microsoft CVP Charles Dietrich, and several others who know of what they invest in.

“It’s a unique time; security practitioners are expected to do a lot more. The basic proposal of not having to have someone watching all these feeds is universal,” said Shrestha. “We spend so much money on security, $120 billion… it’s crazy that the outcomes aren’t there — we don’t prevent incidents. It feels like all roads are leading to convergence. We want to be a platform that an organization can adopt and future-proof their security.”