I see far more research articles than I could possibly write up. This column collects the most interesting of those papers and advances, along with notes on why they may prove important in the world of tech and startups. This week: supercomputers take on COVID-19, beetle backpacks, artificial spiderwebs, the “overwhelming whiteness” of AI and more.

First off, if (like me) you missed this amazing experiment in which scientists attached tiny cameras to the backs of beetles, I don’t think I have to explain how cool it is. But you may wonder… why do it? Prolific UW researcher Shyam Gollakota and several graduate students were interested in replicating some aspects of insect vision, specifically how efficiently insects process visual information and direct their attention.

The camera backpack has a narrow field of view and uses a simple mechanism to direct its focus rather than processing a wide-field image at all times, saving energy and better imitating how real animals see. “Vision is so important for communication and for navigation, but it’s extremely challenging to do it at such a small scale. As a result, prior to our work, wireless vision has not been possible for small robots or insects,” said Gollakota. And don’t worry: the beetles lived long, happy lives after their backpack-wearing days.

The health and medical community is always making interesting strides in technology, but it’s often pretty niche stuff. These two items from recent weeks are a bit more high-profile.

One is a new study being conducted by UCLA in concert with Apple, whose devices, the smartwatch especially, have already supplied a wealth of data to research on conditions such as arrhythmia. In this case, doctors are looking at depression and anxiety, which are considerably more difficult to quantify and detect. But by using Apple Watch, iPhone and sleep monitor measurements of activity levels, sleep patterns and so on, the researchers can amass a large body of standardized data.

“Current approaches to treating depression rely almost entirely on the subjective recollections of depression sufferers. This is an important step for obtaining objective and precise measurements that guide both diagnosis and treatment,” said UCLA’s Dr. Nelson Freimer.

The second item in health tech is COVID-19-related, though I usually try to defer to more qualified outlets on such important matters. In this situation it’s more about the big-data approach. Oak Ridge National Laboratory scientists mined genetic data from several thousand samples, occupying Summit, currently the second-most powerful supercomputer in the world, for a whole week.

It didn’t break the case wide open, but that’s kind of the point. Instead, it generated some vague but actionable leads, which are solid gold in the field right now. Specifically, it found that genes related to producing a peptide called bradykinin were reliably overexpressed, while genes that inhibit or break it down were underexpressed. It’s a starting point that could suggest both harm mechanisms and therapeutic approaches. Supercomputing will never output a panacea for something as complex as this, but it does a pretty good job of boiling the data ocean.

Image recognition engines frequently feature in this column because there are tons of interesting niche applications, even if most of them aren’t going to spin out into startups or major products. But together they amount to a sea change in methods used across hundreds of disciplines, and frequent refinements eventually raise an approach from experiment to real tool. Today’s no exception, with this study from Old Dominion University showing how refugee camps, and the numbers of people in them, can be accurately counted by an AI examining aerial imagery.

Previous image recognition models for monitoring refugee situations (in this case, the one in Syria) produced good ballpark estimates, but could be off by anywhere from 10 to a few hundred percent. Not exactly reliable! Jiang Li’s work significantly improves accuracy and precision, meaning its estimates land closer to the ground-truth numbers and do so more consistently. It’s getting to the point where these measurements could actually be trusted, and the region-agnostic tools could be deployed on short notice to monitor refugee situations in near real time, a huge boon for governments and NGOs looking to help.

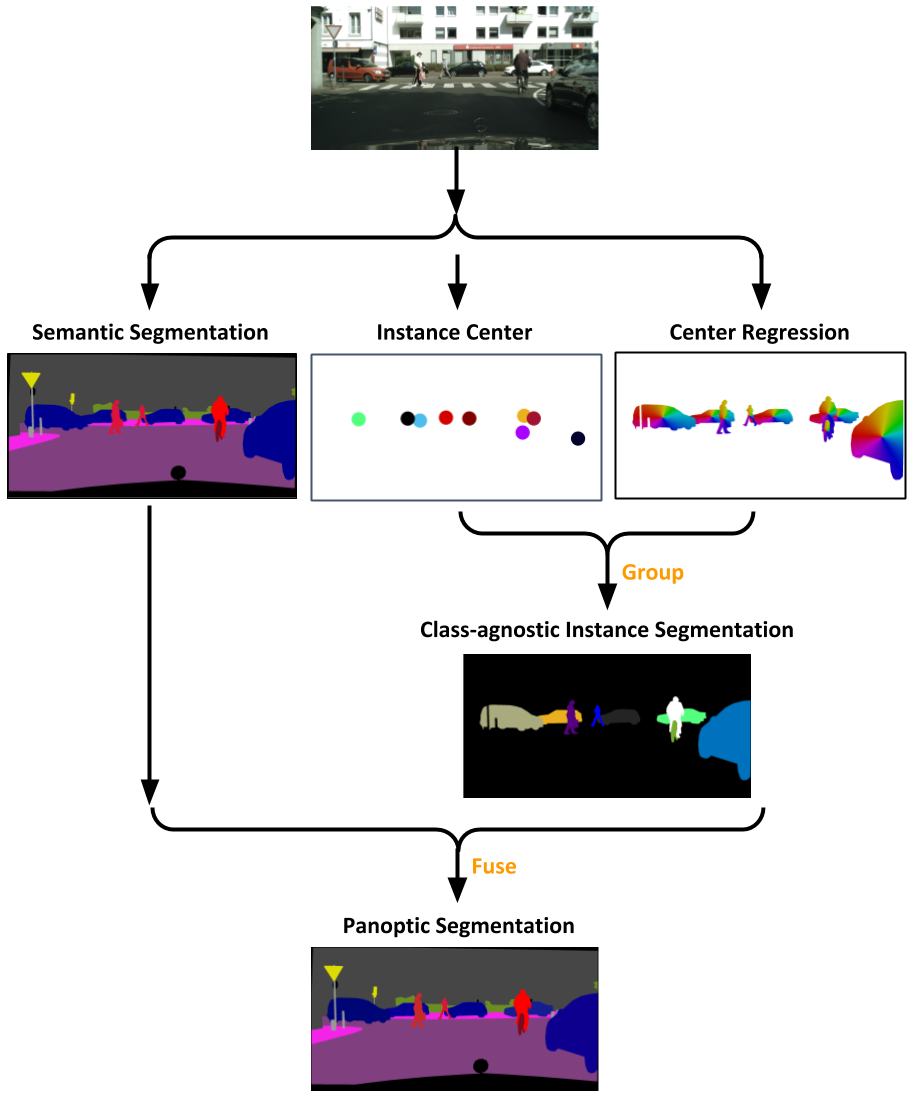

Google frequently puts out work advancing the ball on image recognition, and a recent study aims to fix an interesting shortcoming of object identification I wasn’t aware of. It seems many systems, in labeling parts of an image “tree,” “car,” “bike” and so on, don’t always label each car or bike individually. It’s more like saying they’re a color than an object, so it takes extra cycles to drill down and say “car 1,” “car 2,” etc. Google’s work bakes this object separation into the recognition process in an interesting way, simplifying the pipeline. It’s just one of those fixes that you might not even know needed to be made.
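For readers who want the distinction made concrete: labeling every pixel with a class (“car,” “road”) is usually called semantic segmentation, while giving each object its own ID is instance segmentation. As a toy illustration only, here is the kind of separate, after-the-fact step the paragraph alludes to, sketched in Python as connected-component labeling over a tiny hypothetical label grid. This is not Google’s method, which folds the separation into the model itself; the function name `label_instances` and the example grid are made up for illustration.

```python
from collections import deque


def label_instances(semantic_mask, target_class):
    """Toy post-processing step (hypothetical, not Google's method):
    give each 4-connected blob of `target_class` its own instance ID.

    Returns (instances, count): a grid the same shape as the mask, with 0
    for pixels of other classes and 1, 2, ... for separate instances.
    """
    rows, cols = len(semantic_mask), len(semantic_mask[0])
    instances = [[0] * cols for _ in range(rows)]
    next_id = 0
    for r in range(rows):
        for c in range(cols):
            # Skip pixels of other classes and pixels already assigned an ID.
            if semantic_mask[r][c] != target_class or instances[r][c]:
                continue
            next_id += 1
            instances[r][c] = next_id
            queue = deque([(r, c)])
            # Breadth-first flood fill over up/down/left/right neighbors.
            while queue:
                y, x = queue.popleft()
                for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    ny, nx = y + dy, x + dx
                    if (0 <= ny < rows and 0 <= nx < cols
                            and semantic_mask[ny][nx] == target_class
                            and not instances[ny][nx]):
                        instances[ny][nx] = next_id
                        queue.append((ny, nx))
    return instances, next_id


# A tiny "semantic" output: every pixel says what it is, not which one it is.
mask = [
    ["car", "car", "road", "car"],
    ["car", "road", "road", "car"],
    ["road", "road", "bike", "road"],
]
cars, n_cars = label_instances(mask, "car")
print(n_cars)  # → 2 ("car 1" on the left, "car 2" on the right)
```

One reason a naive pass like this falls short in practice: two cars that touch in the image would merge into a single blob, which is part of why building object separation into the recognition model is attractive.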

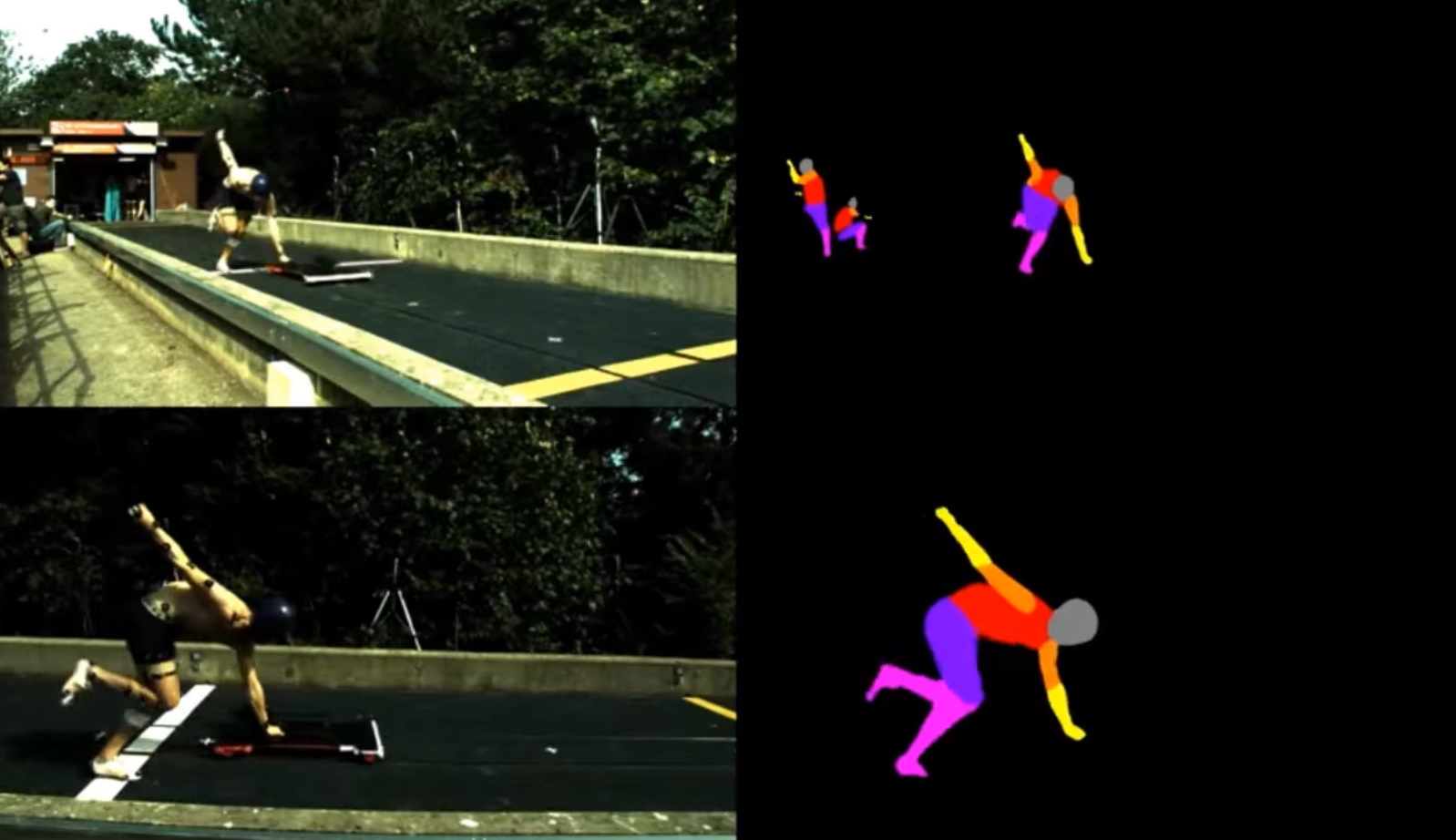

Another rather obscure advance comes from the University of Bath, where researchers have developed a new method of tracking the motions of skeleton racers. Skeleton is a sport that’s a bit like a self-propelled luge, and as in other icy, high-speed sports, even hundredths of a second count, so optimizing one’s start and acceleration is crucial. It’s already high-tech in that skeleton racers are often imaged while wearing markers, as in a motion capture studio, but the Bath researchers have come up with a way to do it just as well without the markers, and out in the wild.

A skeleton racer is captured in slow motion and his movements analyzed by AI. Image Credits: University of Bath

“The non-invasive nature of this approach not only means that we can capture push-start information without interfering with the athlete’s training session, but we can do so in a way that conforms with the current need for social distancing,” said Bath’s Laurie Needham. It’s a reminder that this type of cutting-edge athletic analysis isn’t limited to, say, basketball or football and their multimillion-dollar team budgets.

AI’s dark side, however, was highlighted in a pair of studies emphasizing dangers in both its creation and applications.

Or perhaps I should have said light side: A new Cambridge study points out the “overwhelming whiteness” of AI. But how can an AI be white? It can if the data used to form it carries inherent bias from a prevalence of white faces, white European ideas and (because of its mostly white creators) white priorities. The full paper is an interesting and substantial read.

“Given that society has, for centuries, promoted the association of intelligence with White Europeans, it is to be expected that when this culture is asked to imagine an intelligent machine it imagines a White machine,” said lead author Kanta Dihal. For instance, virtual assistants default to a white, middle-class lexicon and accent, while Black dialects are deemed too risky. Bias against people of color in deployed AI models is well documented. Dihal and others are leading an effort to “decolonize AI.”

An expert workshop conducted by the Dawes Centre for Future Crime (!) at University College London identified the most worrying potential crimes made possible by AI tools. But it’s not stuff like terminators. Instead, the experts settled on crimes that are likely to happen or are already happening, and that also cause significant harm and can be easily scaled.

The top six issues selected were:

- Deepfakes – a convincing interactive digital impersonation is a Swiss Army knife for hundreds of cons and crimes, and has the side effect of potentially discrediting real digital evidence as well.

- Driverless vehicles as weapons – self-explanatory. One more reason why self-driving cars must destroy themselves.

- Tailored phishing – imagine the versatility of GPT-3-type language engines applied to phishing attacks. Brrr!

- Disrupting AI-controlled systems – relying on AI to manage the power grid, distribution logistics or traffic is an invitation for serious mischief.

- Large-scale blackmail – AI could collect or fake evidence and perform tailored extortion schemes.

- AI-authored fake news – benefits are dubious, but harm is high and it’s practically impossible to combat.

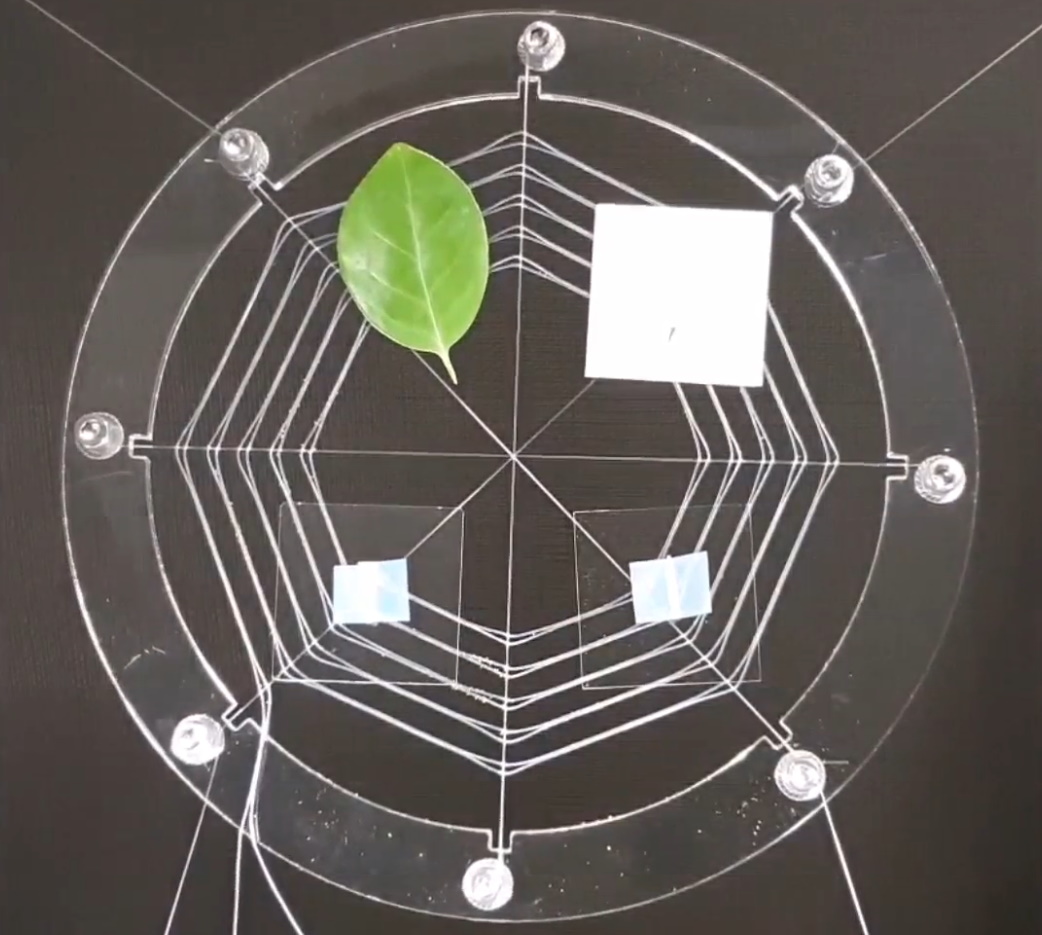

Last is a bit of fun biomimicry from Seoul National University. Admiring the versatility and minimalism embodied in spiders’ webs, the team set out to make a sort of electronic web to which even quite heavy objects stick when a certain current runs through it, and which can be vibrated (as spiders do) to shake loose dust and other detritus.

An artificial spider web to which objects attach when a current is run through it. Image Credits: Seoul National University

The technique may have applications in robotic grippers and other situations where a variably sticky material can be put to use. What’s especially interesting to me is that the researchers, realizing at the outset that attempting to replicate the silk production and deployment methods of actual spiders was a lost cause, decided to replicate the function instead of the material, in a more electronics-native way. In the field of biomimetics, being defeated by nature is a beginning, not an ending.