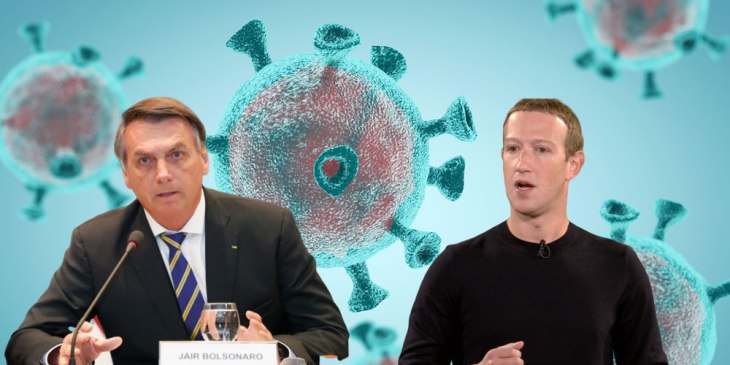

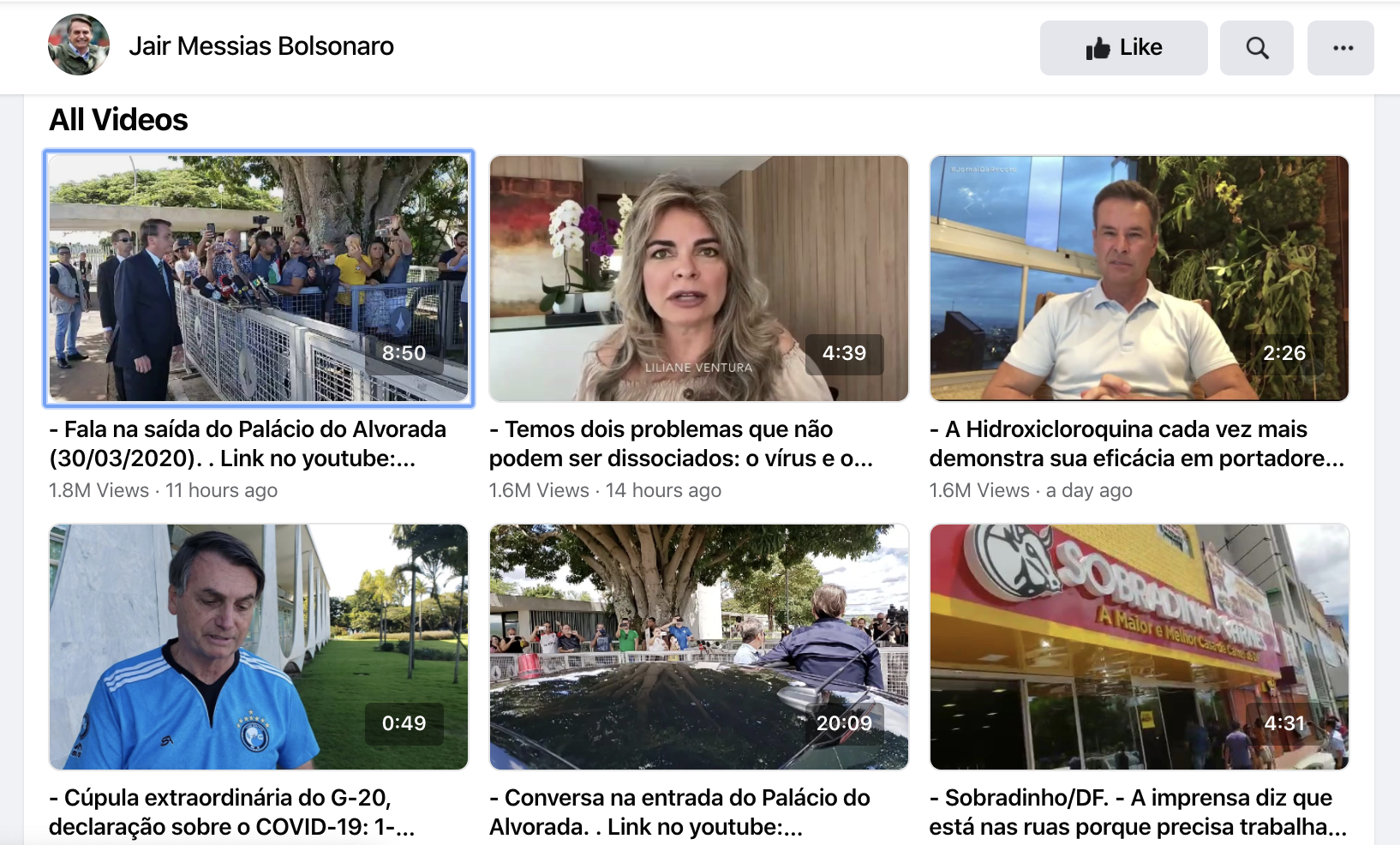

Facebook has deviated from its policy of not fact-checking politicians in order to prevent the spread of potentially harmful coronavirus misinformation from Brazilian President Jair Bolsonaro. Facebook took the decisive step of removing a video Bolsonaro shared on Sunday in which he claimed that “hydroxychloroquine is working in all places.” That’s despite the drug still undergoing testing to determine whether it is effective against COVID-19, something researchers and health authorities have not confirmed.

“We remove content on Facebook and Instagram that violates our Community Standards, which do not allow misinformation that could lead to physical harm,” a Facebook spokesperson told TechCrunch. Facebook specifically prohibits false claims regarding cures, treatments, the availability of essential services and the location or intensity of contagion outbreaks.

BBC News Brazil first reported the takedown today in Portuguese. In the removed video, Bolsonaro was speaking to a street vendor, and the president claimed “They want to work,” in contrast to the World Health Organization’s recommendation that people practice social distancing. He followed up by saying that “medicine there, hydroxychloroquine, is working in all places.”

If people wrongly believe there’s a widely effective treatment for COVID-19, they may be more reckless about going out in public, attending work or refusing to stay in isolation. That could cause the virus to spread more quickly, defeat efforts to flatten the curve, and overrun health care systems.

This is why Twitter removed two of Bolsonaro’s tweets on Sunday, as well as one from Rudy Giuliani, in order to stop the spread of misinformation. But to date, Facebook has generally avoided acting as an arbiter of truth regarding the veracity of claims by politicians. It notoriously refuses to send blatant misinformation in political ads, including those from Donald Trump, to fact-checkers.

Last week, though, Facebook laid out that COVID-19 misinformation “that could contribute to imminent physical harm” would be removed directly and immediately, as it has done for other outbreaks since 2018, while less urgent conspiracy theories that don’t lead straight to physical harm are sent to fact-checkers, who can then have those posts demoted so they reach fewer people on Facebook.

Now the question is whether Facebook will be willing to apply this enforcement to Trump, who’s been criticized for spreading misinformation about the severity of the outbreak, potential treatments and the risk of sending people back to work. Facebook is known to fear backlash from conservative politicians and citizens who’ve developed a false narrative that it discriminates against or censors their posts.