I see far more research articles than I could possibly write up. This column collects the most interesting of those papers and advances, along with notes on why they may prove important in the world of tech and startups.

This week: crowdsourcing in space, vision on a chip, robots underground and under the skin, and other developments.

The eye is the brain

Computer vision is a challenging problem, but the perennial insult added to this difficulty is the fact that humans process visual information as well as we do. Part of that is because in computers, the “eye” — a photosensitive sensor — merely collects information and relays it to a “brain” or processing unit. In the human visual system, the eye itself does rudimentary processing before images are even sent to the brain, and when they do arrive, the task of breaking them down is split apart and parallelized in an amazingly effective manner.

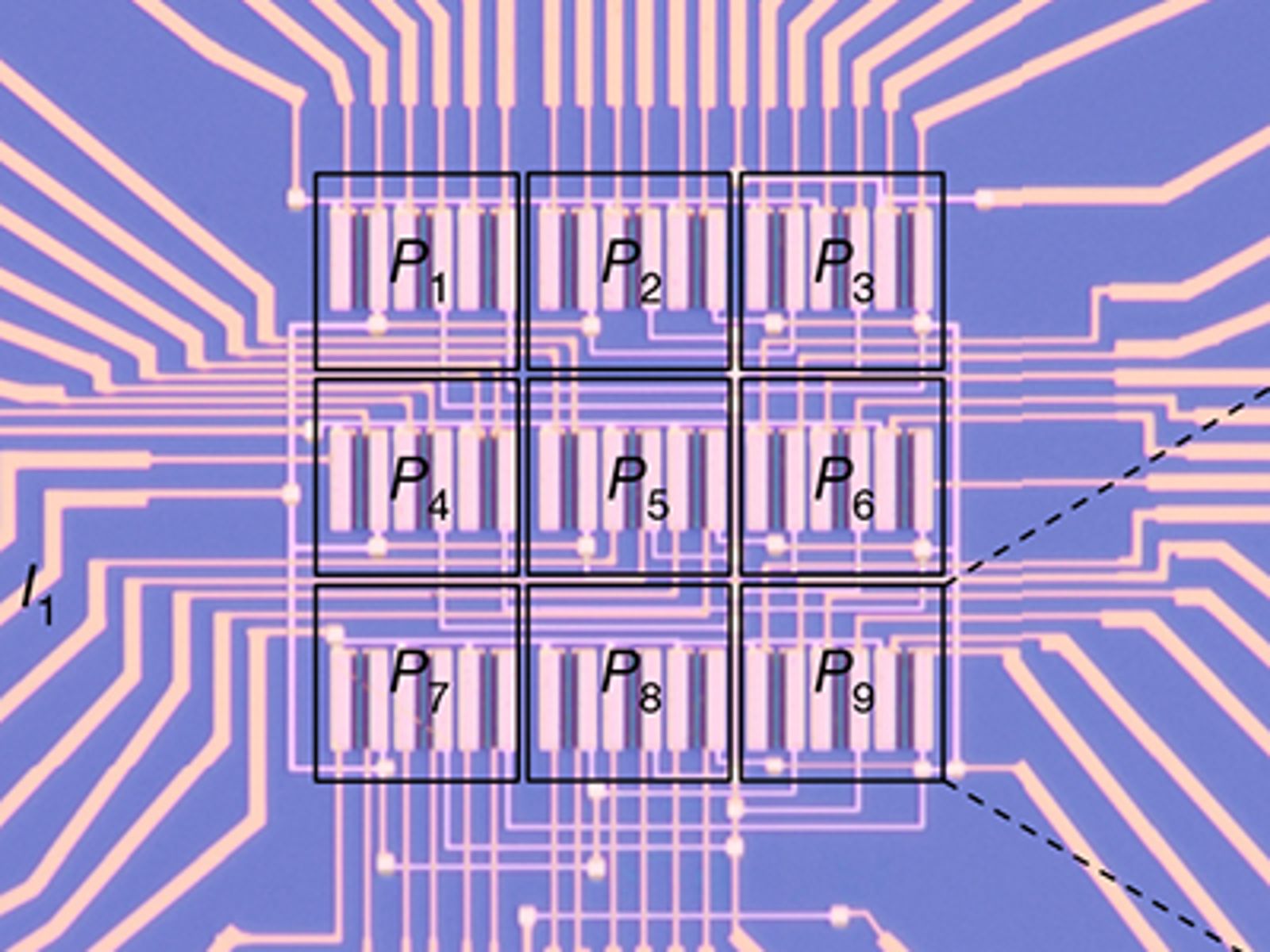

The chip, divided into several sub-areas, which specialize in detecting different shapes

Researchers at the Vienna University of Technology (TU Wien) integrate neural network logic directly into the sensor, grouping pixels and subpixels into tiny pattern recognition engines by individually tuning their sensitivity and carefully analyzing their output. In one demonstration described in Nature, the sensor was set up so that images of simplified letters falling on it would be recognized in nanoseconds because of their distinctive voltage response. That’s way, way faster than sending the image off to a distant chip for analysis.
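To make the idea concrete, here is a minimal sketch of how tuned pixel sensitivities can act as a pattern recognizer. It is not the TU Wien design: the 3x3 letter shapes, the names `LETTERS`, `matched_weights`, `sensor_outputs` and `recognize`, and the simple "pattern minus its mean" weighting rule are all assumptions made for illustration. The point is only that if each pixel contributes its brightness multiplied by a per-class sensitivity, the summed output already identifies the letter, with no separate processing step.

```python
# Hypothetical 3x3 "letters" (1 = lit pixel, 0 = dark pixel).
# These shapes are illustrative assumptions, not the patterns used in the paper.
LETTERS = {
    "n": [1, 1, 1,
          1, 0, 1,
          1, 0, 1],
    "v": [1, 0, 1,
          1, 0, 1,
          0, 1, 0],
    "z": [1, 1, 1,
          0, 1, 0,
          1, 1, 1],
}


def matched_weights(pattern):
    """Assumed weighting rule: each pixel's sensitivity is the pattern minus its mean.

    Lit pixels of the target letter get a positive sensitivity and dark
    pixels a negative one, so light in the wrong place lowers the output.
    """
    mean = sum(pattern) / len(pattern)
    return [p - mean for p in pattern]


# One set of per-pixel sensitivities per letter, standing in for the subpixels.
WEIGHTS = {name: matched_weights(p) for name, p in LETTERS.items()}


def sensor_outputs(image):
    """Sum sensitivity * brightness over all pixels, once per letter."""
    return {
        name: sum(w * px for w, px in zip(weights, image))
        for name, weights in WEIGHTS.items()
    }


def recognize(image):
    """Return the letter whose summed output is largest."""
    outputs = sensor_outputs(image)
    return max(outputs, key=outputs.get)


if __name__ == "__main__":
    noisy_n = list(LETTERS["n"])
    noisy_n[4] = 1  # one stray lit pixel in the middle
    print(recognize(LETTERS["v"]), recognize(noisy_n))
```

In a physical sensor the multiply-and-sum would happen in the analog signal itself rather than in a loop, which is where the speed would come from.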

Much like bits of our visual system are hard-wired to detect certain angles, or faces, or quickly growing shapes (duck!), this type of specialized sensor could be set up to perform ultra-fast recognition of arbitrary patterns. The future of technology is code, of course, and this is just one more way to add code to the capture process. Sensor manufacturers are seeing this research and slapping their foreheads right now.

The brain is the eye

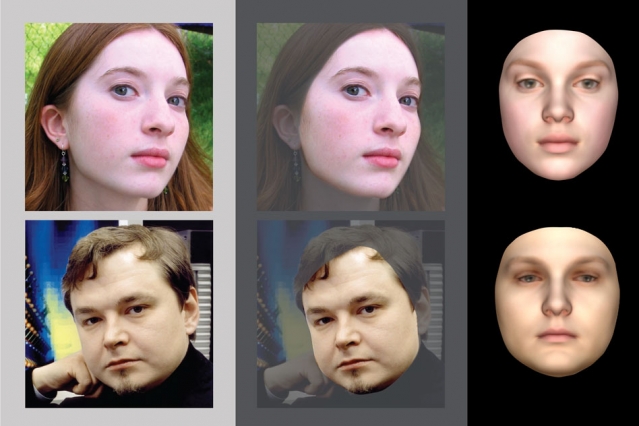

In a strange reversal of the above research, MIT researchers leverage century-old theories of dream structures in the brain to produce a novel facial recognition method. Ever wonder where the faces, locations and other images in your dreams come from? They are drawn from memory in some ways, perhaps, but generated in mysterious fashion by a process in the brain called “inverse graphics,” an idea first theorized by Hermann von Helmholtz.

The researchers thought that the brain’s ability to synthesize and project entire scenes may be related to how we manage to internalize and perceive a real scene so quickly. So they built a machine-learning system that recognizes faces in a similarly reverse fashion — starting with a simple 2D image, distorting that with imaginary textures and curves, then matching the resulting 3D image to the real face in a given scene.

It’s nowhere near as capable as traditional facial recognition algorithms, but new approaches tend to expose the weaknesses of the older ones and spur innovation, if only by shedding light on the problem from a different angle.

Crowdsourcing light pollution from comsats

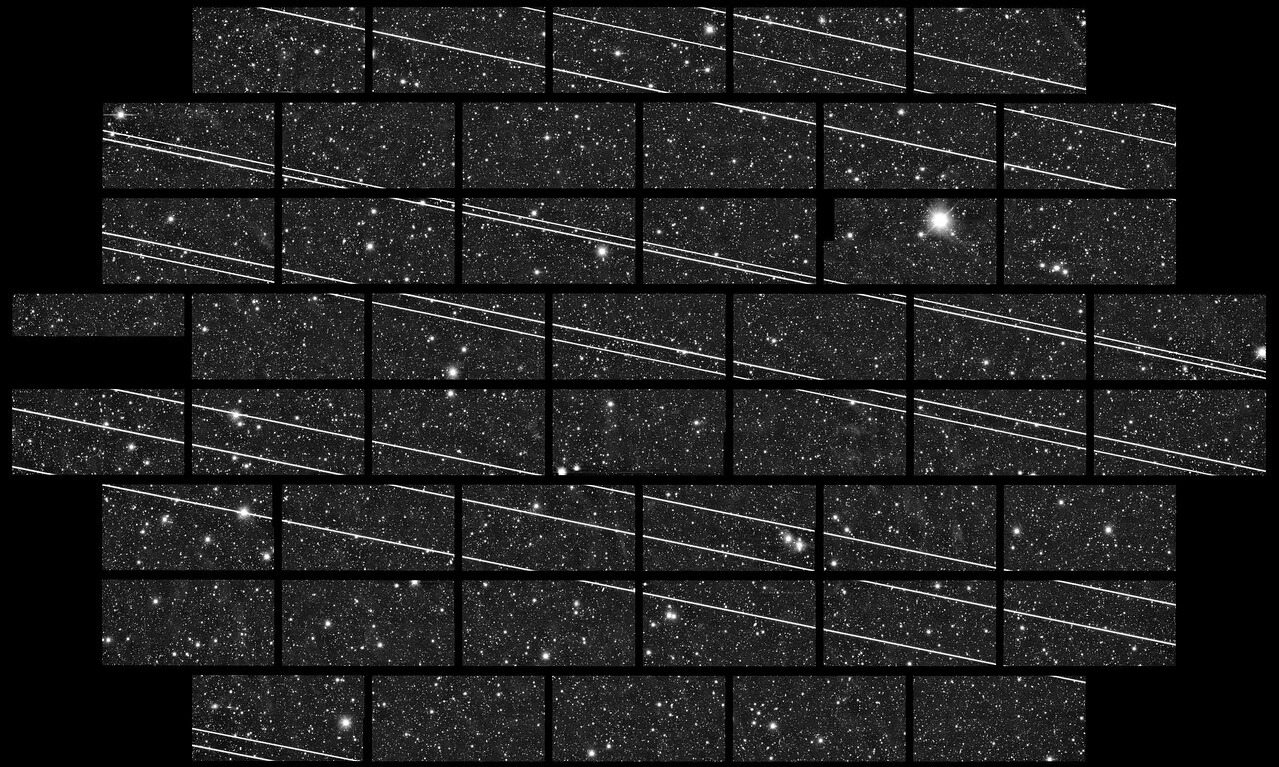

Starlink satellites streak through a telescope’s observations

The impending proliferation of communications satellites in low Earth orbit is likely to produce light pollution of a new and possibly very annoying kind — especially to astronomers and others who tend to photograph the night sky. Satellite Streak Watcher is a new crowdsourcing effort to collect as much data as possible about the visibility of satellites like Starlink’s, which may soon number in the thousands.

Participants are asked to set up their phone for basic astrophotography and point it at the sky (using a tripod, of course) during moments when a companion app tells them satellites will be overhead. They can then submit the resulting images — hopefully showing the telltale “streaks” setting the much brighter satellites apart from their deep black background. There’s a certain amount of disapproval implied by this endeavor, but who can blame them? And anyway, we need more data to be able to say with authority how bad the problem really is.

No more crowdsourcing for SETI@home

More than two decades ago, the Search for Extra-Terrestrial Intelligence began a truly ahead-of-its-time effort. It asked people to donate the spare processing power of their computers to sift through huge volumes of radio telescope data and look for signs of aliens.

This program predated the virtualized computing that has become standard issue today and helped people understand the power of the internet. After all that time, SETI@home will “go into hibernation” not because they’ve given up, but because there’s not much to gain by distributed processing of more data. Instead, they wrote, “we need to focus on completing the back-end analysis of the results we already have, and writing this up in a scientific journal paper.”

It’s the end of an era, but if you still want to compute for science, check out some of the projects using similar systems worldwide.

Hello, Voyager 2 — goodbye, Voyager 2

The Voyager spacecraft are two of the most extraordinary achievements in the history of technology. Launched in 1977, both are still functioning as intended and have entered interstellar space. Amazingly, they’re also still receiving fairly regular maintenance to troubleshoot problems, despite it taking more than a day to send a command and receive a reply.
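The "more than a day" figure is easy to sanity-check. The sketch below assumes Voyager 2 is roughly 18.5 billion kilometers from Earth, a figure that is not in this column and is used here only as an approximate input; the names `VOYAGER2_DISTANCE_KM` and `round_trip_hours` are likewise just illustrative.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458  # defined value of c in km/s
VOYAGER2_DISTANCE_KM = 18.5e9      # assumed approximate distance, not from the column


def round_trip_hours(distance_km):
    """Hours for a radio signal to reach the craft and for the reply to return."""
    one_way_seconds = distance_km / SPEED_OF_LIGHT_KM_S
    return 2 * one_way_seconds / 3600


if __name__ == "__main__":
    print(f"{round_trip_hours(VOYAGER2_DISTANCE_KM):.1f} hours")
```

Under that assumption the round trip comes to a bit over 34 hours, before counting any time the spacecraft needs to act on the command.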

Recently, Voyager 2 had a bit of a glitch when the craft’s autonomous fault protection routines, in the process of responding to a minor power issue, turned off several of its instruments. After a bit of troubleshooting, the team got things ship-shape again, but it did take more than a month.

Funnily enough, the repairs happened just in time for us to lose the ability to contact the spacecraft for some 11 months while the 70-meter radio antenna in Canberra is upgraded. Voyager 2 will simply use the time to take a nap. “It will be just fine,” said Voyager’s project manager, Suzanne Dodd.

Quantum highway

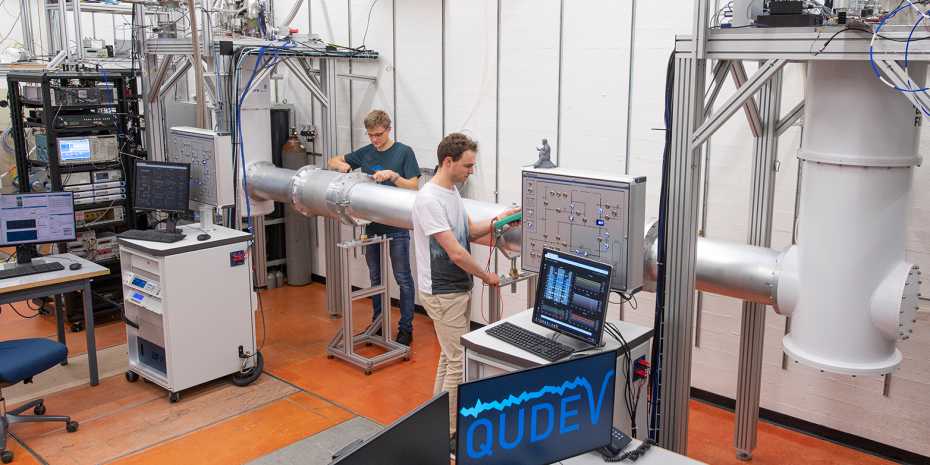

One thing I learned about quantum computing on my visit to Google’s lab for the fanfare surrounding their quantum supremacy announcement is that the whole operation is actually very mechanical. There’s a huge amount of engineering that goes into the wires, the cooling, monitoring extremely small objects without disturbing them and generally making the machine work.

ETH Zurich physicists, unfortunately shut out of the abruptly cancelled APS meeting in Denver due to coronavirus fears, have built the world’s longest “quantum link,” essentially a tube that connects two quantum elements and allows them to communicate their superposition states to one another. This is hardly an easy task: Like the qubits themselves, the connections need to be cooled to near absolute zero and painstakingly designed to prevent any form of interference or leakage. Doing it at micrometer scales is hard; doing it at multi-meter scales is very hard.

But the team made it happen, entangling two microwave photon quantum elements at a distance of five meters through a tube about as thick as a lamppost. Next up: 30 meters and the promise of a “quantum LAN.”