Adobe CTO Abhay Parasnis sees a shift happening.

A shift in how people share content and who wants to use creative tools. A shift in how users expect these tools to work — especially how much time they take to learn and how quickly they get things done.

I spoke with Parasnis in December to learn more about where Adobe’s products are going and how they’ll get there — even if it means rethinking how it all works today.

“What could we build that makes today’s Photoshop, or today’s Premiere, or today’s Illustrator look irrelevant five years from now?” he asked.

In many cases, that means a lot more artificial intelligence: AI to flatten the learning curve, allowing the user to command apps like Photoshop not only by digging through menus but by literally telling Photoshop what they want done (as in, with their voice). AI to better understand what the user is doing, helping to eliminate mundane or repetitive tasks. AI to, as Parasnis puts it, “democratize” Adobe’s products.

We’ve seen some hints of this already. Back in November, Adobe announced Photoshop Camera, a free iOS/Android app that repurposes the Photoshop engine into a lightweight but AI-heavy interface that allows for fancy filters and complex effects with minimal effort or learning required of the user. I see it as Adobe’s way of acknowledging (flexing on?) the Snapchats and Instas of the world, saying “oh, don’t worry, we can do that too.”

But the efforts to let AI do more and more of the heavy lifting won’t stop with free apps.

“We think AI has the potential to dramatically reduce the learning curve and make people productive — not at the edges, but 10x, 100x improvement in productivity,” said Parasnis.

“The last decade or two decades of creativity were limited to professionals, people who really were high-end animators, high-end designers… why isn’t it for every student or every consumer that has a story to tell? They shouldn’t be locked out of these powerful tools only because they’re either costly, or they are more complex to learn. We can democratize that by simplifying the workflow.”

“Take Photoshop — perfect example. It’s unbelievably powerful. You can literally create anything on that canvas that you can imagine,” said Parasnis.

“The problem is that to get to that level of mastery takes years, and there are only a few million people on the planet after a couple of decades who have mastered it at a level where they can extract the magic out of that tool. Now we are asking the disruptive question: can we bring that level of magic out of Photoshop without somebody spending years, or even months, or even weeks? Can they just approach this tool and, using the power of AI and some breakthroughs in user experience, be productive and produce in Photoshop?”

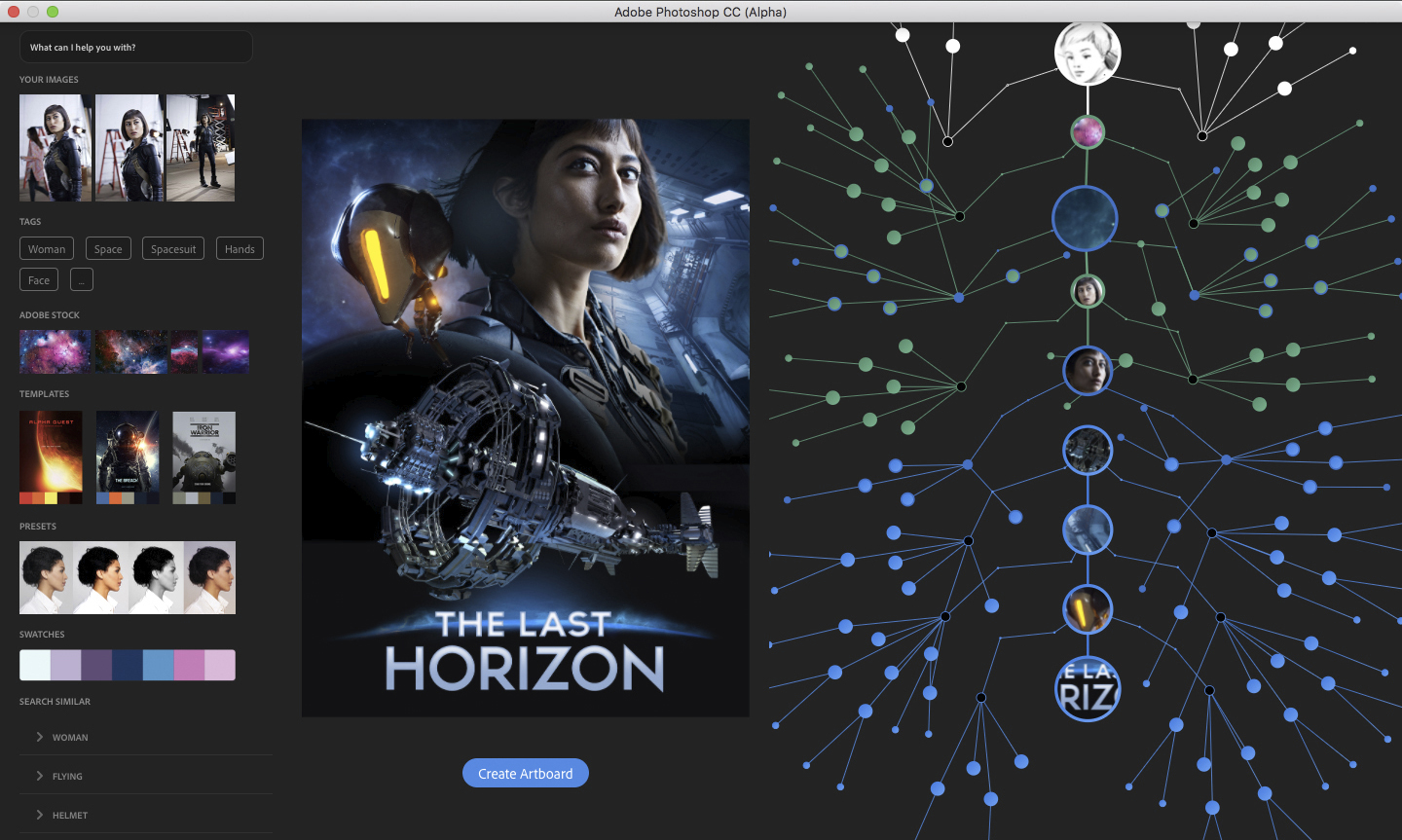

When I asked what this could actually look like, he took out a tablet and placed it on the table before clicking into an app labeled “Hey Sensei” that’s effectively a prototype build of Photoshop with an AI-driven assistant built in.

“This is actually something we’ve been working on. Infusing AI deeply into Photoshop, and, in the process, just completely changing the learning curve.”

He pulled up a sketch of a billboard concept — a hand-drawn mockup of an advertisement for Burberry. A model in a trench coat stood beneath a streetlight, backdropped by a nearly empty city street.

“[Right now] if you want to go from this level of hand sketch into a polished, Times Square billboard-worthy poster created in Photoshop, it takes weeks. Hopefully, you’ll see, I’m going to show it to you in five minutes.”

A blue dot appeared beneath the sketch, signaling that Sensei, Adobe’s AI system, was ready to get to work.

He tapped a button and spoke: “Find images based on my sketch.”

Within seconds, the app began pulling together a catalog of relevant assets based on objects it identified in the sketch: models in trench coats, streetlights, city scenes. Some assets were pulled from the hypothetical artist’s or company’s private repository; others came from Adobe’s stock catalog.

Sliders above each group of photos allowed him to drill down into each category based on criteria the AI deemed relevant: the city scene… should it be morning, noon, or night? The model… facing left, right, or staring straight at the camera?

He selected one of the city scenes: a contrasty, sepia-toned shot of a quiet London street.

He then chose a full-color image of a female model in a trench coat, dragging the stock photo onto the canvas. Shot in a studio, it had its own background that needed removing and looked bizarre against the monotone backdrop. The lighting on the model, captured beneath studio lights, didn’t match the city scene behind her at all.

He tapped the AI button again. “Mask it,” he said. The background disappeared, leaving just the model.

After tapping another button, the color composition of the model photo shifted from full color to a golden hue, better matching the tone of the city scene. Another tap, and all of the shadows and highlights on the model shifted to match the lighting behind her.

“It will analyze, in real time, the background lighting, the concept, and the color composition to match,” said Parasnis. “It literally changed the lighting effects, the shadow effects, to match where the lights are.”

“Let’s put some text on it.”

He typed out the name of the brand. Photoshop picked a handful of fonts it predicted would look good with this type of image. Another button press, and it automatically placed the text roughly where it was in the original sketch, now sized and positioned to avoid unsightly overlaps.

Within about five minutes and maybe ten clicks, Parasnis went from a sketch to something pitch-ready. It’s clearly a demo he had done before and knew would work well, but it did a good job conveying where he wants Photoshop to go: less clicking around a mountain of menus to learn all the nuances, more doing.

“If I gave this to a [professional] creative artist today, they would be thrilled,” he added. “But this is what I mean by ‘democratizing’ — now a student could do this without knowing anything about Photoshop.”

A screenshot of the prototype Photoshop interface, shared by Adobe. The branches on the right represent repeatable/swappable actions the Sensei AI took at each step.

But what about everyone who already knows how to use Photoshop? Don’t they hate the idea of having their hard-learned workflows turned into a button press or a voice command?

“We internally debate a lot,” said Parasnis. “What are the implications of this? We are very mindful of it, and we think a lot about it.”

“The creative community has been championing this, saying ‘give me these tools!’” he said. “Because if something that takes them three hours today, they can now do in thirty seconds… it frees them up to do more.”

As an example of how it could save time for established pros, he returned to the billboard of the woman in the coat standing beneath the street lamp. What if the artist has to present multiple versions, with, say, the model facing the opposite direction, or featuring a male model rather than a female one?

He tapped the image of the model, and all the alternative photos the AI had originally suggested returned. He flipped the search criteria from female to male and picked an image of a male model wearing a similar trench coat. It dropped into the scene, but all of the other steps, like background removal, color matching, and lighting adjustments, were already done. The AI remembers what he asked of it, reapplying those same steps when something is swapped in.

“There is no real creative endeavor in repeating this poster four times,” said Parasnis. “And that just saved hours.”

“This notion of ‘hey, that is going to replace me and my job with automated robots’…. that’s one version of the story. The other version of the story, though, is that it made [them] that much more productive, and [they] can do way more stuff and focus on truly creative expression.”

So why start down this path now? After decades of Photoshop working one way, why reimagine what it could look like?

Partly, it’s because this is only now becoming possible after years of AI research. Also, after hundreds of millions of user hours put into Photoshop and other tools, Adobe has a deep understanding of what users spend time doing over and over again in each project. But a big part is the aforementioned shift in user expectations.

“There is an undeniable shift towards more and more people wanting to do more and more sophisticated tasks, on devices where they are not just tethered to a desk. That’s not a surprise,” said Parasnis. “What is increasingly interesting is the [changing] time window from when you get an idea in your head to where you want to share that with the whole world…. that time is just shrinking dramatically.”

“It used to be I had some idea, I’ll draw a sketch, maybe someday I’ll go sit in front of a computer screen for a few hours. Now… like, I have a 14-year-old… he’d want to capture it instantly, do the editing he needs to do, and instantly share it. I think there is a dramatic acceleration towards everything becoming real time.”

I asked him how much of this is driven by consumers finding their “real time” solutions in free apps like Snapchat or Instagram: hardly full-blown Photoshop replacements, but enough to satisfy most users’ desire for a one-click ‘make my picture pretty’ button.

“If somebody else is going to push the limits of what’s possible, we don’t want to be the company that suddenly wakes up and says ‘oh, we didn’t see that shift coming,’” he said. “If you walk down the halls here… we basically try to drive a healthy level of insecurity and paranoia around being the company that reimagines what the next app is.”

And that doesn’t stop with Photoshop. Across the board, Parasnis told me, they’re looking at how their products work and how AI will fit into the mix.

In Premiere, Adobe’s video editing tool, that’ll mean things like using AI to adjust videos for different screen shapes/sizes while automatically keeping the subject in view.

In Lightroom, it’ll mean using AI to better identify people, items, and places the user might be searching for in their photos. You could ask it something like “Find a picture of my dog,” later tacking on additional criteria like “running” or “at the beach.”

This same AI-driven mission extends beyond their visual creative products, as well. In a project Parasnis said he expects to discuss publicly soon, Adobe has been working on using AI to analyze and adapt the countless PDFs that already exist out in the wild. They’re cracking away at tools that can take an existing PDF and use AI to reformat it on the fly for easier consumption on smaller screens, or chew through a 50-page document and spit out a two-page summary.

Wrapping up, I just had to ask: with all of the AI research that Adobe is doing, does … any of it ever freak him out?

“As a technologist, I have an optimistic mind view that you have to be mindful of the downsides. You have to be mindful of avoiding pitfalls. Right now we see a lot of that, with unanticipated uses or unanticipated consequences,” he answered. “You have to be vigilant, you have to be mindful — but I do believe more often than not, these breakthroughs will have far more positive impact than not.”