Facebook this morning issued a lengthy breakdown of its recent research into brain-computer interfaces (BCI) as a way to control future augmented reality interfaces. The piece coincides with a Facebook-funded UCSF research paper published in Nature Communications today titled “Real-time decoding of question-and-answer speech dialogue using human cortical activity.”

Elements of the research have fairly humane roots, as BCI technology could be used to assist people with conditions such as ALS (or Lou Gehrig’s disease), helping them communicate in ways their bodies are no longer naturally able to.

Accessibility could certainly continue to be an important use case for the technology, though Facebook appears to have its sights set on broader applications with the creation of AR wearables that eliminate the need for voice or typed commands.

“Today we’re sharing an update on our work to build a non-invasive wearable device that lets people type just by imagining what they want to say,” Facebook AR/VR VP Andrew “Boz” Bosworth said on Twitter. “Our progress shows real potential in how future inputs and interactions with AR glasses could one day look.”

“One day” appears to be the key phrase here. Many of the caveats in the post note that the technology is still on a relatively distant horizon. “It could take a decade,” Facebook writes in the post, “but we think we can close the gap.”

Among the strategies the company is exploring is the use of a pulse oximeter to monitor neurons’ consumption of oxygen as a way of detecting brain activity. Again, that’s still a ways off.

“We don’t expect this system to solve the problem of input for AR anytime soon. It’s currently bulky, slow, and unreliable,” the company writes. “But the potential is significant, so we believe it’s worthwhile to keep improving this state-of-the-art technology over time. And while measuring oxygenation may never allow us to decode imagined sentences, being able to recognize even a handful of imagined commands, like ‘home,’ ‘select,’ and ‘delete,’ would provide entirely new ways of interacting with today’s VR systems — and tomorrow’s AR glasses.”

Obviously there are some red flags here for privacy advocates. There would be with any large tech company, but Facebook in particular presents lots of built-in privacy and security concerns. Remember the uproar when it launched a smart screen with built-in camera and microphones? Now apply that to a platform that’s designed to tap directly into your brain and you’ve got a good idea of what we’re dealing with here.

Facebook addresses this concern in the piece.

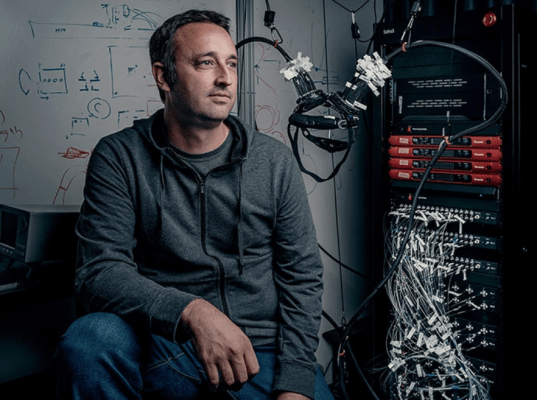

“We can’t anticipate or solve all of the ethical issues associated with this technology on our own,” Facebook Reality Labs Research Director Mark Chevillet says in the piece. “What we can do is recognize when the technology has advanced beyond what people know is possible, and make sure that information is delivered back to the community. Neuroethical design is one of our program’s key pillars — we want to be transparent about what we’re working on so that people can tell us their concerns about this technology.”

Facebook seems intent on getting out in front of those concerns a decade or so ahead of time. Users have seemingly been comfortable giving away a lot of private information, as long as it’s been part of a slow, steady trickle. By 2029, maybe the notion of letting the social network plug directly into our grey matter won’t seem so crazy after all.