If you’ve been seeing “502 Bad Gateway” notices all morning, for better or worse, you’re not alone. Cloudflare has been experiencing major outages this morning, leaving many sites reeling in their wake. In fact, the company’s System Status page, which collects global incidents, reads like a laundry list of major cities across the globe.

Cloudflare has acknowledged what looks to be an extremely widespread issue and appears to be working to address it. “Cloudflare has implemented a fix for this issue and is currently monitoring the results,” the company writes. “We will update the status once the issue is resolved.” We’ve reached out to the company for more information and will update this post when we hear back.

For now, maybe go take a walk around the block. It’s nice outside.

Update: Cloudflare co-founder and CEO Matthew Prince offers up some insight into what’s going on here. “Massive spike in CPU usage caused primary and backup systems to fall over. Impacted all services. No evidence yet attack related. Shut down service responsible for CPU spike and traffic back to normal levels. Digging in to root cause.”

Everything appears to be back to normal. The company is now offering more insight into what happened, blaming its own software rather than an attack, as some initially speculated.

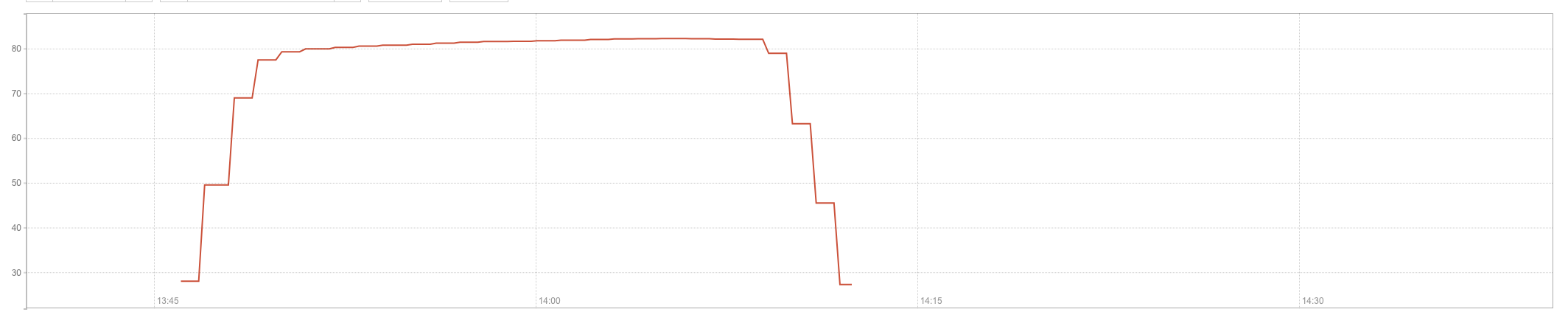

“For about 30 minutes today, visitors to Cloudflare sites received 502 errors caused by a massive spike in CPU utilization on our network,” the company writes in a blog post. “This CPU spike was caused by a bad software deploy that was rolled back. Once rolled back the service returned to normal operation and all domains using Cloudflare returned to normal traffic levels. This was not an attack (as some have speculated) and we are incredibly sorry that this incident occurred. Internal teams are meeting as I write performing a full post-mortem to understand how this occurred and how we prevent this from ever occurring again.”

In a further post, the company has apologized for the issue, writing, “We recognize that an incident like this is very painful for our customers. Our testing processes were insufficient in this case and we are reviewing and making changes to our testing and deployment process to avoid incidents like this in the future.”