If AI-powered robots are ever going to help us out around the house, they’re going to need a lot of experience navigating human environments. Simulators, virtual worlds that look and behave just like real life, are the best place for them to learn, and Facebook has created one of the most advanced such systems yet.

Facebook’s new open-source simulator, called Habitat, was briefly mentioned some months ago, but today it received the full expository treatment to accompany a paper on the system being presented at CVPR.

Teaching a robot to navigate a realistic world and accomplish simple tasks takes a considerable amount of time, so doing it in a physical space with an actual robot is impractical. It might take hundreds of hours, even years of real time, for the robot to learn over many repetitions how best to get from one place to another, or how to grip and pull a drawer.

Instead, the robot’s AI can be placed in a virtual environment that approximates the real one, and the basics can be hashed out as fast as the computer can run the calculations that govern that 3D world. That means you can achieve hundreds or thousands of hours of training in just a few minutes of intense computing time.

Habitat is not itself a virtual world, but rather a platform on which such simulated environments can run. It is compatible with several existing systems and environments (SUNCG, Matterport3D, Gibson and others), and it is optimized for efficiency, so researchers can run it at hundreds of times faster than real time.

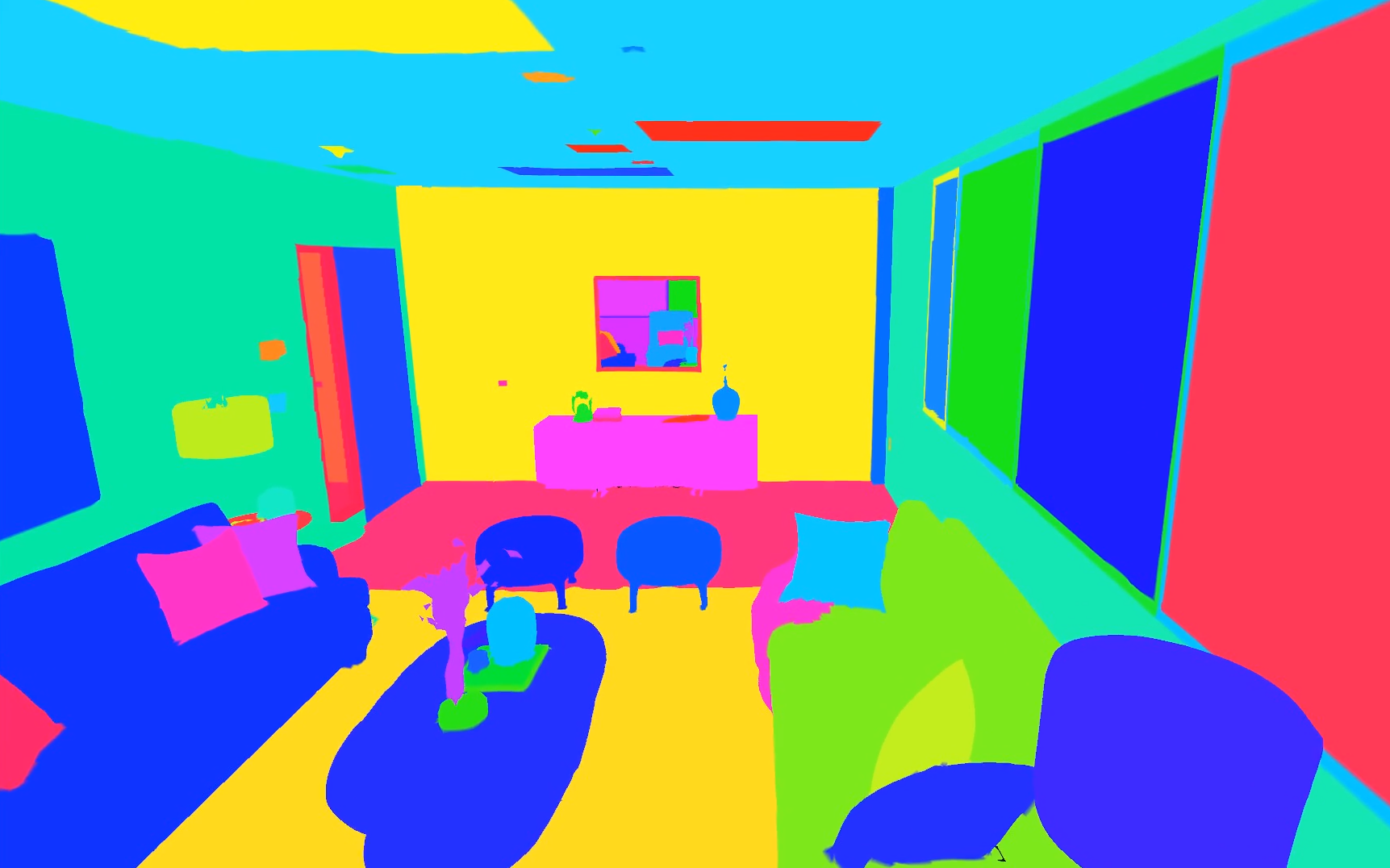

But Facebook also wanted to advance the state of the art in virtual worlds, so it created Replica, a database for Habitat that includes a number of photorealistic rooms organized into a whole house: a kitchen, bathroom, doors, a living room with couches, everything. It was created by Facebook’s Reality Labs and is the result of painstaking photography and depth mapping of real environments.


The detail with which these are recreated is high, but you may notice some artifacts, especially along ceilings and inaccessible edges. Those areas probably aren’t a focus because AI vision agents don’t rely on detail in ceilings and distant corners for navigation; shapes like chairs and tables, or the way the walls define a hallway, are much more important.

Even more important, however, are the myriad annotations the team has added to the 3D data. It’s not enough to simply capture the 3D environment; the objects and surfaces must be consistently and exhaustively labeled. That’s not just a couch: it’s a grey couch with blue pillows. And depending on the logic of the agent, it might or might not know that the couch is “soft,” that it’s “on top of a rug,” that it’s “by the TV” and so on.

Including labels like this increases the flexibility of the environment, and a comprehensive API and task language let agents tackle complex multi-step problems like “go to the kitchen and tell me what color the vase on the table is.”
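To make the point about labels concrete, here is a toy Python sketch of how annotations turn the vase question into a simple lookup once the agent reaches the kitchen. It is not Habitat’s API or Replica’s annotation format: the `LabeledObject` class, the sample scene and the `attribute_of_object_on` helper are all hypothetical, and the sketch skips navigation entirely, which is the part the agent actually has to learn.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class LabeledObject:
    """One annotated object in a hypothetical scene (not Replica's real schema)."""
    obj_id: int
    category: str                                   # semantic class, e.g. "couch" or "vase"
    room: str                                       # room the object is in
    attributes: dict = field(default_factory=dict)  # free-form labels, e.g. {"color": "grey"}
    on_top_of: Optional[int] = None                 # obj_id of the supporting object, if any


# A tiny made-up scene echoing the article's examples.
SCENE = [
    LabeledObject(1, "couch", "living room", {"color": "grey", "soft": True}),
    LabeledObject(2, "pillow", "living room", {"color": "blue"}, on_top_of=1),
    LabeledObject(3, "table", "kitchen", {"color": "white"}),
    LabeledObject(4, "vase", "kitchen", {"color": "green"}, on_top_of=3),
    LabeledObject(5, "vase", "living room", {"color": "red"}),
]


def find(scene, category, room=None):
    """Return all objects of a category, optionally restricted to one room."""
    return [o for o in scene if o.category == category and (room is None or o.room == room)]


def attribute_of_object_on(scene, room, target, support, attribute):
    """Answer e.g. 'what color is the vase on the table in the kitchen?'.

    Returns the requested attribute of the first `target` object in `room`
    that rests on an object of category `support`, or None if there is none.
    """
    by_id = {o.obj_id: o for o in scene}
    for obj in find(scene, target, room):
        base = by_id.get(obj.on_top_of)
        if base is not None and base.category == support:
            return obj.attributes.get(attribute)
    return None


print(attribute_of_object_on(SCENE, "kitchen", "vase", "table", "color"))  # green
```

Because “on top of” is stored as a reference to another labeled object, the question reduces to a few lookups; without such labels, the agent would have to infer the same relationships from raw pixels and depth.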

After all, if these assistants are meant to help out, say, a disabled person who can’t easily get around their home, they’ll need a certain level of savvy. Habitat and Replica are meant to help create that savvy and give the agents the practice they need.

Despite its advances, Habitat is only a small step along the road to truly realistic simulator environments. For one thing, the agents themselves aren’t realistically represented: a real robot might be tall or short, have wheels or legs, and use depth cameras or RGB ones. Some logic won’t change (your size doesn’t influence the distance from the couch to the kitchen), but some will; a small robot might be able to fit under a table, or be unable to see what’s on top of it.

Furthermore, although Replica and many other 3D worlds like it are visually realistic, they are almost completely non-functional when it comes to physics and interactivity. You could tell an agent to go to the bedroom and locate the second drawer of the wardrobe, but there’s no way at all to open it. There is, in fact, no drawer: just a piece of the scenery labeled as such, which can’t be moved or touched.

Other simulators focus on physics rather than visuals. One is THOR, a simulator meant to let AIs practice things like opening that drawer, which is an amazingly difficult task to learn from scratch. I asked two developers of THOR about Habitat. Both praised the platform for providing a powerfully realized place for AIs to learn navigation and observational tasks, but emphasized that, because it lacks interactivity, Habitat is limited in what it can teach.

Obviously, however, there’s a need for both, and it seems that for now one can’t be the other: simulators can be either physically or visually realistic, but not both. No doubt Facebook and others in AI research are hard at work creating one that manages both.