Artificial intelligence applied to information security can engender images of a benevolent Skynet, sagely analyzing more data than imaginable and making decisions at lightspeed, saving organizations from devastating attacks. In such a world, humans are barely needed to run security programs, their jobs largely automated out of existence, relegating them to a role as the button-pusher on particularly critical changes proposed by the otherwise omnipotent AI.

Such a vision is still in the realm of science fiction. AI in information security is more like an eager, callow puppy attempting to learn new tricks – minus the disappointment written on their faces when they consistently fail. No one’s job is in danger of being replaced by security AI; if anything, a larger staff is required to ensure security AI stays firmly leashed.

Arguably, AI’s highest use case currently is to add futuristic sheen to traditional security tools, rebranding timeworn approaches as trailblazing sorcery that will revolutionize enterprise cybersecurity as we know it. The current hype cycle for AI appears to be the roaring, ferocious crest at the end of a decade that began with bubbly excitement around the promise of “big data” in information security.

But what lies beneath the marketing gloss and quixotic lust for an AI revolution in security? How did AI ascend to supplant the lustrous zest around machine learning (“ML”) that dominated headlines in recent years? Where is there true potential to enrich information security strategy for the better – and where is it simply an entrancing distraction from more useful goals? And, naturally, how will attackers plot to circumvent security AI to continue their nefarious schemes?

How did AI grow out of this stony rubbish?

The year AI debuted as the “It Girl” in information security was 2017. The year prior, MIT completed their study showing “human-in-the-loop” AI out-performed AI and humans individually in attack detection. Likewise, DARPA conducted the Cyber Grand Challenge, a battle testing AI systems’ offensive and defensive capabilities. Until this point, security AI was imprisoned in the contrived halls of academia and government. Yet, the history of two vendors exhibits how enthusiasm surrounding security AI was driven more by growth marketing than user needs.

In the broader market, interest in AI was already running high. Google’s acquisition of DeepMind in 2014 proved that venture capitalists could make money from AI investments; funding of AI startups jumped 302% from 2013 to 2014. By 2016, that figure had surged to just over $1.0 billion. Through that lens, information security was notably tardy to the AI party.

Cylance, an endpoint protection company recently acquired by BlackBerry, was the first notable startup to trumpet AI in their product pitch – as far back as 2013. However, the information security industry’s interest in AI seems to follow the legacy of Darktrace, a network traffic analysis startup, more closely.

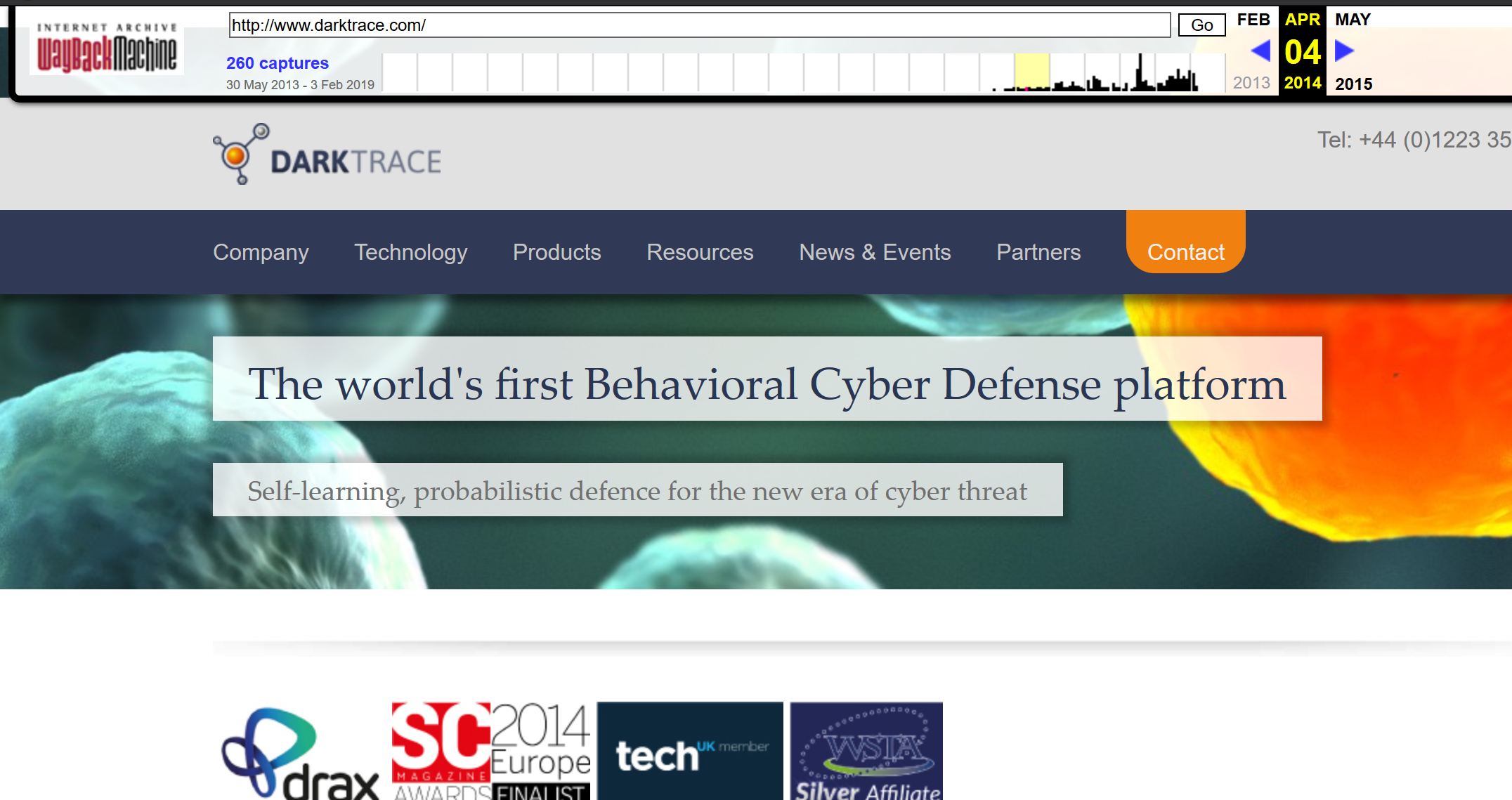

Darktrace began its marketing quest by touting “behavioral cyber defense,” with references to “learning” but a distinct lack of AI or ML. By early 2014, it was “self-learning, probabilistic defense.” Later that year and into 2015, it quietly referenced “machine learning,” but led with its “Enterprise Immune System” approach. By 2016, “machine learning” was front and center alongside “Enterprise Immune System” – coinciding with Darktrace’s $65 million Series C funding round.

Darktrace’s next – and largest – funding round in 2017 marked the inauguration of “artificial intelligence” next to “machine learning” in their core message, paired like bargain wine and cheese that overpowers the senses. Their product was now apparently driven by AI, although it also claimed its “unique, unsupervised machine learning” as its analysis engine elsewhere. By 2018, Darktrace was the “world leader in Cyber AI” – the claim that stands on their site today.

A journey through history on Google shows that Darktrace drove a considerable amount of buzz on the topic. Albeit to a lesser extent, Cylance also contributed to the “AI will solve security problems” narrative, as did smaller entrants to the market that followed such as Deep Instinct, PatternEx, and SparkCognition.

From that fateful turning point in 2017 until now, companies previously highlighting their machine learning approaches – which were often already an exaggeration of their capabilities – simply rebranded as AI-powered products. Even worse, the narrative around AI became quickly – and perhaps intentionally – entwined with the chorus of Chief Information Security Officers (“CISOs”) wishing for ways to automate their security program.

At present, AI is met with groans and side-eyes from security professionals who have seen first-hand how these technologies fail to live up to their purportedly revolutionary potential. Even those who stickied their hands attempting to turn cotton candy into a rope pulley are left confused as to what exactly security AI is supposed to do – and countless more, both inside and outside information security, are equally perplexed.

Image via Wayback / Darktrace

What even is AI?

AI constitutes programs that can continually learn from context to take actions that optimize for success, whatever the goal may be. Machine learning, although more commonly cited in information security, is technically a subset of AI. As seen with Darktrace, companies previously riding hype waves of ML often simply rebranded as AI – which is a bit like saying squares began rebranding themselves as rectangles.

This confusion frustrates practitioners, making it harder to navigate the vendor landscape. Mike Johnson, former CISO at Lyft, concurs that the industry discussion is lacking clarity, “We don’t have an agreed upon definition of AI. Every discussion seems to be ‘AI/ML,’ as if they are the same thing. We need to define what we’re actually talking about.” Despite the notably different approaches between ML, deep learning, and the sparkly vision of AI as human-level decision-makers, vendors refer to them all as “AI” in their pitches.

Harini Kannan, Data Scientist at Capsule8, laments that the term ‘AI’ is used so inscrutably and to cover too broad a spectrum of topics, “I tend to assume the buzzword ‘AI’ is used to refer to Neural Networks (used in deep learning). But, there seems to be a lack of transparency, especially in information security, regarding what algorithm or technique is being used.”

Defenders like Johnson agree, arguing that, “We need honesty and transparency. A lot of ‘AI/ML’ solutions out there seem to be regular expressions at scale.” Regular expressions (aka “regex”) are sequences of characters that define a search pattern – for instance, “defen(c|s)e” would match both the British (defence) and American (defense) spelling. A conglomerate of “if-then” statements can similarly be deemed AI, although it is a far cry from what people imagine when they think of a machine imitating intelligent human thinking.
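To make the “regular expressions at scale” point concrete, here is a minimal Python sketch of the spelling pattern above, using the standard `re` module. The `find_spellings` helper and the sample sentence are invented for illustration.

```python
import re

# The pattern from the text: matches "defence" or "defense".
PATTERN = re.compile(r"defen(c|s)e")


def find_spellings(text):
    """Return every British or American spelling found in the text."""
    # group(0) is the whole match, not just the captured "c" or "s".
    return [match.group(0) for match in PATTERN.finditer(text)]


if __name__ == "__main__":
    sample = "Layered defence is British; layered defense is American."
    print(find_spellings(sample))
```

Nothing here learns anything: the pattern is written by a person and matches exactly what that person anticipated, which is the gap Johnson is pointing at.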

Fundamentally, any AI system works by “learning.” The system is fed a pool of historical data, referred to as “training data.” On top of this, there is a question that informs how the system analyzes the data – as does the type of required answer. For instance, “When should people get ice cream?”, combined with a history of when New Yorkers bought ice cream, might approve a proposed ice cream date in the summer on a Friday or Saturday evening.
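The ice cream example can be sketched in a few lines of Python. This is a deliberately tiny illustration, not a real learning algorithm: the purchase history is made up, and “learning” here is nothing more than counting how often ice cream was bought for each combination of season and day.

```python
from collections import defaultdict

# Hypothetical training data: (season, day, bought_ice_cream).
HISTORY = [
    ("summer", "Friday", True),
    ("summer", "Friday", True),
    ("summer", "Saturday", True),
    ("summer", "Saturday", False),
    ("summer", "Saturday", True),
    ("winter", "Friday", False),
    ("winter", "Tuesday", False),
    ("winter", "Saturday", False),
    ("winter", "Saturday", True),
    ("winter", "Saturday", False),
]


def train(history):
    """Learn the purchase rate for each (season, day) pair seen in the data."""
    counts = defaultdict(lambda: [0, 0])  # key -> [purchases, observations]
    for season, day, bought in history:
        counts[(season, day)][0] += int(bought)
        counts[(season, day)][1] += 1
    return {key: bought / total for key, (bought, total) in counts.items()}


def approve(model, season, day):
    """Approve a proposed ice cream date if past purchases were the norm.

    Returns None when the model never saw this combination, because it
    has no basis for an answer.
    """
    rate = model.get((season, day))
    if rate is None:
        return None
    return rate > 0.5


if __name__ == "__main__":
    model = train(HISTORY)
    print(approve(model, "summer", "Friday"))
    print(approve(model, "spring", "Monday"))
```

The second call matters as much as the first: a proposal for a spring Monday gets no answer at all, because nothing like it appears in the training data.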

There is also the tragically twisted conflation of automation and AI within the gossamer land of product pitches. Automation and AI are not the same; automation may leverage AI, but it does not have to. A simple rule can block network connections from a specific country without human effort required each time. Similarly, AI can produce results without triggering an action itself – the human determines the necessary course of action based on the AI’s output.
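The distinction is easy to see in code. The sketch below is hypothetical throughout: the country code “ZZ”, the connection record, and the `recommend` function are placeholders, and the score passed to `recommend` stands in for whatever a model might output.

```python
# Hypothetical blocklist of country codes; "ZZ" is a placeholder.
BLOCKED_COUNTRIES = {"ZZ"}


def should_block(connection):
    """Automation without AI: a fixed rule applied to every connection."""
    return connection["country"] in BLOCKED_COUNTRIES


def recommend(score, threshold=0.8):
    """AI without automation: a stand-in for a model's output.

    This only produces a label. Nothing is blocked here; a human decides
    what to do with the recommendation.
    """
    return "review" if score >= threshold else "ignore"


if __name__ == "__main__":
    print(should_block({"src": "203.0.113.7", "country": "ZZ"}))
    print(recommend(0.93))
```

The first function acts on its own and involves no learning; the second might sit on top of a learned model yet takes no action.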

The forceful blending of these terms renders the term AI somewhat meaningless. While it may be a marketing boon short term to generate cheap buzz, longer-term, vendors who are truly developing decision-making systems as intelligent as humans will struggle to maintain credibility through the glitter-drenched AI swamp. Even worse, information security professionals will be increasingly distrustful of these mislabeled tools and shun the category altogether.

How does “cyber AI” get misapplied?

It is debatable whether “true” AI has been deployed in security, and AI seems overwhelmingly misapplied to security challenges. As Johnson notes, “I have seen no implementations of AI that fit the definition of AI in the information security world. Everything I’ve seen in infosec has been static machine learning models.” Only in the exceptionally sloppy sense of AI serving as an umbrella term for any machines that learn is security AI being actively used in organizations.

Even when considering the broadest notion of algorithms described as AI, a foundational truth is that AI is designed for an ordered and rule-driven world. Of course, the information security world is anything but ordered or rule-driven. As a complex system, information security involves many intertwined relationships between attackers, defenders, governments, software providers, and users – none of which behave in a perfectly rational, orderly way.

Security Engineer Jamesha “Jam” Fisher highlights the human element as an unavoidable wrinkle when considering AI in information security. “There’s always going to be something that can’t just be caught, at least for now, with data or machine-learning analysis. If I’ve learned anything about information security, it’s that humans will find interesting and unexpected ways to use technology.”

If AI tools excel in an ordered and rule-driven world, it means their ability to detect “unknowns” is limited in the messy, chaotic information security world. As Gollnick explains, “If events are truly random or truly novel, then there is no reliable and repeatable way to use data to learn from them or about them. Defensive security AI solutions are less valuable when they are employed against rare or sporadic events.”

Through this lens, arguably the worst use case for AI in information security is intrusion detection – but it is the use case most discussed and funded. Both ML and AI require training data, which is an inherently historical data set, in order to “learn” whatever function is specified. The strength of these tools is thus in classification – in finding activity similar to what was observed before, not in finding previously unknown activity. A classic example of classification is recommendation algorithms.

Recommendation engines thrive on matching items you have previously viewed with the preferences of other users, determining which items are most commonly linked. For instance, Netflix might suggest watching its “Daredevil” or “Jessica Jones” shows if you previously watched and thumbs-up’d the “Avengers” movies. It is supremely unusual to see implementations looking to find the movie you would hate the most – the anomalies in your preferences.

This is one of the drivers of the most frustrating element to practitioners using machine learning-based tools – the enormous rate of false positives. Because false negatives – classifying a real attack as benign – are entirely unacceptable in information security, vendors overcorrect their models, leading to false positives.
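The overcorrection is a threshold choice, and a toy example shows the trade. The scores and labels below are invented, and `confusion` is an illustrative helper rather than anything a particular product exposes.

```python
# Hypothetical (score, is_attack) pairs from an imaginary detector.
EVENTS = [
    (0.95, True), (0.80, True), (0.40, True),
    (0.70, False), (0.55, False), (0.45, False),
    (0.30, False), (0.20, False), (0.10, False), (0.05, False),
]


def confusion(events, threshold):
    """Count false positives and false negatives at an alert threshold."""
    false_pos = sum(1 for score, attack in events
                    if score >= threshold and not attack)
    false_neg = sum(1 for score, attack in events
                    if score < threshold and attack)
    return false_pos, false_neg


if __name__ == "__main__":
    for threshold in (0.75, 0.35):
        print(threshold, confusion(EVENTS, threshold))
```

At a threshold of 0.75 this data yields no false positives but misses one attack; dropping the threshold to 0.35 catches every attack at the cost of three false positives. A vendor who cannot tolerate the missed attack will always pick the second setting.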

Each false positive that becomes an alert is a new time sink for a security team. In a typical security operations program, analysts will triage alerts to determine whether the underlying event is worth investigating. This process takes, at minimum, thirty seconds, though often much longer. When false positives outweigh real events worth investigating, it is natural for security teams to try to minimize the amount of time spent processing alerts – leading to analyst burnout and true positives being missed.
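The thirty-second floor adds up quickly. As back-of-the-envelope arithmetic, with an alert volume that is purely an assumption:

```python
def triage_hours(alerts_per_day, seconds_per_alert=30):
    """Analyst hours consumed per day if every alert gets a look."""
    return alerts_per_day * seconds_per_alert / 3600


if __name__ == "__main__":
    # 2,000 alerts per day is a hypothetical volume, not a measured one.
    print(round(triage_hours(2000), 1))
```

At a hypothetical 2,000 alerts per day, the minimum thirty seconds each works out to roughly 16.7 analyst hours, more than two full eight-hour shifts spent before any real investigation begins.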

The underlying assumption behind most AI and ML-driven detection tools is that malicious activity exhibits characteristics unobserved in normal usage of a system. However, AI can only detect activity seen previously. If the activity is not in the data from which AI learns, it cannot detect it. Thus, if you train an AI model to recognize an attack, it can only be because you caught the attack before and possessed it in your data, raising the question: “Why do you need AI?”

A paper from nearly a decade ago by Sommer and Paxson discussed the considerable pitfalls when applying ML to intrusion detection. Among these are the tendency for ML to generate numerous false positives, attack-free data for training being difficult to procure, and attackers evading detection by making the system “learn” malicious activity as part of its assessment of what is benign – a risk for which there is a growing body of research.

Image via veriflow.net

Cursed magic: adversarial AI

Much of information security’s history is an arms race; each time there is a defensive innovation, offensive research quickly blossoms to circumvent these new defenses. In the realm of AI, offensive techniques are already being brainstormed for how to turn pure-hearted AI magic into dark witchcraft.

The more AI and ML systems are implemented in organizations, the juicier these systems appear to attackers. As Fisher points out, “Most people don’t realize how pervasive AI and machine learning is getting in all of our day-to-day. It seems like every company is either thinking about or has plans to develop a data-centric focus these days.”

The powerful wizardry of AI and ML systems is surprisingly easy to corrupt. Evasion represents the simplest method of bypassing AI and ML, whereby attackers tweak their content to avoid being classified as “malicious.” This can be alluring to attackers as it does not require interfering with the model nor reconstructing training data – simple trial and error can be effective.

One example most familiar to users is the case of spam filtering. An attacker can determine what keywords or phrases a spam filter has learned as part of evaluating whether email is spam or not. Once the attacker discovers what these features might be, such as a keyword like “Viagra,” they can move to misspellings like “V1agra” or “Vigara” to bypass this filter.
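A naive keyword filter makes the evasion plain. The sketch below is an assumption-laden toy: real spam filters weigh many features, and the single “learned” keyword here is just the one from the example above.

```python
# Hypothetical keyword a naive filter might have learned.
SPAM_KEYWORDS = {"viagra"}


def is_spam(message):
    """Flag a message if any word exactly matches a learned keyword."""
    words = message.lower().split()
    return any(word.strip(".,!?") in SPAM_KEYWORDS for word in words)


if __name__ == "__main__":
    for subject in ("Buy Viagra now!", "Buy V1agra now!", "Buy Vigara now!"):
        print(subject, is_spam(subject))
```

Only the first subject line is flagged. The attacker never touched the filter or its training data; two rounds of trial and error were enough.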

Beyond evasion, attackers will usually have two goals in mind when attacking AI or ML systems. They either want to force the model to generate incorrect output, or they want to steal the model or training data. In the case of information security, corrupting the model itself to make wrong predictions can help ensure their attacks sneak by unnoticed by AI-driven security systems.

Ariel Herbert-Voss, a PhD student in Computer Science at Harvard University and co-founder of the DEFCON AI Village, researches proposed attacks on ML systems to show how they perform in the real world. She notes that attackers achieve their goals through two primary means, namely by “poisoning the training data over a period of time so that the model starts to associate certain data points with incorrect predictions, and extracting enough predictions through an open API that the attacker can reconstruct the training data.”
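Poisoning can be illustrated with a deliberately simple model. Everything in this sketch is hypothetical: the feature (failed logins per hour), the numbers, and the “model” itself, which just places a cutoff halfway between the average benign and average malicious value. It is not a method taken from Herbert-Voss’s research.

```python
from statistics import mean


def train_threshold(samples):
    """Learn a cutoff halfway between the benign and malicious averages.

    samples: list of (value, label) pairs, label "benign" or "malicious".
    """
    benign = [value for value, label in samples if label == "benign"]
    malicious = [value for value, label in samples if label == "malicious"]
    return (mean(benign) + mean(malicious)) / 2


def is_flagged(value, threshold):
    """Alert on anything above the learned cutoff."""
    return value > threshold


# Hypothetical feature: failed logins per hour.
CLEAN = [(2, "benign"), (3, "benign"), (4, "benign"),
         (40, "malicious"), (50, "malicious")]

# Over time, the attacker generates noisy activity that ends up
# labeled benign in the training data.
POISON = [(30, "benign")] * 6

if __name__ == "__main__":
    attack = 28
    for name, data in (("clean", CLEAN), ("poisoned", CLEAN + POISON)):
        threshold = train_threshold(data)
        print(name, threshold, is_flagged(attack, threshold))
```

Trained on the clean data, the cutoff is 24 and an attack generating 28 failed logins per hour is flagged. After six poisoned records, the cutoff drifts to 33 and the same attack passes unnoticed.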

Poisoning the AI data waters does not necessarily require much skill – implicit bias can even be fed into machine data by its operators, let alone a motivated, ill-intentioned adversary. As Fisher cautions, “It could result in unintended biases based off country, race, and identity without even realizing it. Keeping those biases in mind is very important, especially with security being ever-changing as technology and people evolve.”

While this sort of data corruption that twists statistical models away from their goals is feasible today, other adversarial AI methods often seen in blazing and aghast headlines are still firmly bound to the realms of academic research and Hollywood. Herbert-Voss explained that academic papers have shown novel attacks, like generating stickers and 3D objects to fool AI systems, but they require artificial environments in which the attacker has more control than they would in realistic scenarios.

Much like software vulnerabilities, the actual danger that adversarial AI attack vectors pose is limited to the extent that they can be exploited. As Herbert-Voss advises, “A lot of security issues with AI or ML systems can be avoided by considering how to manage failure modes through system architecture decisions.” Thus, it is a mistake to conflate dramatic, overblown fears of niche attack vectors with the very real necessity of threat modeling AI systems.

If attackers continue being advanced and persistent, then it is inevitable that they will carefully study security AI used defensively to determine how to contaminate such tools to facilitate their iniquitous plans. The entire ecosystem of security AI needs to carefully consider how to protect access to training data, to avoid selection bias, to prevent data reconstruction – lest the AI itself create a security or privacy incident.

How do you do AI + security correctly?

Security programs do not fail because they lack AI to better detect something; they fail because they struggle to routinely and efficiently patch, threat model, or apply configuration management changes. Security teams drown in manual, eternally-urgent tasks on a ceaseless treadmill of fires to extinguish. They seek more bodies and more technology to solve the problem of their processes choking them – trying to create new paths of airflow rather than avoid the hand grasping their throat in the first place.

This is where AI might assist, behaving like a finely-tuned Clippy, the ill-fated Microsoft Word assistant of yore. It is akin to automating the process of flossing and brushing your teeth based on environmental factors like time of day and whether you ate, rather than alerting that there is an unknown type of food particle in your teeth that might lead to a novel dentistry issue. It is less sexy, alleviating a time-consuming pain point rather than surprising and delighting with its intellectual freshness.

Image via Getty Images / STAN HONDA / Stringer

The equivalent of flossing and tooth-brushing in information security too often feels like “solved” problems. We know the solutions, even if they are slow and inefficient, draining time (and thus money). Finding unknown threats, however, does not feel solved, and thus feels more urgent – even though it is less impactful to a security program than speeding up the basics. We gravitate towards the unique and strange but are crippled by the inexpediency of manual tasks with which we are all too familiar.

Workflow assistance in the form of intelligent suggestions could help humans make smarter choices, faster. The goal is not to fully automate human decision-making but to augment it. As Gollnick suggests, “AI tools and techniques are automation of a process. They do not generate new knowledge or new facts.” AI cannot automate processes out of thin air; there must be existing manual processes that are important enough – whether due to repetition or impact – to invest time in automating them.

The burgeoning wealth of scripts created by enterprise security teams serves as an example of where automation is successful – and therefore where automated decision-making might prove even more valuable. One example is Securitybot by Slack, which automatically contacts the user associated with a sketchy event to ask them if it was indeed sketchy – like getting a home security alert while you are away and texting your friend to ensure it was them entering your home.

Metta by Uber simulates attackers to test the efficacy of security tools and incident response – like a controlled fire to test if firefighters are appropriately following procedure and that firehoses are working correctly. Security Monkey by Netflix spots when Amazon Web Services (“AWS”) or Google Cloud Platform (“GCP”) accounts are configured insecurely or when policies change – like the digital equivalent of ensuring you are not leaving the stove on or flagging when you decide to triple your monthly restaurant expenses.

None of these tools need AI to be useful. They solve problems that did have solutions, albeit involving a lot of manual, time-consuming effort. The sweet spot for AI is in tackling the arduous, repetitive tasks that also require intellectual effort and expertise. Crucially, these tasks must exist within somewhat orderly bounds, where the realm of what is possible is known.

The root of trust

Those who dare dive into the murky waters of AI may come away from the affair relating to the famous quote by the philosopher Friedrich Nietzsche, “I am affected, not because you have deceived me, but because I can no longer believe in you.”

While finding the right use case for security AI is its own barrier, trustworthiness remains a towering hurdle that casts a long shadow. Even AI executing flawlessly will not be fit for adoption if its underlying methodology cannot be understood by its human operators. As Johnson cautions, “The models and mechanisms need to be well understood. How can you deploy a solution without knowing how it works? ‘Magic’ is not an acceptable response here.”

Those outside of security, including DevOps and infrastructure teams, may find the promise of security AI tempting, a way to bolster defenses without becoming experts. But how can anyone similarly beguiled determine trustworthiness? Gollnick suggests a litmus test for AI-driven products: “Extraordinary claims require extraordinary evidence. The best type of evidence for an AI-product implementation is a live, successful demonstration on data that has never been previously seen by an algorithm.”

Regrettably, security AI vendors often supply their own environments with pre-selected attacks to catch – a purposeful move so algorithms can be tuned to catch these attacks for demonstration purposes. P-hacking, also known as data fishing, is the repeated analysis of data until it appears to support a desired conclusion. Gollnick warns, “Someone who is p-hacking would try different algorithms, modify features slightly, change the way null values are handled, train and test a model again… and again and again.”
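The effect Gollnick describes can be reproduced on pure noise. In the sketch below, the data is random and the labels are random, so there is nothing to learn; the “models” are arbitrary threshold rules invented for this illustration. Trying enough of them against the same small demo set still turns up one that looks better than chance.

```python
import random


def accuracy(rule, data):
    """Fraction of (features, label) rows the rule classifies correctly."""
    return sum(rule(features) == label for features, label in data) / len(data)


def make_noise(rng, rows, width=8):
    """Random features with random labels: there is nothing to learn."""
    return [([rng.random() for _ in range(width)], rng.random() < 0.5)
            for _ in range(rows)]


def random_rule(rng, width=8):
    """One arbitrary 'model': threshold a randomly chosen feature."""
    index, cutoff = rng.randrange(width), rng.random()
    return lambda features: features[index] > cutoff


def p_hack(rng, test_set, attempts=200):
    """Keep retrying until some rule happens to look good on the test set."""
    rules = [random_rule(rng) for _ in range(attempts)]
    return max(rules, key=lambda rule: accuracy(rule, test_set))


if __name__ == "__main__":
    rng = random.Random(0)
    demo_data = make_noise(rng, rows=50)
    best = p_hack(rng, demo_data)
    print("accuracy on the demo data:", accuracy(best, demo_data))
    print("accuracy on unseen data:  ",
          accuracy(best, make_noise(rng, rows=5000)))
```

The winning rule is selected for its score on the demo data, so that score is flattering by construction. Only the second number, measured on data the rule has never seen, says anything about how it would behave in production, which is why a live demonstration on unseen data is the more meaningful test.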

The line between repeated analysis towards stronger modeling and p-hacking is exceptionally blurry, making it difficult even for experts to identify when it has been employed. This leads to a need to request transparency around these AI approaches. As Johnson counsels, “Customers need to understand what algorithms are being applied and how they work, otherwise, they are simply deploying a magic box and hoping it works.”

Ideally, the onus would not solely be on customers to dig through the twinkling magic dust to uncover the specifics of how an AI-based tool works. Kannan advocates for greater transparency by vendors as well, “I think what’s missing is an honest discussion about practical AI, and not just playing buzzword bingo. Being transparent about what’s being done, which models are being used against what kind of attacks, so AI in information security as a whole can move forward and become trustworthy.”

But until vendors move from brandishing a magic wand to honest discussions about their AI approaches, practitioners should be vigilant in minimizing the blast radius of an ill-fated decision at the hands of AI. Fisher believes anyone – even those who are not security savvy – should understand how an AI-driven tool works and consider, “Do you know if the solution will impact availability of a product? How are you going to respond to potential concerns that users may have?”

If AI makes the decision to deny access to the CEO, or triages an event as low-importance when it is high-importance, how damaging is it to the organization’s security? Security teams may not have a sense of which actions are immediately unacceptable or instead acceptable if reversed – or those that are acceptable if reversed only within a given time frame.

The humans cannot shrug or point fingers at each other, else any counteraction to mitigate the damage from AI-driven decisions becomes less efficient. As Kannan advises, “It’s easy to build ML models with literally all the features you have and get a decent result in a controlled environment. But deployment of the model in production requires answering questions about performance issues, defense against adversarial models, testing for false positives leading to alert fatigue, and more.”

Additionally, as privacy concerns grow due to cases like Cambridge Analytica and rumblings around privacy legislation in the United States, privacy also must be considered when evaluating AI-based tools. Fisher notes, “Even though privacy isn’t information security, it should always be included in the discussion, especially as organizations get more data-focused in their operations. Thinking about the moral and ethical implications of how organizations handle the data, or the resulting actions AI produces from that data, is tantamount.”

Using AI-driven security tools thus requires its own form of threat modeling, just as security teams would perform when their organization wants to release a new feature to customers or adopt new accounting software. If any amount of decision-making is involved, an AI-powered tool’s systemic effects must be fully understood, whether its impact on other systems or on the security team itself.

Image via GM-NASA

Conclusion

At present, AI in information security is less a marvelously powerful magic wand than an overly whittled stick that, under a rare blood moon, will point out the demon amongst the flock. Black-box AI powering intrusion detection sows more confusion and catalyzes more manual effort – antithetical to its potential. However, there exists flowering opportunity in the meadow of boring, daily, but cognitively-intensive tasks that are a necessary part of security work.

The true dream for AI in information security is building systems that can clearly explain their decision-making by articulating which factors led to chosen actions. Such a system would help security teams refine their own assumptions and audit decisions, engendering trustworthiness in the system’s ability to represent the team’s strategic thinking. A black box approach will never provide the level of trust that is required in a domain fraught with paranoia like information security, where decisions are often time-sensitive and of critical importance.

Until the gilded, extravagant veil of lavish promises falls to reveal the true face of security AI, all too many will keep being bamboozled. Information security, and its neighbors in operations, must insist on evidence of how the underlying system works – embracing skepticism and holding fast to the maxim: “never trust, always verify.” Magical outcomes may indeed come to fruition, but they can only come about through tying this exalted fancy math to the cold, wild ground of reality.