Released this month 20 years ago, “The Matrix” went on to become a cultural phenomenon. This wasn’t just because of its ground-breaking special effects, but because it popularized an idea that has come to be known as the simulation hypothesis. This is the idea that the world we see around us may not be the “real world” at all, but a high-resolution simulation, much like a video game.

While the central question raised by “The Matrix” sounds like science fiction, it is now debated seriously by scientists, technologists and philosophers around the world. Elon Musk is among those; he thinks the odds that we are in a simulation are a billion to one (in favor of being inside a video-game world)!

As a founder and investor in many video game startups, I started to think about this question seriously after seeing how far virtual reality has come in creating immersive experiences. In this article we look at the development of video game technology past and future to ask the question: Could a simulation like that in “The Matrix” actually be built? And if so, what would it take?

What we’re really asking is how far away we are from The Simulation Point, the theoretical point at which a technological civilization would be capable of building a simulation that was indistinguishable from “physical reality.”

[Editor’s note: This article summarizes one section of the upcoming book, “The Simulation Hypothesis: An MIT Computer Scientist Shows Why AI, Quantum Physics and Eastern Mystics All Agree We Are in a Video Game.”]

From science fiction to science?

But first, let’s back up.

“The Matrix,” you’ll recall, starred Keanu Reeves as Neo, a hacker who encounters enigmatic references to something called the Matrix online. This leads him to the mysterious Morpheus (played by Laurence Fishburne, and aptly named after the Greek god of dreams) and his team. When Neo asks Morpheus about the Matrix, Morpheus responds with what has become one of the most famous movie lines of all time: “Unfortunately, no one can be told what The Matrix is. You’ll have to see it for yourself.”

Even if you haven’t seen “The Matrix,” you’ve probably heard what happens next. In perhaps its most iconic scene, Morpheus gives Neo a choice: Take the “red pill” to wake up and see what the Matrix really is, or take the “blue pill” and keep living his life. Neo takes the red pill and “wakes up” in the real world to find that what he thought was real was actually an intricately constructed computer simulation, basically an ultra-realistic video game! Neo and other humans are actually living in pods, each jacked into the system via a cord into the cerebral cortex.

Who created the Matrix and why are humans plugged into it at birth? In the two sequels, “The Matrix Reloaded” and “The Matrix Revolutions,” we find out that Earth has been taken over by a race of super-intelligent machines that need the electricity from human brains. The humans are kept occupied, docile and none the wiser thanks to their all-encompassing link to the Matrix!

But the Matrix wasn’t all philosophy and no action; there were plenty of eye-popping special effects during the fight scenes. Some of these now have their own name in the entertainment and video game industry, such as the famous “bullet time.” When a bullet is shot at Neo, the visuals slow down time and manipulate space; the camera moves in a circular motion while the bullet is frozen in the air. In the context of a 3D computer world, this makes perfect sense, though now the camera technique is used in both live action and video games. AI plays a big role too: in the sequels, we find out much more about the agents pursuing Neo, Morpheus and the team. Agent Smith (played brilliantly by Hugo Weaving), the main adversary in the first movie, is really a computer agent: an artificial intelligence meant to keep order in the simulation. Like any good AI villain, Agent Smith (who was voted the 84th most popular movie character of all time!) is able to reproduce himself and overlay himself onto any part of the simulation.

“The Matrix” storyboard from the original movie. (Photo by Jonathan Leibson/Getty Images for Warner Bros. Studio Tour Hollywood)

The Wachowskis, creators of “The Matrix,” claim to have been inspired by, among others, science fiction master Philip K. Dick. Most of us are familiar with Dick’s work from the many film and TV adaptations, ranging from Blade Runner and Total Recall to the more recent Amazon show, The Man in the High Castle. Dick often explored questions of what was “real” versus “fake” in his vast body of work. These are some of the same themes we will have to grapple with to build a real Matrix: AI that is indistinguishable from humans, implanting false memories and broadcasting directly into the mind.

As part of writing my upcoming book, I interviewed Dick’s wife, Tessa B. Dick, and she told me that Philip K. Dick actually believed we were living in a simulation. He believed that someone was changing the parameters of the simulation, and most of us were unaware that this was going on. This was, of course, the theme of his short story, “Adjustment Team” (which served as the basis for the blockbuster “The Adjustment Bureau,” starring Matt Damon and Emily Blunt).

A quick summary of the basic (non-video game) simulation argument

Today, the simulation hypothesis has moved from science fiction to a subject of serious debate because of several key developments.

The first was when Oxford professor Nick Bostrom published his 2003 paper, “Are You Living in a Simulation?” Bostrom doesn’t say much about video games or how we might build such a simulation; rather, he makes a clever statistical argument. Bostrom theorized that if a civilization ever got to the Simulation Point, it would create many ancestor simulations, each with large numbers (billions or trillions?) of simulated beings. Since the simulated beings would vastly outnumber the real beings, any beings (including us!) were more likely to be living inside a simulation than outside of it!
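The counting step in this argument can be made concrete with a little arithmetic. What follows is only a minimal sketch, not Bostrom’s own formulation: the function name and the numbers (1,000 simulations, 10 billion beings each) are hypothetical values chosen for illustration.

```python
def simulated_fraction(num_simulations: int,
                       beings_per_simulation: int,
                       real_beings: int) -> float:
    """Fraction of all beings (real plus simulated) that are simulated."""
    simulated = num_simulations * beings_per_simulation
    return simulated / (simulated + real_beings)


# Hypothetical numbers, chosen only to illustrate the counting argument:
# one "real" population of 10 billion running 1,000 ancestor simulations
# that each contain 10 billion simulated beings.
fraction = simulated_fraction(
    num_simulations=1_000,
    beings_per_simulation=10**10,
    real_beings=10**10,
)
print(f"{fraction:.4f}")  # prints 0.9990
```

Under these assumed numbers, a being picked at random would be simulated about 99.9 percent of the time, and the fraction only climbs as the number of simulations grows.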

Other scientists, like physicists Neil deGrasse Tyson (the host of Cosmos) and Stephen Hawking, weighed in, saying they found it hard to argue against this logic.

Bostrom’s argument implied two things that are the subject of intense debate. The first is that if any civilization ever reached the Simulation Point, then we are more likely in a simulation now. The second is that we are more likely all AI or simulated consciousness rather than biological ones. On this second point, I prefer to use the “video game” version of the simulation argument, which is a little different from Bostrom’s version.

Video games hold the key

Let’s look more closely at the video game version of the argument, which rests on the rapid pace of development of video game and computer graphics technology over the past decades. In video games, we have both “players,” who exist outside the video game, and “characters,” who exist inside the game. In the game, we have PCs (player characters), which are controlled by the players (you might say mentally attached to them), and NPCs (non-player characters), which are the simulation’s artificial characters.

It’s much easier to see a path from today’s VR to something like “The Matrix” than it was in 1999 when the movie was released. With games like Fortnite and League of Legends having millions of online players interacting in a shared online world, the idea that we might actually be in a shared connected simulated world doesn’t seem so far-fetched.

Elon Musk himself gave the video game version of the simulation argument at the Code Conference in 2016, which spurred renewed interest in this topic over the past few years. Forty years ago, said Musk, we had Pong, which was basically two lines and a dot. Today we have sophisticated 3D MMORPGs; if this pace continues, what will games be like in one, two or 10 decades?

As a video game designer, I started to think about this topic more seriously after what I call my own “conversion” experience with virtual reality. That same year (2016), I was playing a VR ping-pong game built by a startup in Sausalito (Free Range Games). As I played the opponent, the motions I went through felt so real that I momentarily forgot I was in a room with a VR headset holding HTC Vive controllers. In fact, at the end of the game I placed the paddle on the table and leaned forward against the table. Of course, paraphrasing another famous line from “The Matrix” about there being no spoon: There was no paddle and there was no table! They were just information that was being rendered as pixels on my headset.

On the road to the Simulation Point

While Bostrom and Musk talk at a high level about living inside such a simulation, they don’t get into much detail about how such a simulation would be built.

One way to conjecture about the possibility of this hypothesis is to see if we (or any civilization) could build such an all-encompassing simulation. Table 1 lists the stages of technology development, starting from the simplest video games and moving through critical milestones, that a civilization would need to surpass to reach the Simulation Point.

| Stage | Technology | Time frame |
| --- | --- | --- |
| 0 | Text Adventures | 1970s-1980s |
| 1 | Graphical Arcade Games | 1970s-1980s |
| 2 | Graphical RPG Games | 1980s |
| 3 | 3D Rendered MMORPGs and Virtual Worlds | 1990s-2000s |
| 4 | Immersive Virtual Reality | 2010s-2020s |
| 5 (*) | Photo-realistic Augmented and Mixed Reality | 2020s |
| 6 (*) | Real World Rendering: Light Fields and 3D Printing | 2010s-2020s |
| 7 (*) | Mind Interfaces | 2020s-? |
| 8 (*) | Implanted Memories | 2030s-? |
| 9 (*) | Artificial Intelligence and NPCs | 2010s-? |
| 10 (*) | Downloadable Consciousness | 2040s-? |
| 11 | The Simulation Point | 2100? |

A quick glance at this table suggests that we are about halfway to reaching the Simulation Point. You’ll also notice the stages do not need to occur in serial fashion; in fact, many of the key technologies have been, and will be, developed in parallel. If you look at the history of video games, text adventure games (stage 0) developed in parallel with graphical arcade video games (stage 1), before they merged into graphical RPGs (role-playing games, stage 2).

Ironically, if you look at the stages we have yet to master, identified with asterisks (*), you’ll realize that getting from here to the Simulation Point is not just a matter of increased pixel resolution. It is rather one of optimization and of how we build interfaces between humans and computers, so it may rely not on Moore’s Law but on other innovations.

A look back, from text adventures to MMORPGs

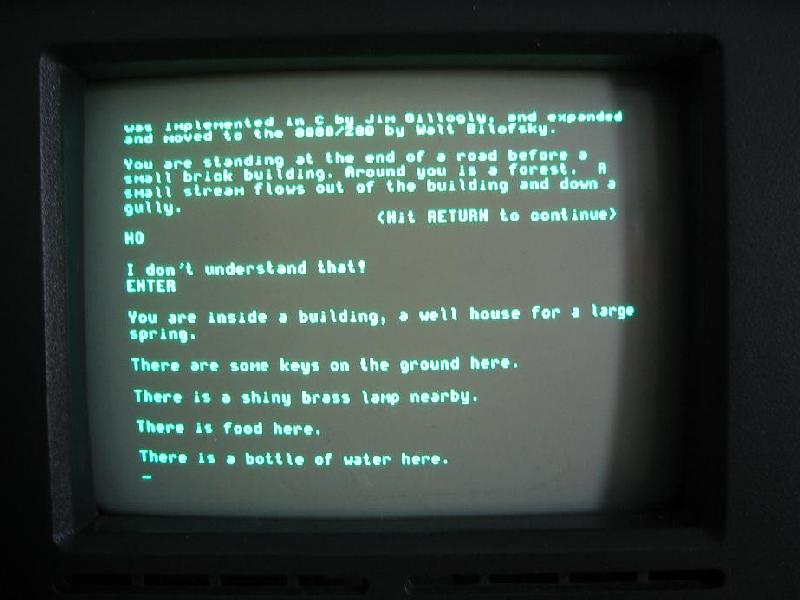

Let’s look at these stages in a little more detail. Stage 0, text adventures, began with the creation of Colossal Cave Adventure in the 1970s, and it reached its zenith in the 1980s with MIT spin-off Infocom, which made games such as Zork and Planetfall. These adventures introduced many concepts that are still used in today’s games, including: the fundamental idea of a virtual “game world,” issuing commands to move around and explore this virtual game world and the existence of NPCs (or non-player characters).

Even though these games had no graphics at all, they were really relying on the most sophisticated graphics engine of all: the human brain. Ironically, if you look at the advanced stages of tech needed to reach the Simulation Point, we will need to move beyond our graphics rendering technology to beam directly into the brain, starting with Stage 7 (mind interfaces). What this means is that we are back to using the most advanced rendering engine we have, the same one used by text adventures: the human brain!

Stage 1, arcade games, began with Atari’s 1972 release of Pong, the first widely available arcade game. This stage reached its zenith with classic coin-op games in the 1980s, including Space Invaders and Pac-Man. These games showed that you could create a graphical world with its own geometry and physics using pixels, a significant technical accomplishment. In Asteroids, for example, you could go “beyond” the edge of the screen, and the ship would reappear on the other side, revealing a wraparound geometry.
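A wraparound geometry of this kind usually comes down to modular arithmetic on screen coordinates. The sketch below is a hypothetical illustration, not code from Asteroids itself; the function name and the 800-by-600 screen size are assumptions.

```python
from typing import Tuple


def wrap_position(x: float, y: float, width: int, height: int) -> Tuple[float, float]:
    """Wrap a position so that leaving one screen edge re-enters at the opposite edge."""
    # Python's % returns a non-negative result for a positive divisor,
    # so positions left of (or above) the screen wrap around correctly too.
    return (x % width, y % height)


# A ship that drifts 5 pixels past the right edge and 10 pixels above the top
# of a hypothetical 800x600 screen reappears on the left side, near the bottom.
print(wrap_position(805, -10, 800, 600))  # prints (5, 590)
```

Seen this way, the game world has no edge at all: its coordinates live on the surface of a torus.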

In the 1980s, these two nascent stages merged to form Stage 2, 2D graphical adventure games, like King’s Quest and Ultima. In these games, you played a character and could visually explore the game world, which was revealed one screen at a time. This genre of graphical adventures eventually reached its height with games like Legend of Zelda on the Nintendo system, which introduced better-fidelity 16-bit systems, only to disappear before it was resurrected by companies like Telltale Games.

In the 1990s, two major trends converged to reach Stage 3: 3D graphics and internet connectivity. One of the first hits to introduce 3D perspective wasn’t an RPG at all. Doom made the technical leap to be able to render the 3D world quickly enough so that players felt like they were “in the game.” MMORPGs have now evolved to the likes of World of Warcraft and Fortnite, hosting millions of 3D avatars (of all shapes, colors and sizes) in an online-shared 3D virtual world.

Anyone who has studied the history of video games will realize that in addition to an increase in processing power, progress is made when new optimization techniques are created. A key technique that made 3D worlds possible is conditional rendering, which shows only the pixels that are needed at any given time, given your character’s position in the game world. A whole industry has grown up around 3D modeling and rendering, ranging from texturing tools and game engines to particle simulators and light-ray-based rendering. While the result is ever more realistic rendering in video games, the real goal of this optimization is to be able to see the world and objects as information, and then only render that which can be observed by the player’s character!

This links back to one of the other main arguments for the simulation hypothesis, which relies on one of the strangest findings in quantum physics, called quantum indeterminacy. This property shows that every possible state of a particle exists (called a probability wave), and only when this wave collapses does the universe actually show us a concrete possibility. Like Schrodinger’s Cat, which is supposed to be both dead and alive until someone opens the box and observes it, video games advanced by adopting the golden rule of simulations: only render what can be observed.
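The “only render what can be observed” rule can be sketched in a few lines. The example below uses a simple 2D field-of-view and distance check, which is a simplified stand-in for the visibility culling real game engines perform, not any particular engine’s method. The `GameObject` class, the `visible_objects` function and the 90-degree field of view are all assumptions made for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class GameObject:
    name: str
    x: float
    y: float


def visible_objects(objects, cam_x, cam_y, facing_deg, fov_deg=90.0, max_distance=100.0):
    """Return only the objects inside the camera's field of view and draw distance."""
    visible = []
    for obj in objects:
        dx, dy = obj.x - cam_x, obj.y - cam_y
        if math.hypot(dx, dy) > max_distance:
            continue  # too far away to be observed, so never rendered
        # Signed angle between the facing direction and the object, in [-180, 180).
        angle_to_obj = math.degrees(math.atan2(dy, dx))
        offset = (angle_to_obj - facing_deg + 180.0) % 360.0 - 180.0
        if abs(offset) <= fov_deg / 2:
            visible.append(obj)
    return visible


# The world holds three objects, but the player (at the origin, facing along
# the positive x-axis) can only observe one of them.
world = [
    GameObject("tree", 10, 0),          # in front of the player
    GameObject("rock", -10, 0),         # directly behind the player
    GameObject("far_castle", 500, 0),   # in front, but beyond the draw distance
]
to_render = visible_objects(world, cam_x=0, cam_y=0, facing_deg=0)
print([obj.name for obj in to_render])  # prints ['tree']
```

The rock and the castle still exist as information in the world list; they simply cost nothing to draw until the player turns around or walks closer.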

Getting to full immersion: VR, AR, MR

Building on top of 3D MMORPGs, today virtual reality (VR) and augmented reality (AR) are starting to bring science fiction into reality. In last year’s Ready Player One, the OASIS was a fully immersive VR world that everyone used to escape from the real world! Students went to school and lived virtual lives, complete with battles and romance in this virtual world.

Today’s AR systems, like Magic Leap and the Microsoft HoloLens, use the same 3D modeling techniques from video games to render objects in the world around us. Like today’s VR systems, today’s AR still relies on clumsy glasses, but they are evolving rapidly. It is very possible with additional light-field technology (which simulates how light rays bounce off objects) that in the next 10 to 20 years we will see photo-realistic AR being rendered in real time in the environment.

The key to photo-realism in VR and AR isn’t simply a matter of number of pixels. As special effects from movies like Blade Runner 2049 show us, we have the resolution to create realistic objects in CGI that blend in with real-world objects. Today’s digital movies are delivered at 2K resolution and our computers are already capable of displaying 4K resolution. The problem is one of optimization (rendering only those pixels that are needed) and real-time re-rendering, and the volume of information that is needed to render a full, three-dimensional world.
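A quick back-of-the-envelope calculation shows why the volume of information matters more than raw resolution. The figures below are assumptions for illustration: 2K is taken as the digital cinema frame of 2048 by 1080, 4K as the consumer UHD frame of 3840 by 2160, film as 24 frames per second, and a VR headset as 90 frames per second for each of two eyes.

```python
# Assumed frame sizes (width, height) in pixels.
RESOLUTIONS = {
    "2K (digital cinema)": (2048, 1080),
    "4K (UHD)": (3840, 2160),
}


def pixels_per_second(width: int, height: int, frames_per_second: int, eyes: int = 1) -> int:
    """Raw number of pixels that must be produced each second."""
    return width * height * frames_per_second * eyes


for name, (width, height) in RESOLUTIONS.items():
    film = pixels_per_second(width, height, frames_per_second=24)
    stereo_vr = pixels_per_second(width, height, frames_per_second=90, eyes=2)
    print(f"{name}: {width * height:,} pixels per frame, "
          f"{film:,} per second for film, {stereo_vr:,} per second for stereo VR")
```

A film can be rendered offline at whatever pace the studio can afford, while a headset must produce every one of those pixels in real time as the viewer’s head moves, which is why skipping unneeded pixels is so valuable.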

Future tech: Mind interfaces and implanted memories

Now we move beyond where we are today into more speculative areas: Stage 7 (mind interfaces) and Stage 8 (implanted memories). One of the main reasons the Matrix was so convincing to humans like Neo was that the images were beamed directly into their brains, in this case via a wire that attached to the cerebral cortex. Basically, the brain was tricked into thinking the experience was real.

To build something like this, we will need to bypass today’s VR and AR goggles and interface directly with the brain to read our intentions and to visualize the game world.

Advances made in the last decade suggest that mind interfaces are not as far off as we might think. Startups in this field include Neurable, a Boston-based startup that is working on BCI (Brain Computer Interfaces) for controlling virtual reality. They’ve partnered with game development companies to create games that are controlled by your mind. You have to focus on a specific object with your eyes and mind to pick it up — no implants required — just a headset!

Another startup, Neuralink, funded by Elon Musk, claims to develop “high bandwidth and safe” brain-machine interfaces. In another nod to science fiction, Musk says the technology is based on the concept of “neural lace,” a digital layer that exists above the cerebral cortex, and which was coined as part of the Culture, a fictional civilization created by science fiction writer Iain Banks. This technology relies on an implant through a vein and then allows interfacing through broadband with other objects in the world around us. This fits into a megatrend of transhumanism, or augmenting the human body/mind with technology.

How do these mind interfaces work? There are many different techniques that are being experimented with today for “mind reading.” Within VR, tracking of eye movements is one area that is being used to discern a user’s intention. Going one step deeper includes reading brain waves (alpha, beta, theta) in a kind of biofeedback, which has been utilized by many products. A non-invasive approach is to use statistics to narrow down what the intentions are based on these factors. Another approach is to read vibrations in the vocal area that occur when we have certain intentions. And then, of course, there is the implanting path, which may lead down the road to the Simulation Point.

There’s another method that taps into other strange findings of quantum physics that seem to imply that the mind is connected to what we observe in the world around us. This almost sounds like magic, because as science fiction writer Arthur C. Clarke was famous for saying, “any sufficiently advanced technology is indistinguishable from magic.” Adam Curry, a former researcher at the Princeton Engineering Anomalies Research (PEAR) lab, has based several products on the lab’s unusual findings that the mind can influence random number generators to be, well, less random. These products, extending the findings of PEAR’s Global Consciousness Project, monitor the flow of random number generators (RNGs) and use deviations from the standard to identify changes in the minds of the participants. One product is a mood lamp, which changes colors as you think about them. Another is a mobile app, Entangled, to see how large numbers of RNGs deployed in an area change with mental and environmental conditions of the participants.
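To give a sense of what “deviation from the standard” could mean for a stream of random bits, here is a minimal sketch using an ordinary z-score against the binomial expectation for a fair coin. This is a textbook statistic chosen for illustration, not the method used by PEAR, the Global Consciousness Project or any of the products mentioned; the function name and the sample size are assumptions.

```python
import math
import random


def bit_deviation_z(bits) -> float:
    """Z-score of the number of 1s in a bit stream versus a fair-coin expectation."""
    n = len(bits)
    ones = sum(bits)
    expected = n / 2            # mean of a binomial(n, 0.5) distribution
    std_dev = math.sqrt(n) / 2  # sqrt(n * 0.5 * 0.5)
    return (ones - expected) / std_dev


# Simulate 10,000 bits from a software pseudo-random generator (a stand-in
# for a hardware RNG) and measure how far the stream strays from 50/50.
rng = random.Random(42)
sample = [rng.getrandbits(1) for _ in range(10_000)]
print(f"z-score: {bit_deviation_z(sample):+.2f}")
```

For an unbiased generator, the z-score should stay within about plus or minus 2 roughly 95 percent of the time, so any claimed effect would have to show up as deviations well beyond that range, sustained over many trials.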

So, we are well on the road to being able to read intentions and interpret them. How about the opposite — broadcasting into the mind?

Experiments done in the 1950s by neurosurgeon Wilder Penfield suggest that memories can be triggered inside the brain by electrical signals. But, in another nod to Philip K. Dick’s science fiction, to truly make progress on the road to the Simulation Point, we may need to be able to plant false memories. This was vividly shown in the movie “Blade Runner,” when the android Rachael (played by Sean Young) thought she was human because she “remembered” growing up.

Can false memories be implanted into the mind? Sociological research done in high-profile court cases shows that memories can be falsified by using a combination of suggestion and immersion.

In 2013, a team of researchers at MIT, while researching Alzheimer’s, found that they could implant false memories in the brains of mice. According to Susumu Tonegawa, professor of biology and neuroscience at MIT, these false memories end up having the same neural structure as real memories. This has been done in a very limited way to date, but the techniques are promising.

If memories can be falsified, then determinism breaks down, and the idea that we are in a fixed physical reality with a definite history is no longer a given. Stephen Hawking, speaking about information loss that occurs when particles go into black holes, warned us at a lecture at Harvard that the breakdown of determinism had profound implications: “The history books and our memories could just be illusions. It is the past that tells us who we are. Without it, we lose our identity.”

Does nature already have the answer?

We are still far away from these technologies being in a form that can be readily used, and much more study is needed. Like many scientists in the past, we may want to turn to nature for inspiration.

It turns out that there is already a ready-made biological technology that meets almost all the criteria of having arrived at the Simulation Point. It happens every night when we dream: our dreams are like mini-simulations!

Consider what happens when we dream:

- The “player” lies down physically, and their body goes to “sleep” (Stage 10, downloading of consciousness into a simulated body).

- Pictures and sounds are projected directly into the player’s mind’s eye (Stage 7, mind interfaces).

- The player is so immersed they forget that the world is not real (Stage 11, the simulation point).

- The player is conscious at some level, even though they are not aware of their consciousness outside of the dream (full immersion).

- The dream reads the player’s intentions directly from the mind (Stage 7, mind interfaces).

- There are non-player characters who may or may not be real (Stage 9, artificial intelligence).

- In the dream state, sometimes it seems there are memories associated with the dream that are not part of the person’s physical life (Stage 8, false memories).

It may just be that when a civilization reaches the Simulation Point, it just means they have learned to reproduce, with computation and video game technology, a process that every single human being already does naturally!

One of the traditions that has studied consciousness and dreams intensively is Tibetan Buddhism. In Dream Yoga, participants are trained to “recognize” when they are in a dream. This is similar to the modern practice of lucid dreaming, with one exception: the whole purpose of recognizing a dream as an “illusion” is so that monks can recognize that even when we are awake, we are in an illusory dream-like world, what’s called maya or illusion. Technology developed by Stephen LaBerge, formerly of Stanford’s sleep lab, has been used to bolster the ability to induce lucid dreams, raising the prospect that we could use similar technology to recognize that we are in a simulation!

In the Tibetan traditions, there is an even more esoteric, secret practice that might shed light on how we might download or upload consciousness. The Six Yogas of Naropa, which include Dream Yoga, also include a secret seventh Yoga, of consciousness transference and forceful projection, kept secret for obvious reasons. The idea is that consciousness leaves the body at death; a person adept at consciousness transference can go directly from this life into the next. A person adept at forceful projection can actually project this consciousness into another biological being. In what might sound like something out of a horror movie, the Tibetans tell stories of yogis who would go to grave sites with recently deceased younger bodies and forcefully project their consciousness into these bodies, which would then wake up (in ancient times only the wealthy cremated their bodies).

More study would need to be done on the techniques espoused by the Tibetan traditions — but if we are looking to enhance the capabilities of the mind and body, it makes sense to look at those who have claimed to do it for thousands of years!

Simulated versus real consciousness?

Which brings us to an important question: What is consciousness and how might it be simulated? AI is in vogue today, but the history of AI is caught up with the history of games. One of the first AIs ever created was a chess-playing computer by MIT professor Claude Shannon. One of Shannon’s contemporaries, Alan Turing, described the now-famous “Turing Test,” a conversation game that an AI passes when it is indistinguishable from a “real” human.

NPCs within video games can’t pass the Turing Test yet, but AI is advancing rapidly, already giving humans serious competition in traditional games like Chess and Go. China’s Xinhua news agency recently introduced virtual news anchors — a male and a female one — that can read the news like real humans.

One of the leaders of the transhumanist movement, futurist Ray Kurzweil, believes (as do others) that we are approaching the singularity in another way: downloading consciousness to silicon-based devices, preserving our minds forever. Kurzweil and those of this ilk believe it is simply a matter of duplicating the neurons and neural connections of the brain: 10^12 neurons connected through 10^15 synapses. While this task seemed insurmountable 20 years ago, today, teams have already simulated the neurons in a rat’s brain using a much smaller number of neurons and connections.

Others believe consciousness is more complicated, bordering on the philosophical and religious discussion. Most of the world’s religions (Eastern and Western traditions) already teach of transmission of consciousness: downloading it at birth and uploading it at death of the body! In the Eastern traditions, it is then beamed down into additional bodies through multiple lives.

Bostrom’s original simulation argument seemed to imply that we are all simulated, artificial beings rather than biological entities. However, if we think of our own world as a giant version of the Matrix, what I like to call the Great Simulation, then the video game metaphor is more apt.

The video game metaphor raises the possibility that there are both PCs (player characters) and NPCs (non-player characters) that are purely artificial. This may be what the religions have been telling us all along, but our science and technology haven’t been able to catch up to downloading and uploading consciousness!

The Simulation Point and the world as information

While VC Marc Andreessen famously said that “software is eating the world,” a more appropriate statement in the long run is that as each science advances, it is revealed that information is at the heart of it. Once upon a time, physics and biology were thought of as the study of physical objects; today, physicists and biologists are coming to the conclusion that information is the key to unlocking their sciences. Genes, for example, are nothing if not a way to store information inside biological computers. Physicist John Wheeler, and physics in general, went through several phases where they thought objects were material (discrete particles), then they thought they were fields (of probability), but eventually he came to the conclusion that everything in physics comes down to information. Before his death, Wheeler came up with a slogan that embodies this: “It from bit.”

If the world and consciousness can be represented as information, then we can make progress down the road to the Simulation Point. We are already well down this road with the 3D technology used in video games to present a “rendered world” and information stored on the server that is outside the rendered world. With the recent developments in AI and brain-computer interfaces, I believe we can get there in decades or a century at the most.

What would we do if we got to that point? In the movie, “The Matrix,” the simulation was created by super-intelligent machines in order to keep humans in bondage and utilize the electricity of their minds. I would like to think we would be much more benign with our creations! Perhaps we would just want to play better video games!

Nick Bostrom from Oxford argued that if such technology can ever be created, then chances are it has already been created by some advanced civilization somewhere in the universe!

If that’s the case, then who is to say that we aren’t, as Philip K. Dick believed, already in such a simulation?

On the 20th anniversary of the release of “The Matrix,” I’m reminded of the words of Havelock Ellis, who once said, “Dreams are real while they last. Can we say any more of life?”

Can we indeed?