From Elon Musk’s Neuralink to Bryan Johnson’s Kernel, a new wave of businesses is focusing specifically on ways to access, read from and write to the brain.

The holy grail is doing that without invasive implants, and doing it for a mass market.

One company aiming to do just that is New York-based CTRL-Labs, which recently closed a $28 million Series B. The team, which includes more than 12 PhDs, is decoding individual neurons and developing an electromyography-based armband that reads the nerve signals travelling from the brain to the fingers. These signals are then translated into the user’s intentions, enabling anything from thought-to-text to moving objects.

Scientists have known about electrical activity in the brain since Hans Berger first recorded it using EEG in 1924, and the term “brain-computer interface” (BCI) was coined as early as the 1970s by Jacques Vidal at UCLA. Since then, most BCI applications have been tested in the military or medical realm. Although it’s still the early innings of neurotech commercialization, in recent years both investment and company formation have picked up.

For a conversation on Flux, I sat down with Thomas Reardon, the CEO of CTRL-Labs, to discuss his journey to founding the company. Reardon explains why New York is the best place to build a machine-learning-based business right now and how he recruits top talent. He also shares what developers can expect when the CTRL-kit ships in Q1 and explains how a brain control interface may well make the smartphone redundant.

An excerpt is published below. Full transcript on Medium.

AMLG: I’m excited to have Thomas Reardon on the show today. He is the co-founder and CEO of CTRL-Labs, a company building the next generation of non-invasive neural computing here in Manhattan. He’s just cycled from uptown, so thanks for coming down here to Chinatown. Reardon was previously the founder of a startup called Avogadro, which was acquired by Openwave. He also spent time at Microsoft, where he was project lead on Internet Explorer. He’s one of the founders of the World Wide Web Consortium, a body that has established many of the standards that still govern the Web, and he’s one of the architects of XML and CSS. Why don’t we get into your background, how you got to where you are today and why you’re so excited to be doing what you’re doing right now.

The W3C is an international standards organization founded and led by Tim Berners-Lee.

TR: My background? Well, I’m a bit of an old man, so this is a longer story. I have a commercial software background. I didn’t go to college when I was younger. I started a company at 19 years old and ended up at Microsoft back in 1990, so this was before the Windows revolution stormed the world. I spent 10 years at Microsoft. The biggest part of that was starting up the Internet Explorer project and then leading the internet architecture effort at Microsoft, so that’s how I ended up working on things like CSS and XML; some of the web nerds out there will be deeply familiar with those terms. Then, after doing another company that focused on the mobile Internet, Phone.com and Openwave, where I served as CTO, I got a bit tired of the Web. I was fatigued by the sense that the Web was growing up without introducing any new technology experience or any new computer science to the world. It was just transferring bones from one grave to another. We were reinventing everything that had been invented in the 80s and early 90s and webifying it, but we weren’t creating new experiences. I got profoundly turned off by the evolution of the Web and what we were doing to put it on mobile devices. We weren’t creating new value for people. We weren’t solving new human problems. We were solving corporate problems. We were trying to create new leverage for the entrenched companies.

So I left tech in 2003. Effectively retired. I decided to go and get a proper college education. I went and studied Greek and Latin and got a degree in classics. Along the way I started studying neuroscience and was fascinated by the biology of neurons. This led me to grad school and a Ph.D., which I split across Duke and Columbia. I’d woken up sometime around 2005 or 2006 and was reading an article in The New York Times. It was something about a cell, and I scratched my head and said: we all hear that term, we all talk about cells in the body, but I have no idea what a cell really is. A New York Times article was too deep for me, and that embarrassed and shocked me, and it led me down this path of studying biology in a deeper, almost molecular way.

AMLG: So you were really in the heart of it all when you were working at Microsoft and building your startup. Now you are building this company in New York, where we’ve got Columbia and NYU and a lot of commercial industries. Does that feel different for you, building a company here?

TR: Well let’s look at the kind of company we’re building. We’re building a company which is at its heart about machine learning. We’re in an era in which every startup tries to have a slide in their deck that says something about ML, but most of them are a joke in comparison. This is the place in the world to build a company that has machine learning at its core. Between Columbia and NYU and now Cornell Tech, and the unbelievably deep bench of machine learning talent embedded in the finance industry, we have more ML people at an elite level in New York than any place on earth. It’s dramatic. Our ability to recruit here is unparalleled. We beat the big five all the time. We’re now 42 people and half of them are Ph.D. scientists. For every single one of them we were competing against Google, Facebook, Apple.

AMLG: Presumably this is a more interesting problem for them to work on. If they want to go work at Goldman in AI they can do that for a couple of years, make some dollars and then come back and do the interesting stuff.

TR: They can make a bigger salary, but they will work on something that nobody in the rest of the world will ever get to hear about. The reason why people don’t talk about all this ML talent here is that when it’s embedded in finance you never get to hear about it. It’s all secret. Underneath the waters. Then there’s the work we’re doing and this new generation of companies that have ML at their core. Even a company like Spotify is, on the one hand, fundamentally a licensing and copyright arbitrage company, but on the other hand what broke out for Spotify was their ML work. It was fundamental to the offer. That’s the kind of thing that’s happening in New York again and again now. There are lots of companies, like hardware companies, that would be scary to build in New York. We have a significant hardware component to what we’re doing. It is hard to recruit A-team, world-class hardware folks in New York, but we can get them. We recently hired the head of product from Peloton, who formerly ran MakerBot.

AMLG: We support that and believe there’s a budding pool here. And I guess the third bench is neuro, which Columbia is very strong in.

Larry Abbott helped found the Center for Theoretical Neuroscience at Columbia

TR: Yes, as is NYU. Neuroscience is in some sense the signature department at Columbia. The field breaks across two domains: the biological and the computational. Computational neuroscience is machine learning for real neurons, building working computational models of how real neurons do their work. It’s the field that drives a lot of the breakthroughs in machine learning. We have these biologically inspired concepts in machine learning that come from computational neuroscience. Columbia has by far the top computational neuroscience group in the world and probably the top biological neuroscience group in the world. There are five Nobel Prize winners in the program, and Larry Abbott, the legend of theoretical neuroscience. It’s an unbelievably deep bench.

AMLG: How do you recruit people that are smarter than you? This is a question that everyone listening wants to know.

Patrick Kaifosh, Thomas Reardon and Tim Machado, the co-founders of CTRL-Labs

TR: I’m not dumb, but I’m not as smart as my co-founder and I’m not as smart as half of the scientific staff inside the company. I affectionately refer to my co-founder Patrick Kaifosh, our chief scientist, as a mutant. He is one of the smartest human beings I’ve ever known. Patrick is one of those generational people who can change our concept of what’s possible, and he does that in a first-principles way. The recruiting part is engaging people in a way that lets them know you’re going to take all the crap away so that they can work on the hardest problems with the best people.

AMLG: I believe it, and I’ve met some of them. So what was the conversation with Kaifosh and Tim when you first sat down and decided to pursue the idea?

TR: So we were wrapping up our graduate studies, the three of us. We were looking at what it would be like to stay in academia and the bureaucracy involved in trying to be a working scientist in academia and writing grants. We were looking around at the young faculty members we saw at Columbia and thought, they don’t look like they’re having fun.

AMLG: When you were leaving Columbia it sounds like there wasn’t another company idea. Was it clear that this was the idea that you wanted to pursue at that time?

TR: What we knew is that we wanted to do something collaborative. We did not think, let’s go build a brain machine interface. We don’t actually like that phrase; we like to call them neural interfaces. We didn’t think about neural interfaces at all. The second ingredient we put into the stew and started mixing up was that we wanted to leverage experimental technologies from neuroscience that hadn’t yet been commercialized. In some sense this was like when Genentech was starting in the mid 70s. We had found the structure of DNA back in the early 50s, and there had been more than 20 years of molecular biology: we figured out DNA, then RNA, then protein synthesis, then the ribosome. Decades of molecular biology, but nobody had commercialized it yet. Then Genentech came along with this idea that we could make synthetic protein, that we could start to commercialize some of these core experimental techniques, do translational work and bring value back to humanity. It was all just sitting there on the shelf, ready to be exploited.

We thought, OK, what are the technologies in neuroscience that we use at the bench that could be exploited? For instance, spike sorting: the ability to listen with a single electrode to lots of neurons at the same time, see all the different electrical impulses and deconvolve them. You get this big noisy signal and you can see the individual neurons’ activity. So we started playing with that idea: let’s harvest the last 30 or 40 years of bench experimental neuroscience. What are the techniques that were invented that we could harvest?
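Spike sorting, as Reardon describes it, can be illustrated with a toy example. The sketch below is a minimal illustration under stated assumptions, not CTRL-Labs’ pipeline: it synthesizes a single-electrode trace containing two hypothetical neurons with different spike heights, detects threshold crossings, and separates the spikes with 1-D k-means on peak amplitude. Real spike sorters cluster whole waveform shapes; the amplitudes, the 0.5 threshold and the 5-sample refractory window are made up for illustration.

```python
import random


def make_signal(n_samples, units, noise_sd=0.1, seed=0):
    """Synthetic single-electrode trace: Gaussian noise plus one-sample spikes per unit."""
    rng = random.Random(seed)
    signal = [rng.gauss(0.0, noise_sd) for _ in range(n_samples)]
    for amplitude, spike_times in units:
        for t in spike_times:
            signal[t] += amplitude
    return signal


def detect_spikes(signal, threshold, refractory=5):
    """Indices of threshold crossings, taking the local peak and skipping a refractory window."""
    peaks = []
    i = 0
    while i < len(signal):
        if signal[i] > threshold:
            window = signal[i:i + refractory]
            peak = i + max(range(len(window)), key=window.__getitem__)
            peaks.append(peak)
            i = peak + refractory
        else:
            i += 1
    return peaks


def sort_spikes(amplitudes, n_units=2, iterations=10):
    """Group spikes by peak amplitude with 1-D k-means (a stand-in for waveform clustering)."""
    lo, hi = min(amplitudes), max(amplitudes)
    centers = [lo + (hi - lo) * k / (n_units - 1) for k in range(n_units)]
    labels = [0] * len(amplitudes)
    for _ in range(iterations):
        # Assign each spike to the nearest center, then move each center to its members' mean.
        labels = [min(range(n_units), key=lambda c: abs(a - centers[c])) for a in amplitudes]
        for c in range(n_units):
            members = [a for a, label in zip(amplitudes, labels) if label == c]
            if members:
                centers[c] = sum(members) / len(members)
    return labels, centers


# Two hypothetical neurons near the same electrode, distinguishable by spike height.
UNITS = [(1.0, [100, 300, 500, 700]), (2.5, [200, 400, 600, 800])]
signal = make_signal(1000, UNITS)
peaks = detect_spikes(signal, threshold=0.5)
labels, centers = sort_spikes([signal[p] for p in peaks])
for p, label in zip(peaks, labels):
    print(f"t={p}: unit {label}")
```

The refractory skip keeps one spike from being counted twice, and the clustering step is what turns “one noisy signal” into “individual neurons’ activity.”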

AMLG: We’ve been reading about these things and there’s been so much excitement about BMI, but you haven’t really seen things in the market that people can hack around with. I don’t know why that gap hasn’t been filled. Does no one have the balls to take these off the shelf and try to turn them into something, or is it a timing question?

The brain has upper motor neurons in the cortex, which map to lower motor neurons in the spinal cord, which send long axons down to contact the muscles. These release neurotransmitters that turn individual muscle fibres on and off. Motor units have a 1:1 correspondence with motor neurons. When motor neurons in the spinal cord fire, carrying an output signal from the brain, you get a direct response in the muscle. If those EMG signals can be decoded, then you can decode the zeros and ones of the nervous system: action potentials.

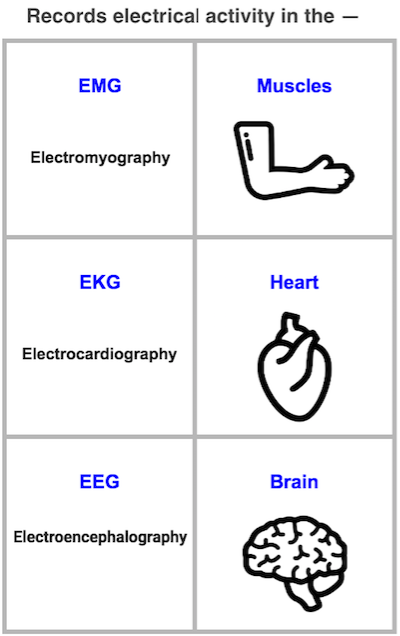
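To make the “zeros and ones” framing concrete, here is a small sketch that turns a list of decoded spike times for one motor unit into a binary train and an average firing rate. The spike times and the 1 ms bin are assumptions for illustration; the bin only needs to be short enough that no bin holds two spikes.

```python
def to_binary_train(spike_times_ms, duration_ms, bin_ms=1.0):
    """Discretize spike times into bins: 1 if the bin holds a spike, else 0."""
    n_bins = int(duration_ms / bin_ms)
    train = [0] * n_bins
    for t in spike_times_ms:
        index = int(t // bin_ms)
        if 0 <= index < n_bins:  # ignore spikes outside the recording window
            train[index] = 1
    return train


def firing_rate_hz(train, bin_ms=1.0):
    """Average firing rate implied by a binary train."""
    duration_s = len(train) * bin_ms / 1000.0
    return sum(train) / duration_s


spikes = [2.3, 10.0, 55.9, 80.5]  # hypothetical decoded spike times of one motor unit, in ms
train = to_binary_train(spikes, duration_ms=100)
print("".join(map(str, train[:12])))  # 001000000010
print(firing_rate_hz(train))
```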

TR: Some of this is chutzpah and some of it is timing. The technologies that we are leveraging weren’t fully developed for how we’re using them. We’ve had to do some invention since we started the company three years ago. But they were far enough along that you could imagine the gap and come up with a way to cross it. How could we, for instance, decode an individual neuron using a technology called electromyography? Electromyography has been around for probably over a century and that’s the ability to —

AMLG: That’s what we call EMG.

TR: EMG. Yes, you can record the electrical activity of a muscle. EKG, electrocardiography, is basically EMG for the heart alone: you’re looking at the electrical activity of the heart muscles. We thought if you improve this legacy technology of EMG sufficiently, if you improve the signal-to-noise ratio, you ought to be able to see the individual fibers of a muscle. If you know some neuroanatomy, what you figure out is that the individual fibers correspond to individual neurons. And by listening to individual fibers we can reconstruct the activity of individual neurons. That’s the root of a neural interface: the ability to listen to an individual neuron.

EEG toy “the Force Trainer”

AMLG: My family are Star Wars fans and one Christmas we had a device that we sat around playing with, the Force Trainer. If you put the device around your head and stare long enough, the thing is supposed to move. Everything I’ve ever tried has been like that Force Trainer, a little frustrating —

TR: That’s EEG, electroencephalography. That’s when you put something on your skull and record the electrical activity: the waves of activity that happen in the cortex, the outer part of your brain.

AMLG: And it doesn’t work well because the skull is too thick?

TR: There’s a bunch of reasons why it doesn’t work that well. The unfortunate thing is that when most people hear about it, that’s one of the first things they think about: oh well, all my thinking is up here in the cortex, right underneath my skull, and that’s what you’re interfacing with. That is actually —

AMLG: A myth?

TR: Both a myth and the wrong approach. I’m going to have to go deep on this one because it’s subtle but important. The first thing is, let’s just talk about the signal qualities of EEG versus what we’re doing, where we listen to individual neurons and do it without having to drill into your body or place an electrode inside of you. EEG is trying to listen to the activity of lots of neurons all at the same time, tens of thousands or hundreds of thousands of neurons, and get a sense of what the roar of those neurons is. I liken it to sitting outside of Giants Stadium with a microphone trying to listen to a conversation in Section 23, Row 4, Seat 9. You can’t do it. At best you can tell that one of the teams scored, because you hear the roar of the entire stadium. That’s basically what we have with EEG today: the ability to hear the roar. So, for instance, we say the easiest thing to decode with EEG is surprise. I could put a headset on you and tell if you’re surprised.
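The stadium analogy has a simple statistical core. Under a deliberately crude assumption (every neuron is an independent, unit-variance signal and the scalp electrode simply sums them), one neuron’s correlation with the sum falls as 1/sqrt(N), while an input shared by all N neurons, like a global surprise response, stands out against the background by a factor that grows as sqrt(N). The sketch below checks the first claim by simulation and computes the second directly; it illustrates the argument and is not a model of real EEG.

```python
import math
import random


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx = sum(x) / len(x)
    my = sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    return cov / math.sqrt(vx * vy)


def single_neuron_visibility(n_neurons, n_samples=20_000, seed=0):
    """Correlation between one neuron and the summed signal of n_neurons independent neurons."""
    rng = random.Random(seed)
    target = [rng.gauss(0.0, 1.0) for _ in range(n_samples)]  # the conversation in Section 23
    # The other n-1 independent unit-variance neurons sum to a Gaussian with variance n-1,
    # so the crowd is drawn in one step instead of simulating each neuron.
    crowd_sd = math.sqrt(n_neurons - 1)
    summed = [t + rng.gauss(0.0, crowd_sd) for t in target]
    return pearson(target, summed)


def shared_event_snr(n_neurons, shared_drive=1.0):
    """A drive shared by all neurons adds up to n * drive; independent noise grows only as sqrt(n)."""
    return shared_drive * n_neurons / math.sqrt(n_neurons)


if __name__ == "__main__":
    for n in (10, 1_000, 100_000):
        r = single_neuron_visibility(n)
        print(f"{n:>7} neurons: r = {r:.3f} (theory {1 / math.sqrt(n):.3f}), "
              f"shared-event SNR = {shared_event_snr(n):.0f}")
```

With 100,000 neurons in the sum, the one neuron you care about is essentially invisible, while a brain-wide event is easier to see than ever, which is the “roar” Reardon describes.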

AMLG: That doesn’t seem too handy.

TR: Yup, not much more than that. It turns out surprise is a global brain state and your entire brain lights up. In every animal that we do this in, surprise looks the same: it’s a big global Christmas tree that lights up across the entire brain. But you can’t use that for control. And this cuts to the name of our company, CTRL-Labs. I don’t just want to decode your state. I want to give you the ability to control things in the world in a way that feels magical. It feels like Star Wars. I want you to feel like the Star Wars Emperor. What we’re trying to do is give you control, and a kind of control you’ve never experienced before.

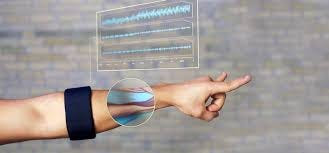

The MYO armband by Canadian startup Thalmic Labs

AMLG: This is control over motion, right? Maybe you can clarify: I’ve seen other companies like MYO, which made an armband, but that was really motion capture, capturing how you intended to gesture rather than what you were thinking about?

TR: Yeah. In some sense we’re a successor to MYO (Thalmic Labs). If Thalmic had been built by neuroscientists, it would have ended up on the path that we’re on now.

Thomas Reardon demonstrating Myo control

We have two regimes of control: one we call Myo control and the other we call Neuro control. Myo control is our ability to decode what ultimately becomes your movements: the electrical input to your muscles that causes them to contract; when you stop activating them, they slowly relax. We can decode the electrical activity that goes into those muscles even before the movement has started and even before it ends, and recapitulate that in a virtual way. Neuro control is something else. It’s kind of exotic and you have to try it to believe it. We can get to the level of the electrical activity of neurons, individual neurons, and train you rapidly, on the order of seconds, to control something. So imagine you’re playing a video game and you want to push a button to hop, like you’re playing Sonic the Hedgehog. I can train you in seconds to turn on a single neuron in your spinal cord to control that little thing.

AMLG: When I came to visit your lab in 2016 the guy had his hand out here. I tried it — it was an asteroid field.

TR: Asteroids, the old Atari game.

Patrick Kaifosh playing Asteroids — example of Neuro Control [from CTRL-Labs, late 2017]

AMLG: Classic. And you’re doing Fruit Ninja now too? It gets harder and harder.

TR: It does get harder and harder. So the idea here is that rather than moving, you can just turn these neurons on and off and control something. Really, there’s no muscle activity at that point; you’re just activating individual neurons. They might release a little pulse, a little electrochemical transmission to the muscle, but the muscle can’t respond at that level. What you find out is that rather than using your neurons to control, say, your five fingers, you can use your neurons to control 30 virtual fingers without actually moving your hand at all.

AMLG: What does that mean for neuroplasticity? Do you have to imagine a third hand, fourth hand, fifth hand, or a tail like in Avatar?

TR: This is why I focus on the concept of control. We’re not trying to decode what you’re “thinking.” I don’t know what a thought is, and there’s nobody in neuroscience who knows what a thought is. Nobody. We don’t know what consciousness is and we don’t know what thoughts are. They don’t exist in one part of the brain. Your brain is one cohesive organ, and that includes your spinal cord, all the way up. All of that embodies thought.

Inside Out (2015, Pixar). Great movie. Not how the brain, thoughts or consciousness work

AMLG: That’s a pretty crazy thought as thoughts go. I’m trying to mull that one over.

TR: It is. I want to pound that home. There’s not this one place. There’s not a little chair (to borrow from Dan Dennett), not a chair in a movie theater inside your brain where the real you sits watching what’s happening and directing it. No, there’s just your overall brain, and you’re in there somewhere across all of it. It’s that collection of neurons together that gives you this sense of consciousness.

What we do with Neuro Control, and with CTRL-kit, the device that we’ve built, is give you feedback. We show you, by giving you direct feedback in real time, millisecond-level feedback, how to train a neuron to move, say, a cursor up and down, to chase something or to jump over something. The way this works is that we engage your motor nervous system. Your brain has a natural output port, a USB port if you will, that generates output. In some sense this is sad for people, but I have to tell you your brain doesn’t do anything except turn muscles on and off. That’s the final output of the brain. When you’re generating speech, when you’re blinking your eyes at me, when you’re folding your hands and using your hands to talk to me, when you’re moving around, when you’re feeding yourself, your brain is just turning muscles on and off. That’s it. There is nothing else. It does that via motor neurons. Most of those are in your spine. Those motor neurons, it’s not so much that they’re plastic; they’re adaptive. So motor control is this ability to use neurons for very adaptive tasks. Take a sip of water from that bottle right in front of you. Watch what you’re doing.

Intention capture — rather than going through devices to interact, CTRL-Labs will take the electrical activity of the body and decode that directly, allowing us to use that high bandwidth information to interact with all output devices. [Watch Reardon’s full keynote at O’Reilly]

AMLG: Watch me spill it all over myself —

TR: You’re taking a sip. Everything you just did with that bottle, you’ve never done before. You’ve never done that task. In fact you just did a complicated thing: you actually put it around the microphone and had to use one hand, then use the other hand to take the cap off the bottle. You did all of that without thinking. There was no cognitive load involved. That bottle is different from any other bottle: it’s slippery, it’s got a certain temperature, the weight changes. Have you ever seen these robots try to pour water? It’s comical how difficult it is. You do it effortlessly, like you’re really good —

AMLG: Well I practiced a few times before we got here.

TR: Actually you did practice! The first year or two of your life, that’s all you were doing: practicing to get ready for what you just did. Because when you’re born you can’t do that. You can’t control your hands, you can’t control your body. You actually do something called motor babbling, where you just shake your hands around and move your legs and wiggle your fingers, and you’re trying to create a map inside your brain of how your body works and to gain control. And not just control, but flexible, adaptive control.

AMLG: That’s the natural training that babies do, which is sort of what you’re doing in terms of decoding?

TR: We are leveraging that same process you went through when you were one to two years old to help you gain new skills that go beyond your muscles. That was all about you learning how to control your muscles and do things. I want to emphasize again that what you just did is more complex than anything else you do. It’s more complex than language, math or social skills. Of the eight billion people on earth, everyone with a functioning nervous system, no matter what their IQ, can do it really well. That’s the part of the brain that we’re interfacing with: that ability to adapt skillfully to a task in real time. In neuroscience terms, that’s not plasticity. It’s adaptation.

AMLG: What does that mean in terms of the amount of decoding you’ve had to do? Because you’ve got a working demo, and I know that people have to train it for their own individual use, right?

Myo control attempts to understand what each of the 14 muscles in the arm is doing, then deconvolve the signal into individual channels that map to muscles. If they can build an accurate online map, CTRL-Labs believes there is no reason to have a keyboard or mouse.
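The step of separating the recorded signal into channels that map to muscles can be sketched, under strong simplifying assumptions, as linear unmixing. Assume each electrode records an instantaneous weighted sum of the 14 muscle activations and that the weights (the mixing matrix) are already known from calibration; least squares then recovers the activations. The 16-electrode band, the crosstalk model and the noise level below are hypothetical. Real EMG decomposition has to estimate the mixing blindly and cope with time-delayed (convolutive) mixing, so treat this only as an illustration of the idea.

```python
import numpy as np

N_MUSCLES = 14      # from the text: 14 muscles in the arm
N_ELECTRODES = 16   # hypothetical number of electrodes on the band
N_SAMPLES = 500     # hypothetical number of time samples


def unmix(recordings, mixing):
    """Least-squares estimate of muscle activations S from recordings X = A @ S + noise."""
    activations, *_ = np.linalg.lstsq(mixing, recordings, rcond=None)
    return activations


rng = np.random.default_rng(1)
# Hypothetical calibration: each of the first 14 electrodes sits mostly over one muscle,
# and every electrode also picks up some crosstalk from all of the muscles.
mixing = 0.2 * rng.uniform(0.0, 1.0, size=(N_ELECTRODES, N_MUSCLES))
mixing[:N_MUSCLES] += np.eye(N_MUSCLES)
true_activations = np.abs(rng.standard_normal((N_MUSCLES, N_SAMPLES)))
recordings = mixing @ true_activations + 0.001 * rng.standard_normal((N_ELECTRODES, N_SAMPLES))

estimated = unmix(recordings, mixing)
print("max reconstruction error:", np.abs(estimated - true_activations).max())
```

Having more electrodes than muscles makes the system overdetermined, which is why least squares rather than a plain matrix inverse is used here.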

TR: In Myo control it works for anybody, right out of the box. With Neuro control it adjusts to you. In fact, the model that’s built is custom to you; it wouldn’t work on anybody else, it wouldn’t even work on your twin, because your twin would train it differently. DNA is not determinative of your nervous output. What you have to realize is that we haven’t decoded the brain; there are 15 billion neurons there. What we’ve done is create a very reduced but highly functional piece of hardware that listens to neurons in the spinal cord and gives you feedback that allows you to individually control those neurons.

When you think about the control that you exploit every day, it’s built up of two kinds of things. There’s what we call continuous control: think of that as a joystick, left and right, how much left, how much right. Those are continuous controls. Then we have discrete controls, or symbols: think of that as button pushing or typing. Every single control problem you face, and that’s what your day is filled with, whether taking a sip of water, walking down the street, getting in a car or driving a car, reduces to some combination of continuous control (swiping) and discrete control (button pushing). We have this ability to get you to train these synthetic up, down, left and right dimensions, if you will, that allow you to control things without moving, and then to move beyond the five fingers in your hand and get access to, say, 30 virtual fingers. What does that open up? Well, think about everything you control.
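The split between continuous and discrete control can be sketched in a few lines. Assuming decoded activations have already been normalized to [0, 1] (an assumption; the interview does not describe CTRL-Labs’ decoder), a pair of channels gives a joystick-style axis and a single channel gives a button. The button uses two hypothetical thresholds (hysteresis) so that an activation hovering near one threshold does not toggle it on every sample.

```python
from dataclasses import dataclass
from typing import Optional


def continuous_axis(left: float, right: float) -> float:
    """Joystick-style value in [-1, 1]: how much right minus how much left."""
    return max(-1.0, min(1.0, right - left))


@dataclass
class DiscreteButton:
    """Button driven by one decoded activation, with hysteresis between press and release."""
    press_at: float = 0.6    # hypothetical threshold to register a press
    release_at: float = 0.4  # hypothetical, lower threshold to register a release
    pressed: bool = False

    def update(self, activation: float) -> Optional[str]:
        """Return 'press' or 'release' when the state changes, otherwise None."""
        if not self.pressed and activation >= self.press_at:
            self.pressed = True
            return "press"
        if self.pressed and activation <= self.release_at:
            self.pressed = False
            return "release"
        return None


button = DiscreteButton()
trace = [0.1, 0.5, 0.65, 0.55, 0.62, 0.3, 0.59, 0.7]  # hypothetical decoded activations
events = [button.update(a) for a in trace]
print(events)  # [None, None, 'press', None, None, 'release', None, 'press']
print(continuous_axis(left=0.25, right=0.75))  # 0.5
```

Extending this toward “30 virtual fingers” would, in this simplified picture, just mean one such button or axis per decoded channel.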

AMLG: I’m picturing 30 virtual fingers right now, and I do want to get into VR; there are lots of forms one can take in there. The surprising thing to me in terms of target uses, and there are so many uses you can imagine for this in early populations, was that you didn’t start the company for clinical populations or motor pathologies, right? A lot of people have been working on bionics. I have a handicapped brother, and I’ve been to his school and have seen the kids with all sorts of devices. They’re coming along, and obviously the army has been working on this. But you are not coming at it from that approach?

TR: Correct. We started the company almost ruthlessly focused on eight billion people. The market of eight billion, not the market of a million or 10 million who have motor pathologies. In some sense this is the part that’s informed by my Microsoft time. In the academy, when you’re doing neuroscience research, almost everybody focuses on pathologies: things that break in the nervous system and what we can do to help people work around them. They’ll work on Parkinson’s or Alzheimer’s, or ALS for motor pathologies. What commercial companies get to do is bring new kinds of deep technology to mass markets, which then feed back to clinical communities. By pushing and making this stuff work at scale across eight billion people, the problems that we have to solve will ultimately be the same problems that people who want to bring relief to people with motor pathologies need to solve. If you do it at scale, lots of things fall out that wouldn’t have otherwise fallen out.

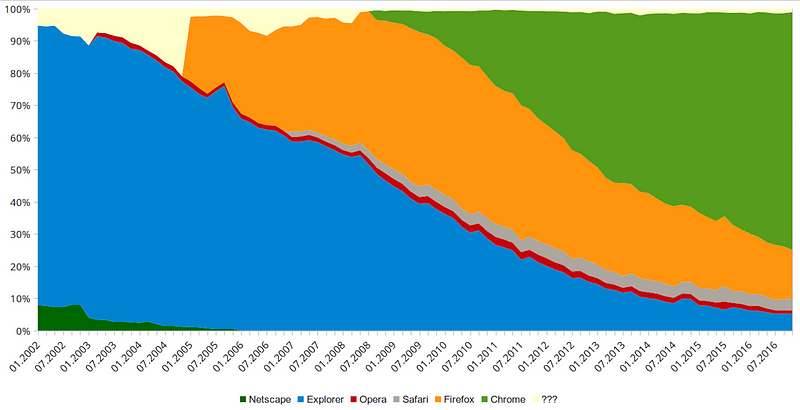

AMLG: It’s fascinating because you’re starting with “we’re gonna go big.” You’ve said you would like your devices, whether sold by you or by partners, to be on a million people within three or four years. A lot of things start in the realm of science but don’t get commercialized on a large scale. When you launched Explorer, at one point it had 95 percent market share, so you’ve touched that many people before —

Internet Explorer browser market share, 2002–2016

TR: Yes, and it’s addictive when you’ve been able to put software into a billion-plus hands. That’s the kind of scale that you want to work on, and that’s the kind of impact that I want to have and the team wants to have.

AMLG: How do you get something like this to that scale?

TR: One user at a time. You pick segments in which there are serious problems to solve, and proximal problems. You’ve talked about VR. We think we solve a key problem in virtual reality, augmented reality and mixed reality, these emerging, immersive computing paradigms. No immersive computing technology so far has won. There is no default. There’s no standard. Nobody’s pointing at anything and saying “oh, I can already see how that’s the one that’s going to win.” It’s not Oculus, it’s not Microsoft HoloLens, it’s not Magic Leap. But the investment is still happening, and we’re now years into this new round of virtual realities. The investment is happening because people still have a hunger for it. We know we want immersive computing to work. What’s not working? It’s kind of obvious. We designed all of these experiences to get data, images and sounds into you: the human input problem. These immersive technologies do breakthrough work to change human input, but so far they’ve done nothing to change human output. That’s where we come in. You can’t have a successful immersive computing platform without solving the human output problem: how do I control this? How do I express my intentions? How do I express language inside of virtual reality? Am I typing or am I not typing?

AMLG: Everyone’s doing the iPad right now. You go into VR and you’re holding a thing that’s mimicking the real world.

TR: What we call skeuomorphic experiences, ones that mimic real life, and that’s terrible. The first developer kits for the Oculus Rift, you know, shipped with an Xbox controller. Oh my god, is that dumb. There’s a myth that the only way to create a new technology is to make sure it has a deep bridge to the past. I call bullshit on that. We’ve been stuck in that model, and it’s one of the diseases of the venture world: “we’re Uber for neurons,” Uber for this or that.

AMLG: Well, ironically, people are afraid to take risks in venture. If you suddenly design a new way of communicating or doing human output, it’s “that’s pretty risky, it should look more like the last thing.”

TR: I’m deeply thankful to the firms that stepped up to fund us, Spark and Matrix and most recently Lux and Google Ventures. We’ve got venture folks who want to look around the bend and make a big bet on a big future.