Facebook is getting guide bots to help tourist bots explore Hell’s Kitchen in a virtual New York City. It’s not just for kicks, either; a new research paper published today by Facebook AI Research (FAIR) examines how AI systems can orient themselves and communicate observed data better than humans can.

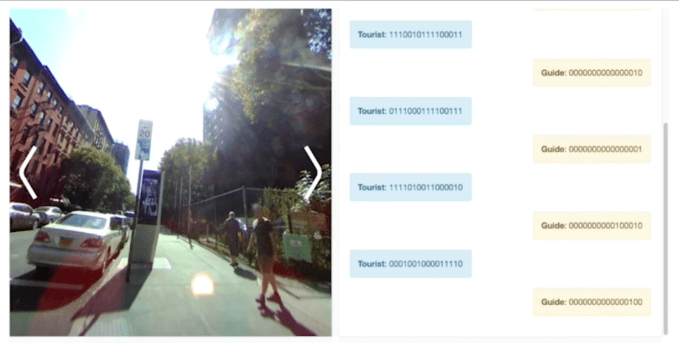

The setup for Facebook’s “Talk the Walk” research experiment involves dropping a “tourist” bot onto a random street corner in NYC and getting a “guide” bot to direct it to a spot on a 2D map. To build it, Facebook captured 360-degree photos of street corners around New York and fed them to the tourist bot, which then had to look around at the behest of the guide agent. The guide would gain a sense of where the tourist was and try to direct it through a text conversation.

It’s indeed quite the novel experiment, which plays out like this in practice:

Guide: Hello, what are you near?

Tourist: Hello, in front of me is a Brooks Brothers

Guide: Is that a shop or restaurant?

Tourist: It is a clothing shop.

Guide: You need to go to the intersection in the northwest corner of the map

Tourist: There appears to be a bank behind me.

Guide: Ok, turn left then go straight up that road

...

Facebook isn’t doing all of this to give you a virtual guide in some unannounced mapping product. This is Facebook AI Research, as opposed to the company’s applied machine learning arm, so this work really resides in the long-term, less product-centric sphere. What this experiment is helping Facebook’s AI researchers approach is a concept called “Embodied AI.”

Embodied AI basically entails giving AI models the ability to learn on the go, gathering data from their surroundings that helps them make sense of what they already know. In “Talk the Walk,” the guide bot had all of the 2D map data and the tourist bot had all of the rich 360-degree visual data, but it was only by communicating with each other that they were able to carry out their directives.
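To make that split of knowledge concrete, here is a toy sketch in Python. It is not Facebook's code or the paper's model: the four-corner grid, the landmark names, and the functions `tourist_observe`, `guide_localize`, and `guide_directions` are all hypothetical, chosen only to show how a guide that sees the map and a tourist that sees the street have to exchange messages to get anywhere.

```python
from typing import Dict, List, Tuple

Pos = Tuple[int, int]  # (x, y) corner on a toy grid; x grows east, y grows north

# Hypothetical ground truth: which landmark sits at each street corner.
WORLD: Dict[Pos, str] = {
    (0, 0): "bank",
    (1, 0): "shop",
    (0, 1): "restaurant",
    (1, 1): "theater",
}

# The guide sees the 2D map but never the tourist's position.
GUIDE_MAP: Dict[Pos, str] = dict(WORLD)


def tourist_observe(true_pos: Pos) -> str:
    """The tourist sees only the landmark at its own corner, not the map."""
    return WORLD[true_pos]


def guide_localize(message: str) -> List[Pos]:
    """The guide lists every map corner consistent with the tourist's message."""
    return sorted(pos for pos, landmark in GUIDE_MAP.items() if landmark == message)


def guide_directions(start: Pos, target: Pos) -> List[str]:
    """Once the guide has a position estimate, it can spell out the moves."""
    dx, dy = target[0] - start[0], target[1] - start[1]
    steps = ["east" if dx > 0 else "west"] * abs(dx)
    steps += ["north" if dy > 0 else "south"] * abs(dy)
    return steps


if __name__ == "__main__":
    message = tourist_observe((1, 0))       # tourist reports what it sees
    candidates = guide_localize(message)    # guide matches it against the map
    print(message, candidates)
    print(guide_directions(candidates[0], (0, 1)))
```

In this toy world every landmark is unique, so a single message pins the tourist down. In a real neighborhood several corners would match the same description, which is why the bots in the experiment need a back-and-forth conversation rather than one report.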

The real goal was to have the two agents gather information through natural language, but the researchers found that the bots did a better job of completing the task when they used “synthetic language,” which relied on simpler symbols to convey information and location. This less natural way of communicating not only outperformed a more human-like chat, it also let the bots find their way more concisely than humans would in a natural language chat.

What made this environment particularly difficult was the fact that it was the real world. The 360-degree snapshots were, of course, much more cluttered than the simulated environments in which a lot of these experiments typically run. Describing such a scene in words is hard enough when two humans are already vaguely familiar with a location; for two bots that have access to different data, it can be awfully difficult to communicate efficiently.

To tackle this, Facebook built a mechanism called MASC (Masked Attention for Spatial Convolutions) that basically enables the agents’ language models to quickly pick out the keywords in a response that are most critical for getting a sense of what’s being conveyed. Facebook said that using the mechanism doubled the accuracy of the results being tested.

For Facebook’s part, this is foundational research in that it seems to raise far more questions about best practices than it seeks to answer, but even grasping at those is an important milestone and a good direction in which to point the broader community as it takes on hard problems that need to be tackled, the company’s researchers say.

“If you really want to solve all of AI, then you probably want to have these different modules or components that solve different subproblems,” Facebook AI research scientist Douwe Kiela told me. “In that sense this is really a challenge to the community asking people how they would solve this and inviting them to work with us in this exciting new research direction.”