A Facebook face recognition notification slip-up hints at how risky the company’s approach to compliance with a tough new European data protection standard could turn out to be.

On Friday a Metro journalist in the UK reported receiving a notification about the company’s face-recognition technology — which told him “the setting is on.”

The wording was curious, as the technology has been switched off in Europe since 2012, after regulatory pressure, and, as part of changes related to its GDPR compliance strategy, Facebook has also said it will be asking European users to choose individually whether or not they want to switch it on. (On Friday it also began rolling out its new consent flow in the region, ahead of the regulation applying next month.)

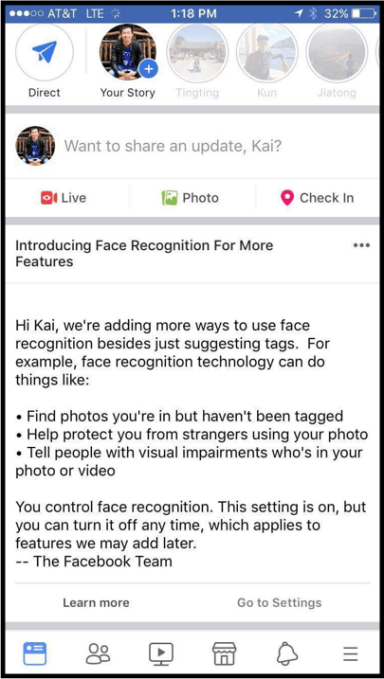

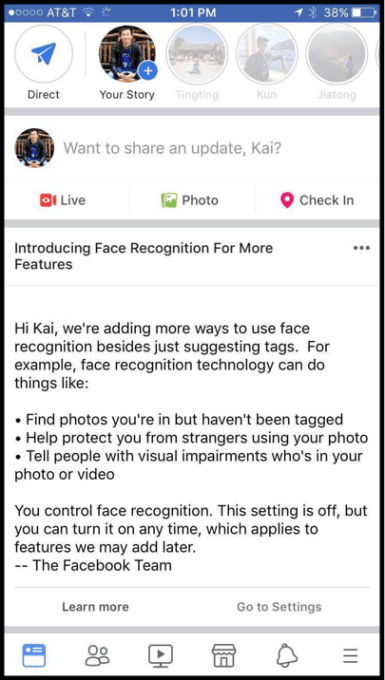

The company has since confirmed to us that the message was sent to the user in error, saying the wording came from an earlier notification which it sent, starting in December, to users who already had its facial recognition tech enabled. It said it had intended to send the person a similar notification carrying the opposite message, i.e. that “the setting is off”.

“We’re asking everyone in the EU whether they want to enable face recognition, and only people who affirmatively give their consent will have these features enabled. We did not intend for anyone in the EU to see this type of message, and we can confirm that this error did not result in face recognition being enabled without the person’s consent,” a Facebook spokesperson told us.

The two notifications in question, pictured above, show the “setting is on” versus the “setting is off” wordings.

This is interesting because Facebook has repeatedly refused to confirm it will be universally applying GDPR compliance measures across its entire global user-base.

Instead it has restricted its public commitments to saying the same “settings and controls” will be made available for users — which as we’ve previously pointed out avoids committing the company to a universal application of GDPR principles, such as privacy by design.

Given that Facebook’s facial recognition feature has been switched off in Europe since 2012, “the setting is on” message would presumably have only been sent to users in the US or Canada, where Facebook has been able to forge ahead with pushing people to accept the controversial, privacy-hostile technology, embedding it into features such as auto-tagging for photo uploads.

But it hardly bodes well for Facebook’s compliance with the EU’s strict new data protection standard if its systems are getting confused about whether or not a user is an EU person.

Facebook claims no data was processed without consent as a result of the wrong notification being sent — but under GDPR it could face investigations by data protection authorities seeking to verify whether or not an individual’s rights were violated. (Reminder: GDPR fines can scale as high as 4% of a company’s global annual turnover so privacy enforcement is at last getting teeth.)

Facebook’s appetite for continuing to push privacy-hostile features on its user-base is clear. This strategic direction also comes from the very top of the company.

Earlier this month CEO and founder Mark Zuckerberg urged US lawmakers not to impede US companies from using people’s data for sensitive use-cases like facial recognition, attempting to gloss that tough sell by claiming pro-privacy rules would risk the US falling behind China.

Meanwhile, last week it also emerged that Zuckerberg’s company will switch the location where most international users’ data is processed from its international HQ, Facebook Ireland, to Facebook USA. From next month only EU users will have their data controller located in the EU — other international users, who would have at least technically fallen under GDPR’s reach otherwise, on account of their data being processed in the region, are being shifted out of the EU jurisdiction — via a unilateral T&Cs change.

This move seems intended to try to shrink some of Facebook’s legal liabilities by reducing the number of international users that would, at least technically, fall under the reach of the EU regulation — which both applies to anyone in the EU whose data is being processed and also extends EU fundamental rights extraterritorially, carrying the aforementioned major penalties for violations.

However Facebook’s decision to reduce how many of its users have their data processed in the EU also looks set to raise the stakes — if, as it appears, the company intends to exploit the lack of a comprehensive privacy framework in the US to apply different standards for North American users (and from next month also for non-EU international users, whose data will be processed there).

The problem is, if Facebook does not perform perfect segregation and management of these two separate pools of users it risks accidentally processing the personal data of Europeans in violation of the strict new EU standard, which applies from May 25.

Yet here it is, on the cusp of the new rules, sending the wrong notification and incorrectly telling an EU user that facial recognition is on.

Given how much risk it’s creating for itself by trying to run double standards for data protection you almost have to wonder whether Facebook is trying to engineer in some compliance wiggle room for itself — i.e. by positioning itself to be able to claim that such and such’s data was processed in error.

Another interesting question is whether the unilateral switching of ~1.5BN non-EU international users to Facebook USA as data controller could be interpreted as a data transfer to a third country — which would trigger other data protection requirements under EU law, and further layer on the legal complexity…

What is clear is that legal challenges to Facebook’s self-serving interpretation of EU law are coming.