Advancements in AI technology have paved the way for breakthroughs in speech recognition, natural language processing and machine translation. A new startup called Voicery now wants to leverage those same advancements to improve speech synthesis, too. The result is a fast, flexible speech engine that sounds more human — and less like a robot. Its machine voices can then be used anywhere a synthesized voice is needed — including in new applications, like automatically generated audiobooks or podcasts, voice-overs, TV dubs and elsewhere.

Before starting Voicery, co-founder Andrew Gibiansky worked at Baidu Research, where he led the deep learning speech synthesis team.

While there, the team developed state-of-the-art techniques in machine learning, published papers on speech constructed from deep neural networks and on artificial speech generation, and commercialized its technology in production-quality systems for Baidu.

Now, Gibiansky is bringing that same skill set to Voicery, where he’s joined by co-founder Bobby Ullman, who previously worked at Palantir on databases and scalable systems.

“In the time that I was at Baidu, what became very evident is that the revolution in deep learning and machine learning was about to happen to speech synthesis,” explains Gibiansky. “In the past five years, we’ve seen that these new techniques have brought amazing gains in computer vision, speech recognition and in other industries, but it hasn’t yet happened with synthesizing human speech. We saw that if we could use this new technology to build speech synthesis engines, we could do it so much better than everything that currently exists.”

Specifically, the company is leveraging newer deep learning technologies to create better synthesized voices more quickly than before.

In fact, the founders built Voicery’s speech synthesis engine in just two and a half months.

Unlike traditional voice synthesizing solutions, where a single person records hours upon hours of speech that’s then used to create the new voice, Voicery trains its system on hundreds of voices at once.

It can also use varying amounts of speech input from any one person. Because it takes in so much data, the system learns the correct pronunciations, inflections and accents from a wider variety of source voices, and sounds more human as a result.

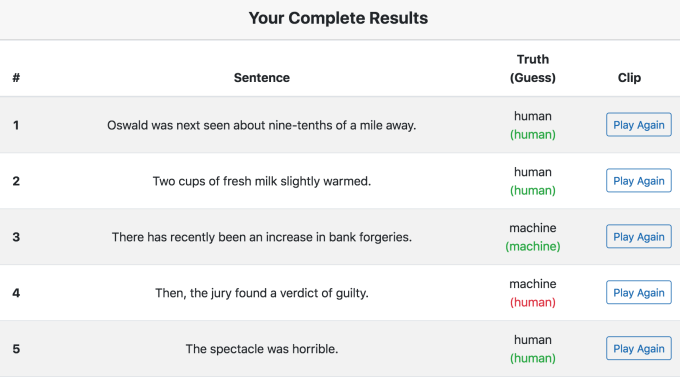

The company claims its voices are nearly indistinguishable from humans — it even published a quiz on its website that asks visitors to see if they can identify which ones are synthesized and which are real. I found that you’re still able to identify the voices as machines, but they’re much better than the machine reader voices you may be used to.

Of course, given the rapid pace of technology development in this field — not to mention the fact that the team built their system in a matter of months — one has to wonder why the major players in voice computing couldn’t just do something similar with their own in-house engineering teams.

However, Gibiansky says that Voicery has the advantage of being first out of the gate with technology that capitalizes on these machine learning advancements.

“None of the currently published research is quite good enough for what we wanted to do, so we had to extend that a fair bit,” he notes. “Now we have several voices that are ready, and we’re starting to find customers to partner with.”

Voicery already has a few customers piloting the technology, but has nothing to announce at this time, as those talks are in various stages.

The company charges customers an upfront fee to develop a new voice, and then a per-usage fee.

The technology can be used where voice systems exist today, like in translation apps, GPS navigation apps, voice assistant apps or screen readers, for example. But the team also sees the potential for it to open up new markets, given the ease of creating synthesized voices that really sound like people. This includes things like synthesizing podcasts, reading the news (think: Alexa’s “Flash Briefing”), TV dub-ins, voices for characters in video games and more.

“We can move into spaces that fundamentally haven’t been using the technology because it hasn’t been high enough quality. And we have some interest from companies that are looking to do this,” says Gibiansky.

Voicery, based in San Francisco, is bootstrapped save for the funding it received by participating in Y Combinator’s Winter 2018 class. It’s looking to raise additional funds after YC’s Demo Day.