Robots are great at doing what they’re told. But telling them what to do is often far more complex than the task we’re asking them to execute. That’s part of the reason they’re best suited for simple, repetitive jobs.

A team of researchers at Brown University and MIT is working to develop a system in which robots can plan tasks by developing abstract concepts of real-world objects and ideas based on motor skills. With this system, the robots can perform complex tasks without getting bogged down in the minutiae required to complete them.

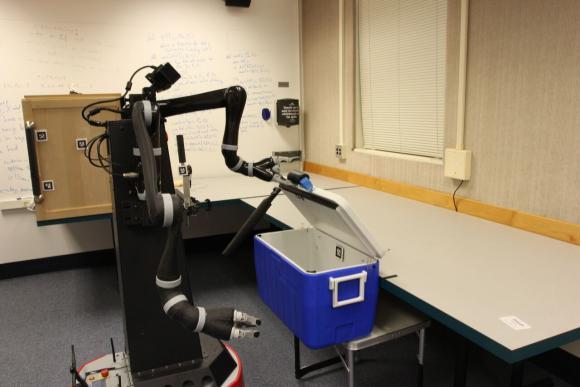

The researchers programmed a two-armed robot (Anathema Device or “Ana”) to manipulate objects in a room: opening and closing a cupboard and a cooler, flipping a light switch and picking up a bottle. While performing the tasks, the robot took in its surroundings and processed the information through algorithms developed by the researchers.

According to the team, the robot was able to learn abstract concepts about the objects and its environment. For example, Ana determined that a door needs to be closed before it can be opened.

“She learned that the light inside the cupboard was so bright that it whited out her sensors,” the researchers wrote in a release announcing their findings. “So in order to manipulate the bottle inside the cupboard, the light had to be off. She also learned that in order to turn the light off, the cupboard door needed to be closed, because the open door blocked her access to the switch.”

Once the information is processed, the robot associates a symbol with each of these abstract concepts. It’s a sort of common language developed between the robot and human that doesn’t require complex coding to execute. This kind of adaptive quality means the robots could become far more capable of performing a greater variety of tasks in more diverse environments by choosing the actions they need to perform in a given scenario.
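To make the idea of planning over symbols concrete, here is a minimal sketch in Python. It is not the researchers' system: Ana learned her constraints from sensor data, whereas here they are written by hand, and every name (`Action`, `plan`, the fact labels) is hypothetical. It encodes the three constraints quoted above as preconditions and runs a plain breadth-first search for a sequence of actions that ends with the bottle in hand.

```python
from collections import deque
from typing import NamedTuple


class Action(NamedTuple):
    name: str
    needs: frozenset    # facts that must be true before acting
    forbids: frozenset  # facts that must be false before acting
    adds: frozenset     # facts that become true afterwards
    removes: frozenset  # facts that become false afterwards


def fs(*facts):
    """Shorthand for building a frozenset of fact labels."""
    return frozenset(facts)


# Hand-written stand-ins for the concepts Ana learned (an assumption).
ACTIONS = [
    # A door has to be closed before it can be opened.
    Action("open_cupboard", fs(), fs("cupboard_open"), fs("cupboard_open"), fs()),
    Action("close_cupboard", fs("cupboard_open"), fs(), fs(), fs("cupboard_open")),
    # The open door blocks access to the switch.
    Action("switch_light_off", fs("light_on"), fs("cupboard_open"), fs(), fs("light_on")),
    # The bright light whites out the sensors, so it must be off.
    Action("pick_up_bottle", fs("cupboard_open"), fs("light_on", "holding_bottle"),
           fs("holding_bottle"), fs()),
]


def plan(start, goal, actions):
    """Breadth-first search over symbolic states; returns action names or None."""
    start, goal = frozenset(start), frozenset(goal)
    queue = deque([(start, [])])
    seen = {start}
    while queue:
        state, steps = queue.popleft()
        if goal <= state:
            return steps
        for action in actions:
            if action.needs <= state and not (action.forbids & state):
                next_state = (state - action.removes) | action.adds
                if next_state not in seen:
                    seen.add(next_state)
                    queue.append((next_state, steps + [action.name]))
    return None


# Start with the cupboard open and the light on; the goal is to hold the bottle.
steps = plan({"cupboard_open", "light_on"}, {"holding_bottle"}, ACTIONS)
print(steps)
```

Given only a goal, the search works out that the cupboard has to be closed, the light switched off and the cupboard reopened before the bottle can be picked up. Nobody has to script that sequence by hand.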

“If we want intelligent robots, we can’t write a program for everything we might want them to do,” George Konidaris, a Brown University assistant professor who led the study, told TechCrunch. “We have to be able to give them goals and have them generate behavior on their own.”

Of course, asking every robot to learn this way is equally inefficient, but the researchers believe they can develop a common language and create skills that could be downloaded to new hardware.

“I think what will happen in the future is there will be skills libraries, and you can download those,” explains Konidaris. “You can say, ‘I want the skill library for working in the kitchen,’ and that will come with the skill library for doing things in the kitchen.”