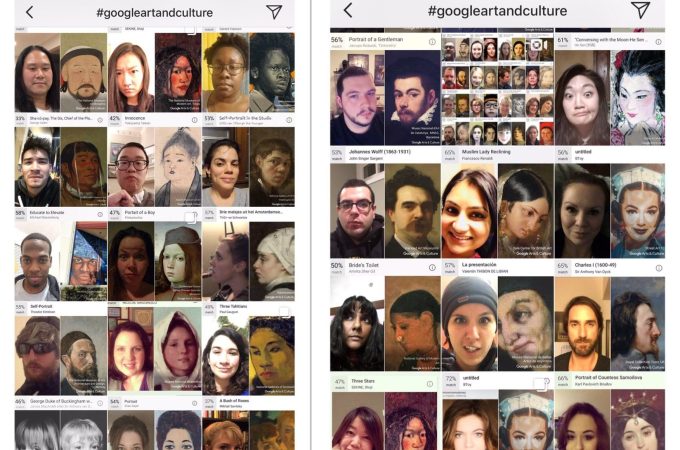

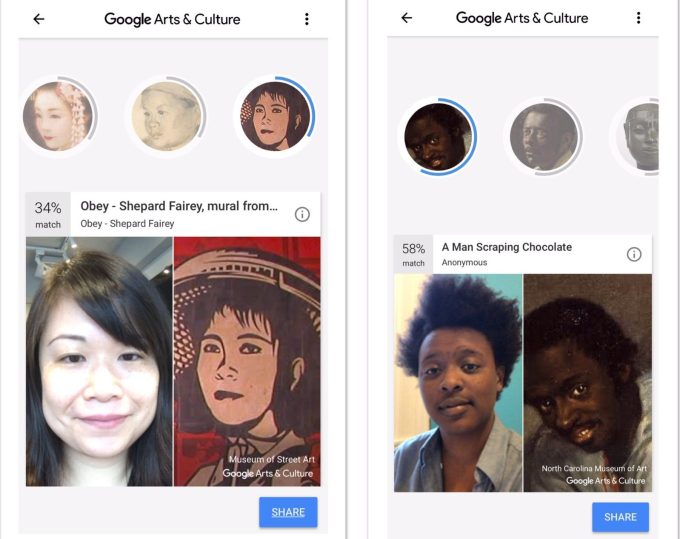

When Google Arts & Culture’s new selfie-matching feature went viral earlier this week, many people of color found that their results were limited or skewed toward subservient and exoticized figures. In other words, it pretty much captured the experience of exploring most American or European art museums as a minority.

The app was launched in 2016 by Google’s Cultural Institute, but the art selfies made it go viral for the first time. The feature is currently available only in parts of the United States (a spokesperson said Google has “no further plans to announce at this time” for other locations), but it still managed to take Google Arts & Culture to the top of the most-downloaded free apps for iOS and Android this week.

The selfie feature shows how technology can make art more engaging, but it is also a reminder of art’s historic biases. It underscores the fact that the art world, like the tech industry, still suffers from a critical lack of diversity, which it must fix in order to ensure its future.

Many people of color discovered that their results seemed to draw from a relatively limited pool of artwork, as Digg News editor Benjamin Goggin noted. Others got matches filled with the stereotypical tropes that white artists often resorted to when depicting people of color: slaves, servants or, in the case of many women, sexualized novelties. A Google spokesperson told TechCrunch that the company is “limited by the images we have on our platform. Historical artworks often don’t reflect the diversity of the world. We are working hard to bring more diverse artworks online.”

The selfie feature’s race problem did not go unnoticed, prompting social media discussions and gaining coverage in Digg, Mashable, BGR, Bustle, BuzzFeed, Hyperallergic, MarketWatch and KQED Arts, among others. (Not surprisingly, the feature also raised many privacy concerns. In an interstitial message displayed before the selfie feature, Google tells users that it won’t store photos and won’t use data from selfies for any purpose other than finding an artwork match.)

Some might dismiss the discussion because Google’s art selfies will soon be replaced by the next viral meme. But memes are the new capital of popular culture—and when many people feel marginalized by a meme, then it demands closer examination.

*usng the @Google Culture and Arts app*

white people: “Wow what beautiful renaissance/impressionist/european painting do I look like?

me: “Wow what racist stereotype of black people do I look like?”— jimmyNUDEtron (@liluzi_girth) January 12, 2018

Who Gets To Decide What Is Art?

Called the Google Art Project when it launched in 2011, Google Arts & Culture was almost immediately hit by charges of Eurocentrism. Most of its original 17 partner museums were located in Washington, D.C., New York City or Western Europe, prompting criticism that its scope was too narrow. Google quickly moved to diversify the project by adding institutions from around the world. The program has since expanded to 1,500 cultural institutions in 70 countries.

Google Arts & Culture’s collections map, however, shows that American and European collections still dominate. It’s clear from its posts that the project is making a concerted effort to showcase diverse artists, art traditions and styles (recent topics included the Raja Ravi Varma Heritage Foundation in Bangalore and Peranakan clothing), but unraveling Eurocentrism means unraveling centuries of bias.

Even now, the management at many American museums doesn’t reflect the country’s demographics. In 2015, the Mellon Foundation released what it said was the first comprehensive survey of diversity in American art museums, which was performed with the help of the Association of Art Museum Directors and the American Alliance of Museums. It found that 84% of management positions at museums were filled by white people. Minorities were also underrepresented in the junior ranks of museum staff, which means institutions need to actively nurture young talent if they want their future leaders, including directors and curators, to be diverse, said the Mellon Foundation.

The art world’s diversity problem is pushed to the forefront when controversies erupt, like the one generated by Dana Schutz’s painting of Emmett Till’s body, which was exhibited at last year’s Whitney Biennial. Many black artists were disturbed by how Schutz, who is white, presented Till’s body, saying that it both trivialized and exploited racist violence against black people. In an interview with NBC News, artist and educator Lisa Whittington blamed the homogeneity of the Whitney Biennial’s leadership.

“Their lack of understanding seep onto the walls of the museum, into the minds of viewers and into the society,” said Whittington. “There should have been more guidance and more thought in the direction of the selections chosen for the Whitney Biennial and there would have been African American curators and advisors included instead of an all white and all Asian curatorial staff to ‘speak’ for African Americans.”

Progress has been frustratingly slow. There are now more female than male students in art schools, but exhibitions of contemporary art are still overwhelmingly dominated by male artists. The decline in arts education since No Child Left Behind was signed into law in 2002 has disproportionately affected minority students. And it was only within the past few years that the College Board reworked the Advanced Placement art history course to address the lack of diversity in its syllabus; even so, about 65% of the artwork used in the course is “still within the Western tradition,” according to The Atlantic.

Meanwhile, a report issued last year by the American Alliance of Museums found that not only are museum boards “tipped to white, older males—more so than at other nonprofit organizations,” they have also not taken enough action to become more inclusive.

Algorithms Aren’t Colorblind

The lack of diversity reflected in art museums creeps into our definitions of art and culture and, ultimately, of whose experiences matter enough to be preserved. Those definitions are reinforced every time a person of color walks into a museum and realizes that the few paintings of people who look like them depict tired stereotypes. While well-intentioned, Google’s art selfie feature had the same impact on many people of color.

Algorithms don’t protect us from our biases. Instead, they absorb, amplify and propagate them, while creating the illusion that technology is sheltered from human prejudices. Facial recognition algorithms have already demonstrated their ability to cause harm, such as when two black users of Google Photos discovered that it labelled their photos with a “gorilla” tag (Google apologized for the error and blocked the image categories “gorilla,” “chimp,” “chimpanzee” and “monkey” from the app).

Algorithms are only as good as their benchmark datasets, and those datasets reflect their creators’ biases (conscious or not). This issue is being studied and documented by researchers including MIT graduate student Joy Buolamwini, who founded the Algorithmic Justice League to prevent bias from being coded into software. Such bias has unsettling implications for wide-scale racial profiling and civil rights violations. In a TED talk last year, Buolamwini, who is black, recounted how some robots with computer vision did a better job of detecting her when she wore a white mask.

“There is an assumption that if you do well on the benchmarks then you’re doing well overall,” Buolamwini told The Guardian last May. “But we haven’t questioned the representativeness of the benchmarks, so if we do well on that benchmark we give ourselves a false notion of progress.”

The biases making their way into facial recognition algorithms echo the development of color film. In the 1950s, Kodak began sending cards depicting female models to photo labs to help them calibrate skin tones during processing. All of the models were nicknamed Shirley, after the first studio model used, and for decades, all of them were white. This meant that images of black people often came out over- or under-developed. In an essay for BuzzFeed, writer and photographer Syreeta McFadden described how those photos fed into racist perceptions of black people: “Our teeth and our eyes shimmer through the image, which in its turn become appropriated to imply this is how black people are, mimicked to fit some racialized nightmare that erases our humanity.”

Companies like Google now have an unprecedented opportunity to challenge racism and myopic thinking because their technology and the products built on it can transcend the limitations of geography, language and culture in a way that no other medium has been able to. Google Arts & Culture selfies have the potential to be more than a silly meme, but only if the feature openly acknowledges its limitations, which means confronting biases in art history, collection and curation more directly and perhaps educating its users about them.

For many people of color, the feature served as yet another reminder of how they have been marginalized and excluded. More than a meme or an app engagement tool, Google’s art selfies are an opportunity to look at who gets to define what culture is. Art is one of the ways by which cultures create their collective narratives, and everyone loses out when only a narrow slice of experiences is valued.