Toyota is very invested in love. The automaker has a central philosophy of making vehicles that inspire ‘Aisha,’ a concept that literally means “beloved car” in Japanese. But the nature of ‘Aisha’ is changing, necessarily, just as the nature of automobiles themselves is fundamentally changing as we usher in automated and semi-autonomous driving.

The key to making ‘Aisha’ work in this new era, Toyota believes, lies in using artificial intelligence to broaden its definition, and to transform cars from something that people are merely interested in and passionate about, into something that people can actually bond with – and even come to think of as a partner.

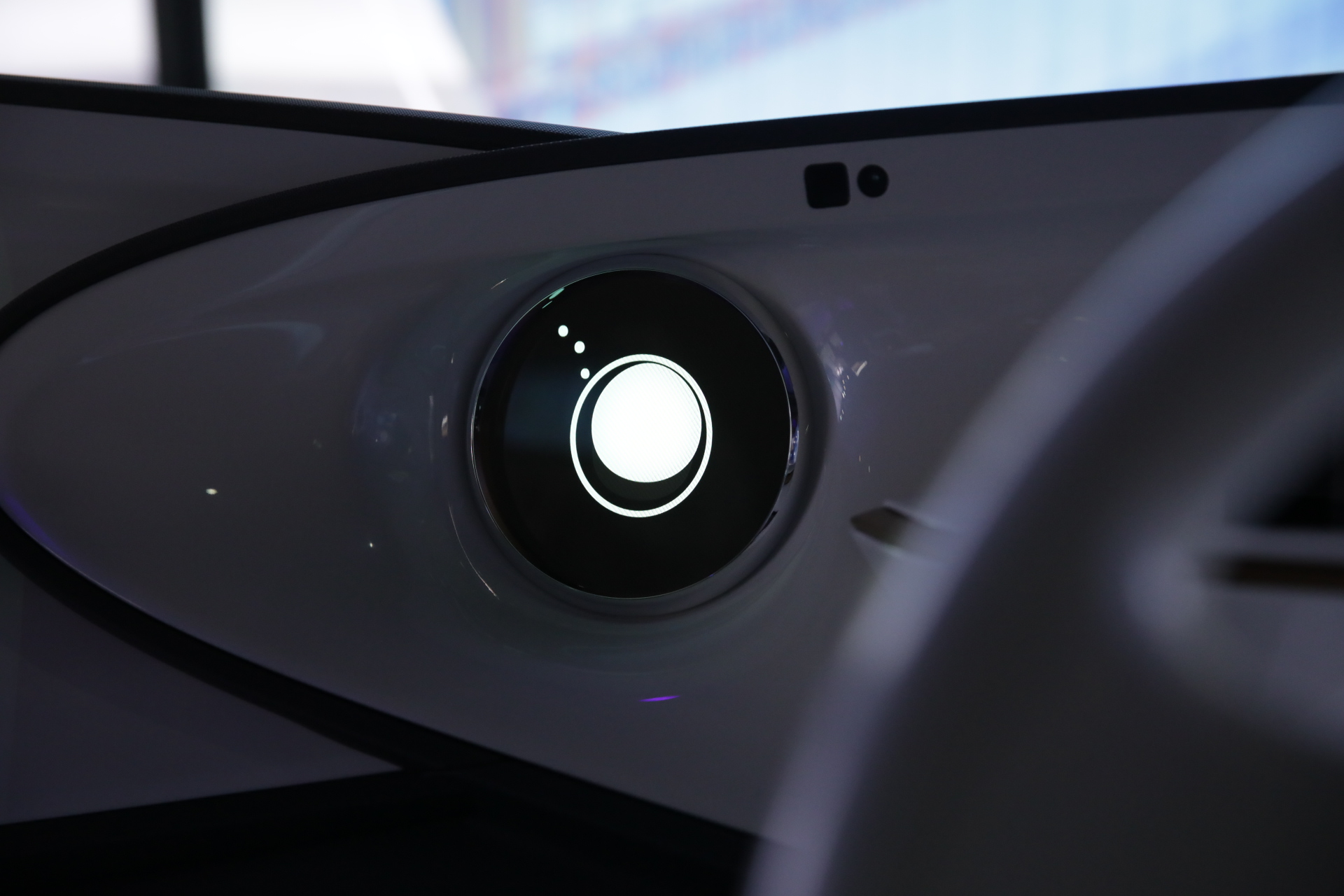

To create a bond between a person and a car that’s more than just skin (or topcoat) deep, Toyota believes the key is a combination of three things: learning about and understanding drivers, automated driving, and an AI agent that’s more companion than virtual assistant. That’s why it created ‘Yui,’ the virtual copilot it has built into all of its Concept-i vehicles, including the Walk and Ride, both of which debuted at this week’s 2017 Tokyo Motor Show.

Toyota’s using deep learning to help make this work, evaluating user attentiveness and emotional states, based on observed body language, tone of voice and other forms of expression. It’s also mining user preferences based on signals obtained from social networks including Facebook and Twitter, as well as location data from GPS and previous trips.

The goal is to combine this information to help its Yui assistant anticipate the needs of a driver, ensure their safety, and maximize their happiness with routes and destinations that fit their mood and personal preferences.

Using technology created by partner SRI International, Toyota is doing this by assessing a driver’s emotional state and classifying it as neutral, happy, irritated, nervous or tired. Based on which of these states it detects in a driver, it’ll offer different courses of action or destination suggestions, and it can evaluate the driver’s response – even doing things like detecting momentary lapses in put-on emotional facades, such as feigned happiness.

Yui will then try to guide a driver back to a preferred state, and it can employ various types of feedback to do so, including different sights (cabin lights, for instance), sounds (piped through the vehicle’s stereo), touch (warmth via the steering wheel, perhaps) and even smell using scent emitters.
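To make that loop a little more concrete, here is a minimal sketch of how a detected state might be mapped to feedback across those four channels. This is purely illustrative and not Toyota’s or SRI International’s implementation: the five states and the four channels come from the description above, but the names `DriverState`, `FEEDBACK` and `suggest_feedback`, and the specific pairings of state to feedback, are assumptions made up for the example.

```python
from enum import Enum
from typing import Dict, List, Tuple


class DriverState(Enum):
    """The five driver states named in the article."""
    NEUTRAL = "neutral"
    HAPPY = "happy"
    IRRITATED = "irritated"
    NERVOUS = "nervous"
    TIRED = "tired"


# Hypothetical pairings of state -> (channel, action). The channels
# (sight, sound, touch, smell) are from the article; which action is
# used for which state is an assumption for illustration only.
FEEDBACK: Dict[DriverState, List[Tuple[str, str]]] = {
    DriverState.NEUTRAL: [],
    DriverState.HAPPY: [],
    DriverState.IRRITATED: [("sound", "calming audio"), ("sight", "soft cabin lights")],
    DriverState.NERVOUS: [("touch", "steering wheel warmth"), ("sound", "calming audio")],
    DriverState.TIRED: [("sight", "bright cabin lights"), ("smell", "scent emitter")],
}


def suggest_feedback(state: DriverState) -> List[Tuple[str, str]]:
    """Return a copy of the feedback actions assumed for a detected state."""
    return list(FEEDBACK[state])


if __name__ == "__main__":
    for channel, action in suggest_feedback(DriverState.TIRED):
        print(f"{channel}: {action}")
```

A real system would presumably also weigh how the driver responds to each nudge, as described above, rather than relying on a fixed lookup table.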

This isn’t just about making sure to wake up a sleepy driver if they’re in danger of nodding off – though it can do that, too. Toyota wants its agent to be able to combine information gathered about a user from social sources, with emotion recognition, to suggest topics for discussion and enter into free discussions with the user in a distraction-free manner, all with the end goal of building a bond between user and car.

A car is largely a symbol – up until now, it’s often been a conduit to freedom: a means of escape, of exploration, or of getting you where you need to go, under your own power. In the future, it’s bound to become something different when we have autonomous vehicles readily available.

Dealing with virtual assistants today can often be a source of frustration (ahem, Siri), but Toyota thinks they’ll one day be the key to unlocking a new type of bond between human and machine: the carmaker thinks that the best way to keep us loving our cars in this future is to make it seem like they love us back.

Disclaimer: Toyota provided accommodations and travel for this trip to the Tokyo Motor Show.