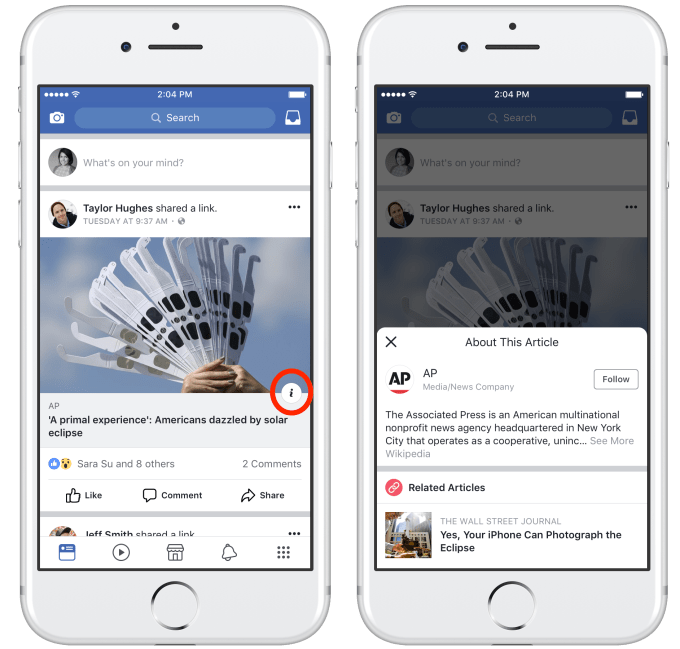

Facebook thinks showing Wikipedia entries about publishers and additional Related Articles will give users more context about the links they see. So today it’s beginning a test of a new “i” button on News Feed links that opens up an informational panel. “People have told us that they want more information about what they’re reading,” Facebook product manager Sara Su tells TechCrunch. “They want better tools to help them understand if an article is from a publisher they trust and evaluate if the story itself is credible.”

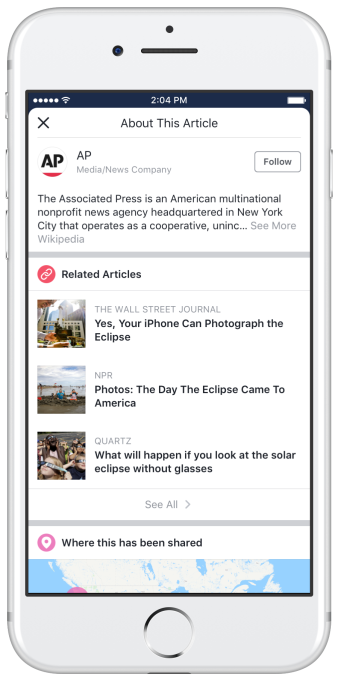

This box will display the start of a Wikipedia entry about the publisher and a link to the full profile, which could help people know if it’s a reputable, long-standing source of news…or a newly set up partisan or satire site. It will also display info from the publisher’s Facebook Page even if that’s not who posted the link, data on how the link is being shared on Facebook, and a button to follow the news outlet’s Page. If no Wikipedia page is available, that info will be missing, which could also provide a clue to readers that the publisher may not be legitimate.

Meanwhile, the button will also unveil Related Articles on all links where Facebook can generate them, rather than only if the article is popular or suspected of being fake news as Facebook had previously tested. Trending information could also appear if the article is part of a Trending topic. Together, this could show people alternate takes on the same news bite, which might dispute the original article or provide more perspective. Previously Facebook only showed Related Articles occasionally and immediately revealed them on links without an extra click.

More Context, More Complex

The changes are part of Facebook’s big, ongoing initiative to improve content integrity

Of course, whenever Facebook shows more information, it creates more potential vectors for misinformation. “This work reflects feedback from our community, including publishers who collaborated on the feature development as part of the Facebook Journalism Project,” says Su.

When asked about the risk that the Wikipedia entries being pulled in could have been doctored with false information, a Facebook spokesperson told me, “Vandalism on Wikipedia is a rare and unfortunate event that is usually resolved quickly. We count on Wikipedia to quickly resolve such situations and refer you to them for information about their policies and programs that address vandalism.”

And to avoid distributing fake news, Facebook says Related Articles will “be about the same topic — and will be from a wide variety of publishers that regularly publish news content on Facebook that get high engagement with our community.”

“As we continue the test, we’ll continue listening to people’s feedback to understand what types of information are most useful and explore ways to extend the feature,” Su tells TechCrunch. “We will apply what we learn from the test to improve the experience people have on Facebook, advance news literacy, and support an informed community.” Facebook doesn’t expect the changes to significantly impact the reach of Pages, though publishers that knowingly distribute fake news could see fewer clicks if the Info button repels readers by debunking the articles.

Getting this right is especially important after the fiasco this week when Facebook’s Safety Check for the tragic Las Vegas mass shooting pointed people to fake news. If Facebook can’t improve trust in what’s shown in the News Feed, people might click all its links less. That could hurt innocent news publishers, as well as reduce clicks on Facebook’s ads.

Image: Bryce Durbin/TechCrunch

Facebook initially downplayed the issue of fake news after the U.S. presidential election where it was criticized for allowing pro-Trump hoaxes to proliferate. But since then, the company and Mark Zuckerberg have changed their tunes.

The company has attacked fake news from all angles, using AI to seek out and downrank it in the News Feed, working with third-party fact checkers to flag suspicious articles, helping users more easily report hoaxes, detecting news sites filled with low-quality ads, and deleting accounts suspected of spamming the feed with crap.

Facebook’s rapid iteration in its fight against fake news shows its ability to react well when its problems are thrust into the spotlight. But these changes have only come after the damage was done during our election, and now Facebook faces congressional scrutiny and widespread backlash, and is trying to self-regulate before the government steps in.

The company needs to more proactively anticipate sources of disinformation if it’s going to keep up in this cat-and-mouse game against trolls, election interferers, and clickbait publishers.