Can Alexa help shoppers in large retail stores, where store associates are spread out and often hard to find? That’s the premise behind “Alexa Shop Assist,” a hack presented today at the TechCrunch Disrupt 2017 hackathon in San Francisco.

According to co-creator Lawrence Chang, the idea was prompted by real-world experiences that he and fellow team members – James Xu, Dinesh Thangavel, and Justin Tai – have had while out shopping.

“Sometimes it’s hard to find someone,” Chang said of trying to get help at a large retail store, like Home Depot. And when you do, they’re not always the right person to help you with your question. They may have to refer you to another store staffer for a different department, which takes even more time.

“But think about Alexa – there’s this whole database,” he said of the technology at the system’s disposal.

Though the hack itself – by its nature – is not a finished product, the idea is fairly innovative.

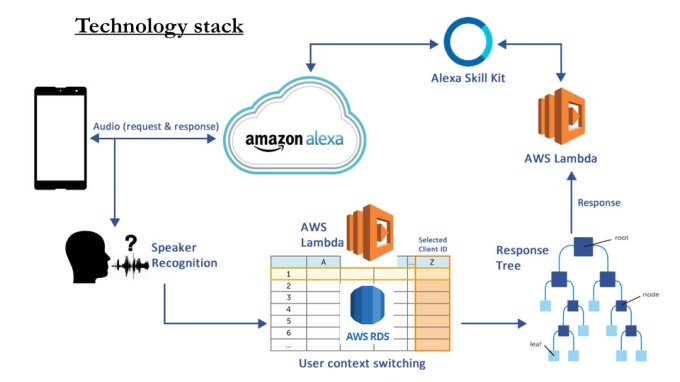

The system uses two AWS Lambda functions, speaker recognition, and a context-switched response tree. It is all tied together through an iOS app that uses the Alexa Skills Kit and the Alexa Voice Service.

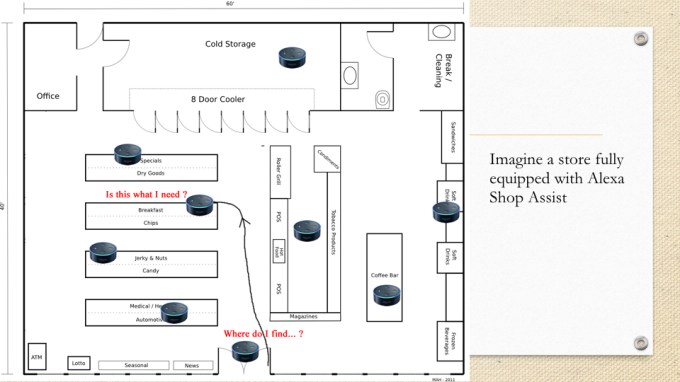

If implemented in a retail environment, the store would place Alexa-powered devices throughout its floor. These wouldn’t necessarily have to be Echo devices, since the Alexa Voice Service is designed to be built into third-party hardware.

In practice, what this means for shoppers in the store is that they could go up to one of these “virtual store assistants” and ask for help.

For example, in the demo, the team showed Alexa responding to the question: “Ask Shop Assist where can I find engine oil for my Prius.”

Alexa answered, “Engine oil for Prius is in Aisle 5.”

This part of the system would simply build on technology already found in major retailers’ apps, which today map live store inventory to specific locations in the store. But instead of using an app’s search function, shoppers could just use their voice.

What’s really clever, though, is how the system can then follow that shopper throughout the store visit without collecting personally identifiable information about who the virtual assistant is helping.

To do so, it identifies characteristics of the speaker’s voice, which are then mapped to a unique ID. This means the shopper can speak to Alexa again later in the same shopping session, and the assistant can recognize them and answer follow-up questions more easily.
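To make the idea concrete, here is a minimal sketch of how voice characteristics could be mapped to an anonymous ID. It assumes a speaker-recognition model (not shown, and hypothetical here) that turns each utterance into a numeric "voice embedding"; the `AnonymousSpeakerRegistry` class, the cosine-similarity matching, and the 0.85 threshold are illustrative assumptions, not the team's actual code. A new shopper gets a random ID, so the ID itself says nothing about who they are.

```python
import math
import uuid
from typing import Dict, List, Optional

# Hypothetical similarity threshold for treating two voices as the same shopper.
MATCH_THRESHOLD = 0.85


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class AnonymousSpeakerRegistry:
    """Maps voice embeddings to opaque IDs; stores no names or account data."""

    def __init__(self, threshold: float = MATCH_THRESHOLD) -> None:
        self.threshold = threshold
        self._profiles: Dict[str, List[float]] = {}

    def identify(self, embedding: List[float]) -> str:
        """Return the ID of the closest known voice, or mint a new random ID."""
        best_id: Optional[str] = None
        best_score = -1.0
        for speaker_id, stored in self._profiles.items():
            score = cosine_similarity(embedding, stored)
            if score > best_score:
                best_id, best_score = speaker_id, score
        if best_id is not None and best_score >= self.threshold:
            return best_id
        new_id = uuid.uuid4().hex  # random, so it reveals nothing about the shopper
        self._profiles[new_id] = embedding
        return new_id


if __name__ == "__main__":
    registry = AnonymousSpeakerRegistry()
    first = registry.identify([0.9, 0.1, 0.3])
    again = registry.identify([0.88, 0.12, 0.31])  # same voice, slightly different utterance
    other = registry.identify([0.1, 0.9, 0.2])     # a different shopper
    print(first == again, first == other)
```

In this sketch, the second utterance lands close enough to the first to reuse its ID, while the third voice is dissimilar and gets a fresh one. A real system would also need to expire IDs at the end of a shopping session.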

To continue the same example, the shopper looking for engine oil for their Prius might later ask, “Alexa, ask Shop Assist which one should I buy?” while standing in the engine oil aisle, and then receive a specific recommendation.
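A follow-up like “which one should I buy?” only makes sense if the assistant remembers the shopper’s earlier topic. The sketch below shows one simple way to carry that context between turns, keyed by an anonymous speaker ID like the one described above. The `CATALOG` data, the `FindProduct` and `Recommend` intent names, and the `ShopAssist` class are all hypothetical stand-ins; this is a small per-session state lookup, not the team’s actual context-switched response tree.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional

# Hypothetical catalog; a real deployment would query the retailer's inventory system.
CATALOG = {
    "engine oil": {"aisle": 5, "top_pick": "Example Brand full synthetic"},
}


@dataclass
class SessionContext:
    """Per-shopper conversation state, keyed by an anonymous speaker ID."""
    last_product: Optional[str] = None


@dataclass
class ShopAssist:
    sessions: Dict[str, SessionContext] = field(default_factory=dict)

    def handle(self, speaker_id: str, intent: str, product: Optional[str] = None) -> str:
        """Answer one request, using and updating this speaker's stored context."""
        ctx = self.sessions.setdefault(speaker_id, SessionContext())
        if intent == "FindProduct" and product in CATALOG:
            ctx.last_product = product  # remember the topic for follow-up questions
            return f"{product.capitalize()} is in Aisle {CATALOG[product]['aisle']}."
        if intent == "Recommend":
            # "Which one?" has no product, so fall back to the remembered topic.
            topic = product or ctx.last_product
            if topic in CATALOG:
                return f"For {topic}, I'd suggest {CATALOG[topic]['top_pick']}."
            return "Which product are you asking about?"
        return "Sorry, I can't help with that yet."
```

Calling `handle("abc", "FindProduct", "engine oil")` and then `handle("abc", "Recommend")` yields an aisle answer followed by a recommendation for engine oil, while a different speaker ID asking the same follow-up gets a clarifying question instead.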

These answers could come from product information already on file – the item’s attributes in a database, or an online product description, among other things.

In that sense, Alexa wouldn’t just replace the “aisle finder” function of a retail store’s shopping app; she could actually answer the basic questions you might otherwise ask a store employee. That’s not to say the overall idea here is to replace store staff with technology (though that is something Amazon is experimenting with today in its cashier-free concept store, Amazon Go).

But it could help stores that are understaffed, or where staff are tasked with a number of other roles, like restocking inventory or manning multiple departments at once. After all, it’s rare these days to find a store employee just standing in an aisle, ready to answer shoppers’ questions.

We asked the team, all of whom work at Nvidia by day, whether they plan to keep working on the project after the hackathon wraps. Unfortunately, for now, the answer is “no.”

But it wouldn’t be surprising if Amazon was thinking about something similar already – especially considering its move into physical retail by way of its Whole Foods acquisition.