While many expected Apple to push AR capabilities as the hallmark features for newly introduced iDevices, in reality, ARKit was largely a footnote in today’s Apple event as the company focused the bulk of attention on features like Face ID and Animoji.

The most notable ARKit announcement was that Apple will be bringing face-tracking support to the AR platform on iPhone X, giving devs access to color and depth images from the front-facing cameras while tracking face position and a number of expressions in real time.

In its latest earnings call, Apple CEO Tim Cook called AR “big and profound” while referencing all the developer excitement in building ARKit experiences. Yet the lone dedicated onstage AR demo at today’s iPhone event highlighted just one tabletop gaming title from Directive Games. The title, which let users physically pan their iPhone across a digital battlefield, resembled existing VR gaming titles on the market, albeit anchored in the real world.

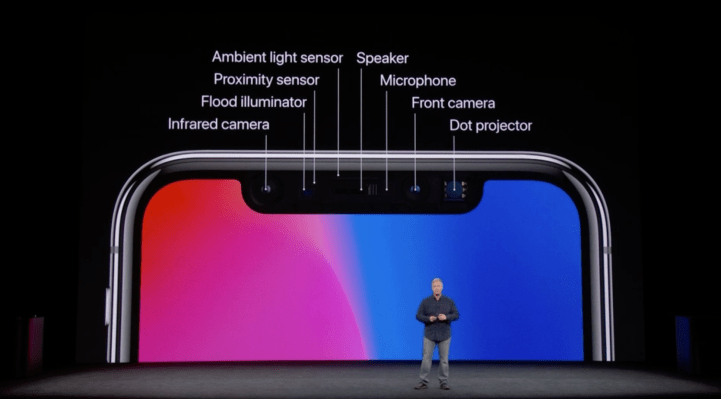

Apple touted the new back cameras on the iPhone X and iPhone 8 as “calibrated for AR,” something undoubtedly true of any camera running ARKit, but the only mentions of model-specific AR features were confined to the redesigned front-facing camera tracking on the iPhone X. That tracking relies on the new camera array, which includes an infrared camera, flood illuminator and dot projector. The company demoed some Snapchat selfie filters that tracked well, but perhaps not noticeably better than the existing RGB camera face-tracking in the app. The differences will be much more noticeable for companies that haven’t invested millions in RGB face-tracking tech like Snap has.

Apple’s reasoning for not bringing some of these sensors to the back camera, where they could certainly benefit AR capabilities, is more than likely battery related, but it could also be part of an effort to keep ARKit experiences fairly uniform across supported devices. The TrueDepth camera system likely could have brought something like an environment mesh to the platform, so that the iPhone could detect not only planar surfaces but also more complex shapes. Sticking with plane detection across the board will allow developers to build one-size-fits-all experiences for ARKit, while users will be able to engage in the same multiplayer experiences.

We’ll hopefully hear more details about how exactly the A11 chip has been optimized for AR, but details were scant during the presentation.

Last month, Google took the wraps off ARCore, a more bare-bones version of Tango, the smartphone AR platform it first showed off in 2014. ARCore drops Tango’s stringent hardware requirements in the hope of getting the platform onto more devices. In preview, the platform will support the Pixel and Samsung Galaxy S8; at wide launch, Google claims ARCore will be on 100 million devices. Scale will be ARKit’s main advantage, with the platform launching on 400-500 million devices when iOS 11 goes live.