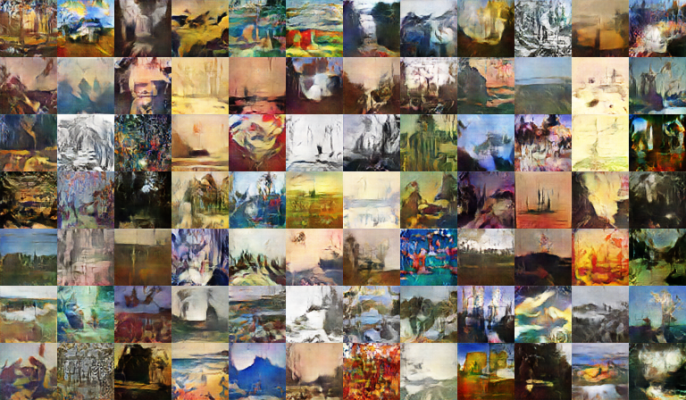

I can’t tell you for sure if we have reached peak GAN, but there are far more people messing around with them than there were a year ago, and that’s a great thing. Two undergraduates at Williams College taught themselves introductory machine learning and read about 50 papers on the now almost mainstream Generative Adversarial Network (GAN) before embarking on a project with a professor to build one that could generate art — and within a year they had basically done it.

Kenny Jones and Derrick Bonafilia, both computer science students, stumbled across Martin Arjovsky’s Wasserstein GAN (WGAN) earlier this year. WGAN, a more stable cousin of the basic GAN, turned out to be a great tool for both learning artistic style and generating new art.

GANs traditionally pit two networks, a generator and a discriminator, against each other in an adversarial relationship. The generator tries to create an artificial image that tricks the discriminator into thinking it is real. Meanwhile, the discriminator tries to reject as many fake images as possible.
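For readers who want to see the tug-of-war in miniature, here is a sketch of the standard GAN losses. It assumes the discriminator outputs a probability in (0, 1) that an image is real, uses plain Python floats in place of a real model, and uses the common "non-saturating" generator loss; none of this is taken from the students’ code.

```python
import math

# Minimal sketch of the standard (non-Wasserstein) GAN losses.
# Assumption: d_real / d_fake are discriminator outputs, i.e. probabilities in (0, 1)
# that each image is real. Plain floats stand in for a real neural network.

def discriminator_loss(d_real: list[float], d_fake: list[float]) -> float:
    """Binary cross-entropy: reward high scores on real images, low scores on fakes."""
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    # The discriminator maximizes real_term + fake_term, so we minimize the negative.
    return -(real_term + fake_term)

def generator_loss(d_fake: list[float]) -> float:
    """Non-saturating generator loss: push the discriminator's score on fakes toward 1."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)

# A discriminator that spots the fakes has a lower loss than one that is fooled.
confident_d = discriminator_loss([0.9, 0.95], [0.05, 0.1])
fooled_d = discriminator_loss([0.9, 0.95], [0.9, 0.95])
print(confident_d < fooled_d)  # True
# Conversely, the generator's loss drops when its fakes are scored as real.
print(generator_loss([0.9, 0.95]) < generator_loss([0.05, 0.1]))  # True
```

Each side's gain is the other's loss, which is exactly the adversarial relationship described above.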

Generator versus discriminator

Unfortunately, GANs are notoriously unstable to train, and that instability hurts results in whatever domain they are applied to. The Wasserstein modifications, however, made training stable enough to produce recognizable art. The team used the WikiArt database of 100,000 labeled paintings as training material.
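What changes in a Wasserstein GAN? Roughly, the discriminator becomes a "critic" that outputs an unbounded score instead of a probability, and the gap between its average scores on real and fake images serves as an estimate of the distance between the two distributions. The sketch below follows the original WGAN paper, which keeps the critic well-behaved by clipping its weights; later variants swap clipping for a gradient penalty, and the article doesn’t say which one the students used. The flat `weights` list and `c=0.01` default are illustrative assumptions.

```python
# Minimal sketch of the original Wasserstein GAN objective.
# Assumptions: the critic returns unbounded real-valued scores (no sigmoid), and
# "weights" is a flat list of floats standing in for the critic's parameters.

def critic_loss(real_scores, fake_scores):
    """Critic maximizes mean(real) - mean(fake); return the negative so it can be minimized."""
    mean_real = sum(real_scores) / len(real_scores)
    mean_fake = sum(fake_scores) / len(fake_scores)
    return mean_fake - mean_real

def generator_loss(fake_scores):
    """Generator tries to raise the critic's average score on its samples."""
    return -sum(fake_scores) / len(fake_scores)

def clip_weights(weights, c=0.01):
    """Original WGAN constrains the critic by clamping every weight to [-c, c]."""
    return [max(-c, min(c, w)) for w in weights]

print(critic_loss([2.0, 4.0], [-1.0, 1.0]))  # -3.0
print(clip_weights([0.5, -0.02, 0.003]))     # [0.01, -0.01, 0.003]
```

Because the critic’s score keeps giving a useful signal even when it easily separates real from fake, the generator is less likely to stall, which is the stability gain the students relied on.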

To improve the model’s performance, the team added an extra component to the traditional discriminator that predicts each painting’s genre. And to keep the model from focusing too heavily on whether generated images are “real” or “fake,” the team used pretraining and added global conditioning. This let the discriminator retain an understanding of the differences between styles of art.
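A rough picture of those two additions: the critic gets a second output, a set of genre logits trained with cross-entropy, and the generator receives a genre label alongside its random noise. This is a hypothetical sketch, not the GANGogh implementation. `NUM_GENRES`, `class_weight`, and the function names are illustrative, and simply concatenating a one-hot genre vector to the noise is a simplified stand-in for global conditioning, which can also inject the label into every layer.

```python
import math
import random

# Hypothetical sketch: a critic with two outputs (realness score + genre logits)
# and a generator input conditioned on a one-hot genre vector. All names and
# values here are illustrative assumptions, not taken from the students' code.

NUM_GENRES = 4  # hypothetical; a real model would use WikiArt's genre labels

def one_hot(genre, num_genres=NUM_GENRES):
    return [1.0 if i == genre else 0.0 for i in range(num_genres)]

def conditioned_input(noise, genre):
    """Simplified global conditioning: attach the genre label to the whole noise vector."""
    return noise + one_hot(genre)

def cross_entropy(logits, label):
    """Numerically stable softmax cross-entropy for the auxiliary genre head."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(x - m) for x in logits))
    return log_sum - logits[label]

def critic_loss_with_genre(real_score, fake_score, genre_logits, genre, class_weight=1.0):
    """Wasserstein term plus a penalty for misclassifying the genre of a real painting."""
    wasserstein = fake_score - real_score
    return wasserstein + class_weight * cross_entropy(genre_logits, genre)

z = [random.gauss(0.0, 1.0) for _ in range(8)]
g_in = conditioned_input(z, genre=2)
print(len(g_in))                                         # 12
print(round(cross_entropy([0.0, 0.0, 0.0, 0.0], 1), 4))  # 1.3863
```

The genre term forces the critic to keep learning what separates, say, landscapes from portraits, rather than only whether an image looks real.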

“A crucial issue is measuring success,” said Jones. “There aren’t many universal metrics. It’s not just a problem with art but it’s especially poignant with art.”

Broadly speaking, the work was a success, and both Jones and Bonafilia plan to start as software engineers at Facebook next fall. Bonafilia told me that, going forward, the project could benefit from additional computational resources, like those they would have access to at Facebook.


Art has become a popular outlet for work in machine learning; Jones tells me he thinks this is because it’s visual and relatable. Facebook drew attention last fall with its real-time style transfer models running on mobile devices. Unlike the students’ GANGogh work, style transfer modifies an existing image or video stream rather than producing entirely new content.

Style transfer has found application in cinema — actress Kristen Stewart co-authored a paper back in January on the applications of machine learning to her short film Come Swim. It’s probably too early to expect sections in our modern art museums to be reserved for novel artwork generated by machines, but anything is possible in the future — you heard it here first.