Goodyear is thinking ahead to how tires – yes, tires – might change as autonomous driving technology alters vehicle design, and as available technologies like in-vehicle and embedded machine learning and AI make it possible to do more with parts of the car that were previously pretty static, like its wheels.

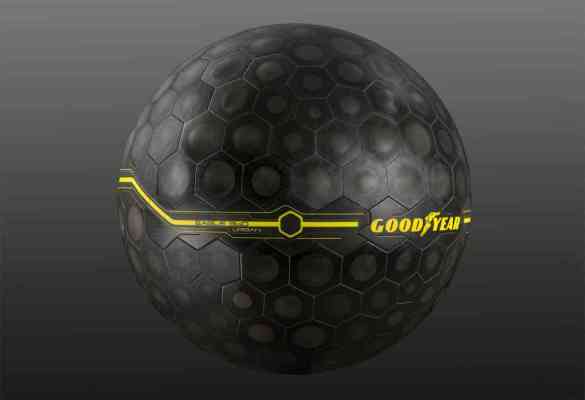

Its new Eagle 360 Urban tire concept design builds on the work it revealed last year with its Eagle 360 concept tire, which re-imagined the tire as a sphere, giving autonomous cars the ability to move in any direction at a moment’s notice, without having to worry about turning arcs or changing the angle of the wheels.

This new Urban version, unveiled at the Geneva Motor Show going on this week, adds a “bionic skin” to the mix, which includes embedded sensors throughout to detect things like a change in surface type for the road itself, say from asphalt to packed dirt, or to take note of things like snow and rain.

Using data collected by these sensors, the tire could then activate built-in actuators to change the shape of its surface and tread. It’s a bit like how electrical signals tell your muscles to change shape when you flex or grip, but all done at the behest of onboard artificial intelligence that tells the tire what shape will best help it maintain traction and control given the current state of the road beneath it.
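To make that sense-then-adapt loop concrete, here is a minimal sketch in Python. Goodyear has not published how the concept's software would work, so everything here is an assumption for illustration: the `SensorReading` fields, the tread profile names and the simple rule-based `choose_tread` function are all hypothetical, and a real system would presumably use a learned model rather than fixed rules.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SensorReading:
    """One hypothetical snapshot from the tire's embedded sensors."""
    surface: str  # illustrative labels, e.g. "asphalt" or "packed_dirt"
    wet: bool     # rain or standing water detected
    snow: bool    # snow detected


def choose_tread(reading: SensorReading) -> str:
    """Map a reading to a hypothetical tread profile name.

    Snow takes priority over rain, and rain over surface type.
    """
    if reading.snow:
        return "deep_sipes"
    if reading.wet:
        return "water_channels"
    if reading.surface == "packed_dirt":
        return "knobby"
    return "smooth"


def control_step(reading: SensorReading, current_profile: str) -> tuple[str, bool]:
    """Return the target profile and whether the actuators need to move."""
    target = choose_tread(reading)
    return target, target != current_profile


# Example: the car drives from dry asphalt into rain.
profile = "smooth"
profile, changed = control_step(SensorReading("asphalt", wet=True, snow=False), profile)
```

In this sketch the actuators would only be triggered when `changed` is true, so the tire does not reshape itself on every sensor tick.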

Imagine a future where you don’t need winter tires even if you live in Minnesota, or where your Tesla automatically adapts to have racing slicks when you’re taking it out for a track day. Intelligence and adaptability built into a tire are also another way that autonomous vehicle makers can take risk out of the equation for the most unpredictable parts of driving, including how weather affects the road.

Localized AI applications like this are enabled by a growing category of embedded processor and GPU options from companies including Nvidia, which make it possible to run machine learning “at the edge,” or where sensing actually happens, instead of having to shuttle that information back to big data centres and then out again.