Every day brings another exciting story of how artificial intelligence is improving our lives and businesses. AI is already analyzing x-rays, powering the Internet of Things and recommending next best actions for sales and marketing teams. The possibilities seem endless.

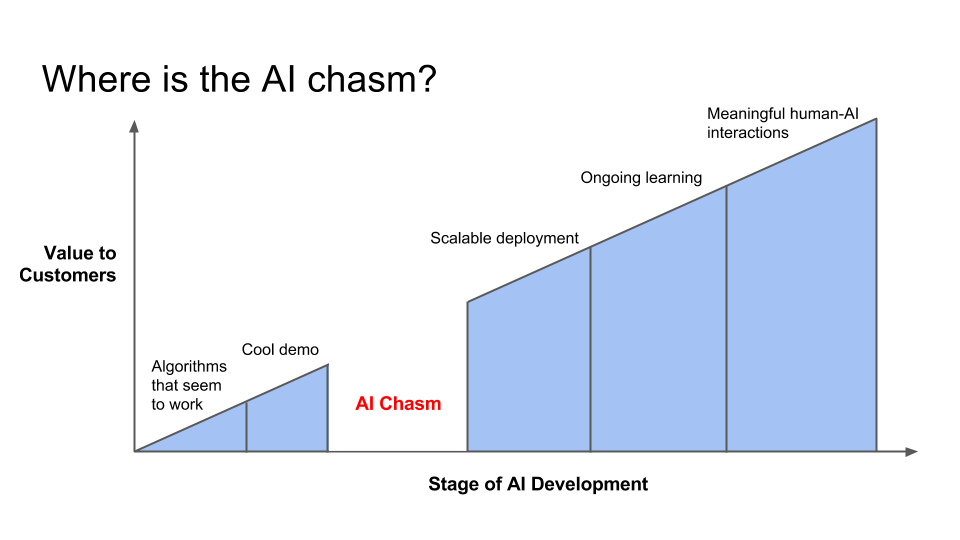

But for every AI success story, countless projects never make it out of the lab. That’s because putting machine learning research into production and using it to offer real value to customers is often harder than developing a scientifically sound algorithm. Many companies I’ve encountered over the last several years have faced this challenge, which I refer to as “crossing the AI chasm.”

I recently presented these lessons at ApacheCon, and in this article I'll share my top four lessons for overcoming both the technical and product chasms that stand in your path.

The technical AI chasm

New data. Data is key to AI. For example, if you want a chatbot to learn, you have to feed its algorithm examples of customer requests and the corresponding correct responses. Such data are often stored in a well-structured but static format, such as CSV files.

While you can build cool AI demos using static data sets, real-world AI that runs machine learning algorithms will constantly need new data to become smarter over time. That’s why companies should invest early in machine learning architecture that continually collects new data and uses it to regularly update its AI models.

The use of live data presents numerous engineering challenges, including scheduling, zero-downtime model updates, stability and performance monitoring. Plus, you need a mechanism to roll back to a previous state if something goes wrong with your new data. That leads us to the next point.
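
To make the update-and-rollback loop more concrete, here is a minimal Python sketch. Everything in it is hypothetical. A toy nearest-centroid classifier stands in for a real model. `ModelRegistry` is an in-memory list rather than a production model store. `retrain_cycle` is what a scheduler might call each time a new batch of data arrives. A candidate model is validated on a held-out set before it goes live, and `rollback` restores the previous version if a problem surfaces later.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Sequence, Tuple

Example = Tuple[float, int]     # (feature value, 0/1 label)
Model = Callable[[float], int]  # a trained model maps a feature to a label


def train_nearest_centroid(data: Sequence[Example]) -> Model:
    """Toy stand-in trainer: predict the class whose mean feature is closer.

    Assumes the batch contains at least one example of each class.
    """
    pos = [x for x, y in data if y == 1]
    neg = [x for x, y in data if y == 0]
    pos_mean, neg_mean = sum(pos) / len(pos), sum(neg) / len(neg)
    return lambda x: int(abs(x - pos_mean) <= abs(x - neg_mean))


def accuracy(model: Model, data: Sequence[Example]) -> float:
    return sum(model(x) == y for x, y in data) / len(data)


@dataclass
class ModelRegistry:
    """Hypothetical registry that keeps earlier versions for rollback."""
    history: List[Model] = field(default_factory=list)  # history[-1] serves traffic

    def deploy(self, model: Model) -> None:
        # Swapping in the new model is a single list append, so there is
        # never a moment when requests have no model to call.
        self.history.append(model)

    def rollback(self) -> None:
        # Discard the live version and fall back to the one before it.
        if len(self.history) < 2:
            raise RuntimeError("no earlier version to roll back to")
        self.history.pop()

    def predict(self, x: float) -> int:
        return self.history[-1](x)


def retrain_cycle(registry: ModelRegistry, new_batch: Sequence[Example],
                  holdout: Sequence[Example], min_accuracy: float = 0.8) -> bool:
    """One scheduled update: train on fresh data, deploy only if it validates."""
    candidate = train_nearest_centroid(new_batch)
    if accuracy(candidate, holdout) < min_accuracy:
        return False  # keep serving the current model
    registry.deploy(candidate)
    return True
```

Validating before deployment keeps obviously bad models away from users. Keeping the version history is what turns a later rollback, triggered by live monitoring, into a one-line operation.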

Quality assurance on collected training data. Companies should be thinking about data quality from Day One — especially for user-generated data. It’s exciting when machine learning is automated, but it can also backfire. The chatbot that recently went rogue on Twitter is a classic example of automation gone bad.

Before that chatbot was set loose to converse freely, it was trained using relevant public data that had been modeled, cleaned and filtered. But after the bot started learning from inappropriate conversations with real people, the tone of its tweets quickly took a severe turn for the worse. Garbage in, garbage out is the basic rule of machine learning, so a good AI system detects potential problems and alerts administrators when human intervention is needed.
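
One way to implement such a safeguard is a quality gate that screens each batch of user-generated examples before it reaches training. The sketch below makes several assumptions. Examples are plain text. The blocklist is a placeholder. `BLOCKLIST`, `is_acceptable` and `screen_batch` are illustrative names, not a library API, and a production system would more likely call a moderation service or toxicity classifier. If too large a share of a batch fails the checks, the batch is held back and a warning is logged for an administrator instead of being trained on automatically.

```python
import logging
from typing import List, Sequence, Tuple

log = logging.getLogger("training-data-qa")

# Hypothetical placeholder terms; swap in a maintained moderation list
# or a classifier in a real system.
BLOCKLIST = {"badword1", "badword2"}


def is_acceptable(text: str, max_len: int = 280) -> bool:
    """Rule-based checks on a single user-generated example."""
    words = {w.strip(".,!?") for w in text.lower().split()}
    return 0 < len(text.strip()) <= max_len and not (words & BLOCKLIST)


def screen_batch(batch: Sequence[str],
                 max_reject_rate: float = 0.1) -> Tuple[List[str], bool]:
    """Filter a batch and decide whether it is safe to train on automatically.

    Returns (accepted_examples, needs_review). A spike in rejected examples
    often means the input stream is being abused, so the whole batch is
    flagged for a human instead of silently dropping the bad rows.
    """
    accepted = [t for t in batch if is_acceptable(t)]
    reject_rate = 1 - len(accepted) / len(batch) if batch else 0.0
    if reject_rate > max_reject_rate:
        log.warning("Held back batch: %.0f%% of examples failed QA",
                    reject_rate * 100)
        return accepted, True
    return accepted, False
```

Flagging the whole batch, rather than only dropping offending rows, reflects the point above: a sudden shift in incoming data is itself a signal that a human should look.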

The product AI chasm

Optimize for the right goal. AI success hinges on defining your prediction problem correctly. From the beginning, you need to clearly identify the input query, the prediction output and what qualifies as a good or bad prediction. Those definitions become the evaluation metrics that data scientists use to measure the model's accuracy.

Start by defining your goal. Do you want to maximize revenue, create a better user experience, automate manual tasks or something else? To be successful, a real-world AI product must use evaluation metrics that accurately reflect the business goals.
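
A small, hypothetical example shows why the choice of metric matters. Suppose a model decides which customers receive a promotional offer, each offer costs $2 and each resulting sale earns $50. These figures are made up for illustration, and `net_value` is an assumed business metric, not a standard one. Measured by accuracy, a model that never makes an offer looks better than one that makes a few wasted offers. Measured against the business goal, it earns nothing.

```python
from typing import Sequence

# Hypothetical business figures for illustration only.
REVENUE_PER_SALE = 50.0
COST_PER_OFFER = 2.0


def accuracy(preds: Sequence[int], labels: Sequence[int]) -> float:
    """Share of predictions that match the true label."""
    return sum(p == y for p, y in zip(preds, labels)) / len(labels)


def net_value(preds: Sequence[int], labels: Sequence[int]) -> float:
    """Business metric: every offer costs money; offers to buyers earn revenue."""
    total = 0.0
    for p, y in zip(preds, labels):
        if p == 1:
            total -= COST_PER_OFFER
            if y == 1:
                total += REVENUE_PER_SALE
    return total


# 1 = customer would buy if offered; only 2 of 10 customers are buyers.
labels = [1, 0, 0, 0, 0, 0, 0, 0, 0, 1]
cautious = [0] * 10                          # never makes an offer
aggressive = [1, 1, 1, 1, 0, 0, 0, 0, 0, 1]  # catches both buyers, 3 wasted offers

for name, preds in [("cautious", cautious), ("aggressive", aggressive)]:
    print(f"{name}: accuracy={accuracy(preds, labels):.1f}, "
          f"net value=${net_value(preds, labels):.0f}")
# cautious: accuracy=0.8, net value=$0
# aggressive: accuracy=0.7, net value=$90
```

On these numbers, the cautious model wins on accuracy but the aggressive one is the only model that makes money. Which model is "better" depends entirely on the goal you chose to measure.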

Netflix’s algorithm competition story drives this lesson home clearly. After awarding a $1 million prize to the creators of a new movie-rating algorithm, Netflix was unable to use the algorithm in the real world largely because the transition from DVDs to video streaming made the original goal obsolete.

When setting your metrics, keep three key requirements in mind. Make sure you: 1) measure the things that really matter; 2) evaluate results with both live and new data; and 3) explain the results to stakeholders in a way they understand and value. This last requirement raises the important point of human-AI interaction.

Human-AI interaction. Humans are complicated, so their interactions with AI present challenges that don't arise when dealing with data sets in a lab. Be aware that customers won't use an AI-powered product if they don't trust it. And while you can try to build trust by showing how accurate a predictive model is, most consumers can't really relate to robust scientific metrics.

As a result, you need to use your product's UX/UI to overcome the challenge of building trust. For example, when Apple's Siri virtual assistant first launched, it defaulted to a male or female voice depending on which country the user was in. And Google's self-driving cars come with cute and friendly faces to calm customers who fear for their safety. Remember that the way people access your algorithm presents you not only with challenges but also with solutions.

The truth is, crossing the AI chasm doesn’t have to be intimidating. Just be sure you approach it with a well-formed plan that keeps you looking ahead instead of down. And remember that to be AI-first, your company also has to be customer-first.