Artificial intelligence allows machines to reason and interact with the world, and it’s evolving at a breakneck pace. It’s already driving our cars, managing our health and even competing with our best and most talented humans, sometimes beating them at their own games.

Many advances in AI can be attributed to machine learning, which works by tapping massive computing power to crunch through enormous amounts of digitized data. Now consider that most of our data, the best minds in the business and more computing power than you could ever imagine sit with just a handful of companies. As a result, only those few companies are well positioned to understand the true potential, and the current limits, of AI.

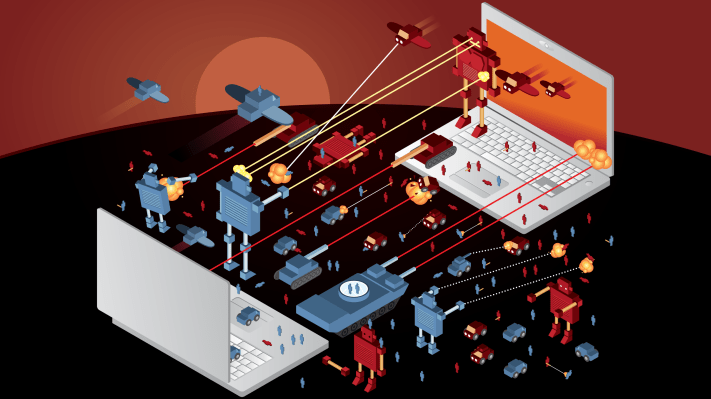

In response to AI’s rapid development, more than 8,000 leading researchers and scientists, including Elon Musk and Stephen Hawking, have signed an open letter warning of AI’s potential pitfalls and possible detriment to humanity. Their main concern is an existential risk to humanity: an AI in control of autonomous weapons.

The letter goes on to state that autonomous weapons are quickly becoming the third revolution in warfare, after gunpowder and nuclear arms, and that AI researchers must focus their research on what is beneficial for humanity, and not just what is profitable. However, much of what is researched with AI may not be public knowledge, and is likely internal research that’s closely held by just a few very wealthy corporations. How can the public make informed decisions about something that is kept secret?

Luckily, there are many who are willing to help us understand the current and future potential of AI for the benefit of humanity. These folks include recent nonprofits such as The Future of Life Institute and OpenAI, with notable donors including Elon Musk, CEO of Tesla and SpaceX. OpenAI’s mission is:

“…to advance digital intelligence in the way that is most likely to benefit humanity as a whole, unconstrained by a need to generate financial return. Since our research is free from financial obligations, we can better focus on a positive human impact.”

OpenAI’s technical goals go on to state that the organization is out to build a general AI, meaning an AI that can perform any task, and perhaps to develop a body to go along with it. It has hired many notable AI experts, and its backers committed $1 billion in funding in 2015.

OpenAI certainly has the talent to move things forward, but can a nonprofit really keep up with massive corporate innovation? To do so, it will need both of the ingredients that have made machine learning a success: exponentially growing computing power and access to massive amounts of data.

To help provide the computing power OpenAI will need, NVIDIA, a graphics chip manufacturer specializing in optimized ML hardware and software, just delivered its first supercomputer to the organization: a $129,000 machine billed as the world’s first deep learning supercomputer in a box.

Of course, it’s not hard to imagine that Google, Microsoft, Amazon or Facebook have orders of magnitude more computing power than any one machine could offer. That’s not to suggest that these corporations are hiding their computing power. On the contrary, Google, for example, has open-sourced its AI library, TensorFlow, and is quickly scaling its cloud computing platform to provide access to state-of-the-art equipment, including clusters of NVIDIA hardware.

More worrisome for OpenAI than keeping up with corporate computing power, however, is being able to access the same data. Every ML algorithm requires data to learn, and it needs enough of it to generalize well across the population it is meant to describe. Plenty of data sets for ML have been available since the early 1990s. However, it’s only in the last few years that our digital sharing economy has enabled mass collection of our personal data and behaviors, something an AI needs in order to learn. OpenAI, on the other hand, has no users to mine for data, so it must rely solely on open data sources.

Another potential concern is that OpenAI is co-chaired by Elon Musk, CEO of Tesla and SpaceX, and Sam Altman, president of Y Combinator. Will their own companies take advantage of an all-star AI research team with $1 billion in funding? And will OpenAI employees even care if they are given stock options in Y Combinator and, soon, SpaceX?

Regardless of potential pitfalls, OpenAI is one of the few nonprofit organizations dedicating itself to the humane development of AI. Now that AI is becoming more general, its developments are already surprising us. A nonprofit led by the brightest minds sounds like exactly what the world needs to help us understand the future of AI, and to lead the way toward a positive impact on society.

Rather than a corporate-driven scenario, where AI could be used for the financial advantage of a select few, the nonprofit recognizes the potential for AI to develop a better future for all. That includes medical applications that will save lives, cars that will reduce accidents and a general AI that can benefit humanity.