Over the last decade, we’ve watched big data tackle problems as varied as crime, health care, climate change, and even how to select a movie.

So with ever-growing collections of political data, increasingly sophisticated statistical techniques, and the ubiquity of social media, it is tempting to think that big data should be able to predict major votes like Brexit or the American presidential election with complete accuracy.

After all, statistical error decreases with sample size, so with unbounded data at our fingertips, it’s easy to imagine that measurement error will vanish along with it. Indeed, overconfidence in large sample sizes is one of the most common statistical blunders we see as a big data training company.
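The way statistical error shrinks with sample size can be made concrete with the textbook margin of error for a polled proportion, roughly 1.96 × √(p(1 − p)/n) at 95% confidence. The Python sketch below is illustrative only: it assumes a simple random sample and the normal approximation, and `margin_of_error` is a hypothetical helper, not part of any polling library. Notice what the formula leaves out: it says nothing about whether the people sampled are representative.

```python
import math


def margin_of_error(p: float, n: int, z: float = 1.96) -> float:
    """Approximate 95% margin of error for a sample proportion.

    Assumes a simple random sample and the normal approximation,
    so it measures statistical error only, never bias.
    """
    return z * math.sqrt(p * (1 - p) / n)


# Worst case p = 0.5; every 100x increase in n shrinks the margin 10x.
for n in (1_000, 100_000, 10_000_000):
    print(f"n = {n:>10,}: +/- {100 * margin_of_error(0.5, n):.2f} points")
```

Going from 1,000 to 10 million respondents cuts the margin from about 3 points to a few hundredths of a point, which is exactly why huge samples feel so reassuring.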

History, however, is replete with cautionary tales of being lulled into complacency by the sweet siren call of misapplied statistics. These warnings are not just scattered anecdotes but coalesce into a pattern that reveals both the promise and potential peril of big data.

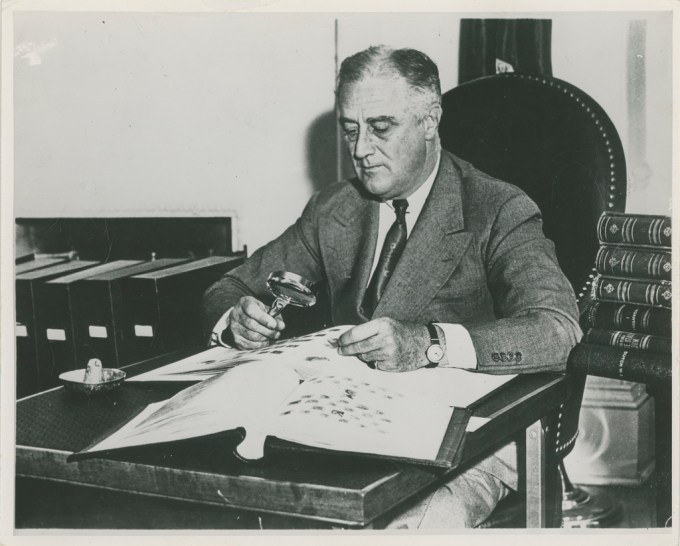

In 1936, Literary Digest’s poll predicted Republican Governor Alfred Landon of Kansas would win the upcoming presidential election by a landslide. The magazine believed in the irrefutability of its sample size: It had polled 10 million individuals — an astronomical number for any poll. Which made it all the more surprising when the Democratic incumbent, President Franklin Delano Roosevelt, defeated Landon in one of the most lopsided electoral victories in modern American history.

Despite its size, the Digest’s sample population was incredibly biased: the polling team only surveyed its own magazine subscribers and individuals in automobile and telephone directories — precisely those with disposable incomes well above the national average who were far more likely to vote Republican.

Photo courtesy of Flickr/The FDR Library & Museum.

But even surveying an unbiased population cannot guarantee polling accuracy. In the 1982 California governor’s race, exit polls wrongly predicted that Tom Bradley, the long-time mayor of Los Angeles, would win by a significant margin.

When the votes were counted, Bradley had narrowly lost to his Republican challenger. Post-election analysis suggested that significantly fewer white voters had voted for Bradley, an African American, than polls had predicted. The phenomenon, now known as the Bradley Effect, is thought to arise when voters hide from pollsters their intention to vote against a non-white candidate for fear of being seen as racist.

Such effects aren’t confined to race or to U.S. elections. Polls before the 1992 UK general election fell prey to something similar, predicting that the election would produce a narrow Labour majority.

After the Conservatives won the general election with a 21-seat majority in Parliament, subsequent research suggested that Conservative voters had been more reluctant to disclose their voting intentions, a pattern the press dubbed the “Shy Tory Factor.” A robust and random sample means nothing, then, if a disproportionate number of respondents are less than sincere.

These examples illustrate that in polling, as in any other kind of sampling, measurement error has two separate components: statistical error (random fluctuations caused by chance alone) and sampling bias (error introduced by inadvertently, or unavoidably, sampling a biased population). A larger sample shrinks the first, roughly in proportion to one over the square root of the sample size, but leaves the second untouched. Big data therefore offers the potential for vanishingly small statistical error but does nothing to eliminate the risk of sampling bias.
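A small simulation shows why a bigger sample cannot fix bias. The Python sketch below is a toy model under loudly stated assumptions: the support figures are made up, and bias is modeled simply as a sampling frame (the people a poll can actually reach) whose support differs from that of the whole electorate, in the spirit of the Literary Digest’s subscriber and directory lists. `poll` and `compare` are hypothetical helpers written only for this illustration.

```python
import random

# Hypothetical numbers chosen for illustration, not real polling data.
TRUE_SUPPORT = 0.45   # share of the whole electorate backing candidate A
FRAME_SUPPORT = 0.55  # share among the people the biased poll can reach


def poll(n: int, support: float, rng: random.Random) -> float:
    """Simulate n respondents who each back candidate A with probability `support`."""
    yes = sum(1 for _ in range(n) if rng.random() < support)
    return yes / n


def compare(n: int, seed: int = 0) -> tuple[float, float]:
    """Return absolute errors (vs. TRUE_SUPPORT) of a random poll and a biased-frame poll."""
    rng = random.Random(seed)
    unbiased = poll(n, TRUE_SUPPORT, rng)   # sample drawn from the real electorate
    biased = poll(n, FRAME_SUPPORT, rng)    # same size, drawn from the skewed frame
    return abs(unbiased - TRUE_SUPPORT), abs(biased - TRUE_SUPPORT)


for n in (100, 10_000, 1_000_000):
    u, b = compare(n)
    print(f"n = {n:>9,}: random-sample error {u:.3f}, biased-frame error {b:.3f}")
```

As n grows, the random-sample error shrinks toward zero, while the biased-frame error settles near the 10-point gap between the frame and the electorate. In a model like this, non-disclosure effects such as the Shy Tory Factor behave the same way: they shift what the poll converges to rather than adding noise that averages out.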

Consider Brexit, the referendum on the UK leaving the EU. While online polls suggested the race was very close, telephone polls projected a comfortable 18-point victory for “Remain”. When Leave narrowly won, it demonstrated how much sampling bias matters in accurately predicting election results.

In the same vein, survey results show that candidate Donald Trump performs nearly six percentage points better in online polls than in telephone ones. Some pundits have posited a “politically correct bias” in phone polling, a sort of generalized Bradley Effect, whereby voters are more truthful in impersonal online surveys than over the phone. Only election day can definitively tell us whether online or telephone results are more accurate for the U.S. presidential election.

Ironically, many trends of the digital revolution, like the unplugging of landlines and the growing reliance on online polling, have made sampling bias in polling worse. More generally, the era of big data, with its divide between digital haves and have-nots and its reliance on self-selecting social media, has made fields beyond polling more prone to sampling bias. This is not to say that big data is useless. It does, however, underscore the importance of having humans interpret and question its results.