Top cancer researchers recently reported their findings and recommendations to President Obama and Vice President Biden’s Cancer Moonshot task force. To my surprise, all 10 recommendations in the Blue Ribbon Panel’s report depend heavily on enabling computational infrastructure. The same key words run through the recommendations: sharing data, engaging patients, precision medicine, genetic understanding, interdisciplinary, ecosystem and genomics.

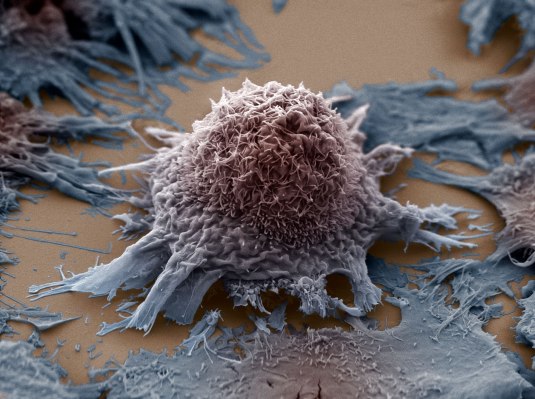

Cancer is one of the major health challenges of our time. Caused by abnormal cells dividing in an uncontrolled way, cancer is a leading cause of death worldwide, with more than 14 million new cases and 8 million deaths each year.

Most of us have had our lives touched, directly or indirectly, by a cancer diagnosis. Efforts to end the emotional and economic burden of cancer have been the subject of many false starts over the years. But as someone whose work in computational biology sits at the intersection of biology and big data, I am confident that computational infrastructure is the vital ingredient for turning the recommendations into reality. Below are four key ways that computational infrastructure can be part of the recipe for success.

Emerging technologies characterize cancer as never before

Cancer is first and foremost a genetic disease, driven by mutations, or errors, in our DNA. Identifying and characterizing these errors requires specialized machines, skilled personnel and complex data analysis.
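To make "errors in our DNA" a little more concrete, here is a toy sketch of the basic comparison behind finding them: inherited (germline) differences show up in a patient's normal tissue, while tumor-specific (somatic) mutations show up only when the tumor is compared with that same patient's normal tissue. It assumes the three sequences are already aligned and of equal length; the function `classify_variants` and the example sequences are hypothetical, and real pipelines work from millions of sequencing reads with statistical models rather than single clean sequences.

```python
def classify_variants(reference: str, normal: str, tumor: str) -> dict[str, list[tuple[int, str, str]]]:
    """Toy classifier for single-base differences in pre-aligned sequences.

    Germline: the patient's normal tissue differs from the reference (inherited).
    Somatic: the tumor differs from the patient's own normal tissue (acquired).
    """
    if not (len(reference) == len(normal) == len(tumor)):
        raise ValueError("sequences must be aligned to equal length")
    result: dict[str, list[tuple[int, str, str]]] = {"germline": [], "somatic": []}
    for pos, (ref, norm, tum) in enumerate(zip(reference, normal, tumor)):
        if norm != ref:
            result["germline"].append((pos, ref, norm))
        if tum != norm:
            result["somatic"].append((pos, norm, tum))
    return result


calls = classify_variants(
    reference="ACGTACGTAC",
    normal="ACGAACGTAC",  # hypothetical inherited change at position 3
    tumor="ACGAACGTTC",   # carries it too, plus an acquired change at position 8
)
print(calls)
# {'germline': [(3, 'T', 'A')], 'somatic': [(8, 'A', 'T')]}
```

The matched normal sample is what lets the comparison separate the two kinds of change: the position-3 difference is in every cell of the patient, while the position-8 difference appears only in the tumor.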

Even today, the precise molecular causes of many cancers remain unknown. As a result, many patients with cancer are treated without a precise genetic diagnosis. To truly understand, diagnose and treat cancer, we need a clearer view of its genetics. This aligns with the panel’s recommendations to conduct retrospective analysis of biospecimens from patients treated with standard of care, to better understand tumor heterogeneity and to generate human tumor atlases.

Governments and private industry are recognizing the research and population health benefits of genome sequencing. Multiple large-scale sequencing projects have been initiated worldwide; for example, the U.S. government’s Million Veteran Program, the U.K. government’s 100,000 Genomes Project and the AstraZeneca-led integrated genomics initiative for drug development and discovery.

Thanks to these and other projects, millions of sequenced whole cancer genomes will become a reality. By one estimate, between 100 million and 2 billion human genomes will have been sequenced by 2025. As the number of sequenced genomes grows, this data set will become an increasingly rich resource for the cancer research community, enabling precise characterization of the molecular changes that define cancer cells.

Cloud computing enables large-scale collaborative analysis

Two of the 10 recommendations specifically call for a direct patient engagement network and the creation of adult (and pediatric) immunotherapy clinical trials networks. More broadly, collaboration is at the core of all 10 recommendations, as the Blue Ribbon Panel unequivocally calls for better data sharing. The scale of the data used in cancer discovery means computation has a huge role in supporting research.

Each sequenced genome requires roughly 300-400 GB of hard disk space just to store the raw and processed files. By 2025, the amount of human genomic data is predicted to reach 2-40 exabytes, which would exceed the storage requirements of astronomy and YouTube (the other two major generators of big data). Downloading and sharing this data will be difficult, time-consuming and costly. Working in real time on a collaborative analysis with a colleague on a different continent will be nigh on impossible.
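The storage figures in this paragraph lend themselves to a quick back-of-the-envelope check. This sketch multiplies the quoted 300-400 GB per genome by cohort size; it assumes a flat per-genome cost and decimal units, and the two cohort sizes are illustrative (100,000 echoes the U.K. project mentioned earlier, and 100 million is the low end of the 2025 sequencing estimate).

```python
GB = 10**9   # decimal units, as storage vendors usually quote them
PB = 10**15
EB = 10**18


def cohort_storage_bytes(num_genomes: int, gb_per_genome: int) -> int:
    """Raw plus processed storage for a cohort, assuming a flat per-genome cost."""
    return num_genomes * gb_per_genome * GB


for num_genomes in (100_000, 100_000_000):
    low = cohort_storage_bytes(num_genomes, 300)
    high = cohort_storage_bytes(num_genomes, 400)
    print(f"{num_genomes:,} genomes: {low / PB:,.0f}-{high / PB:,.0f} PB")
# 100,000 genomes: 30-40 PB
# 100,000,000 genomes: 30,000-40,000 PB
```

Even a single national project lands in the tens of petabytes, and 100 million genomes at this per-genome cost reach tens of exabytes, far more than any one lab can download or host.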

To overcome these challenges, the cancer research community is increasingly turning to the cloud to store and analyze cancer genomic data. The U.S. National Cancer Institute (NCI) initiated Cancer Genomics Cloud Pilots to enable researchers to access The Cancer Genome Atlas (TCGA) — the world’s largest public genomic data set — containing over 2 petabytes of sequencing and other data from more than 11,000 patients.

Rather than waiting weeks to download the data, researchers can log in to a cloud-based system to explore the data and run large-scale analyses. A key value of the cloud is that collaboration is the default; researchers can log in to the NCI Pilots from anywhere in the world and work together on a project.
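A rough calculation shows why "waiting weeks" is no exaggeration. This sketch estimates how long it would take to download TCGA's roughly 2 petabytes over a single network link; the link speeds and the `efficiency` parameter (the fraction of nominal line rate actually achieved) are assumptions, not measurements of any real institution's connection.

```python
SECONDS_PER_DAY = 86_400


def transfer_days(size_bytes: float, link_gbit_per_s: float, efficiency: float = 1.0) -> float:
    """Days needed to move size_bytes over a link running at a fraction of its line rate."""
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    bits = size_bytes * 8
    seconds = bits / (link_gbit_per_s * 1e9 * efficiency)
    return seconds / SECONDS_PER_DAY


tcga_bytes = 2e15  # "over 2 petabytes", so this is a lower bound
for gbps in (1, 10, 100):
    print(f"{gbps:>3} Gbit/s: {transfer_days(tcga_bytes, gbps):6.1f} days")
```

Even at full line rate, a dedicated 10 Gbit/s link needs about 18.5 days and a 1 Gbit/s link about 185 days; real transfers rarely sustain full line rate, so bringing the analysis to the data avoids the wait entirely.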

Analytic methods continue to improve, to better understand disease

Recommendation 10 calls for the development of new enabling cancer technologies and explicitly points to the need for computational platforms that connect several layers of biomedical data.

As the data sets used in cancer research grow in size and complexity, it will be important to develop new data structures for representing and querying millions of cancer genomes. One possibility is to use directed graph structures (often called genome graphs or variation graphs), in which sequence shared across individuals is stored once and each variant appears as an alternative branch, so that all the genetic variation of a population fits in a lightweight data format. This approach reduces the storage and memory demands of working with large genomic data sets, making the data easier for researchers to work with.
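A minimal sketch of the idea, assuming a simple in-memory representation rather than the format of any particular genome-graph tool: each node holds a stretch of sequence, edges record which stretches can follow one another, and each individual's genome is a path through the graph. The class name `VariationGraph` and the three example haplotypes are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class VariationGraph:
    """Toy directed graph: nodes hold sequence, paths spell out individual haplotypes."""
    nodes: dict[int, str] = field(default_factory=dict)
    edges: dict[int, set[int]] = field(default_factory=dict)

    def add_node(self, node_id: int, sequence: str) -> None:
        self.nodes[node_id] = sequence
        self.edges.setdefault(node_id, set())

    def add_edge(self, src: int, dst: int) -> None:
        self.edges[src].add(dst)

    def spell(self, path: list[int]) -> str:
        """Reconstruct a linear sequence by walking a path of node IDs."""
        for a, b in zip(path, path[1:]):
            if b not in self.edges[a]:
                raise ValueError(f"no edge {a} -> {b}")
        return "".join(self.nodes[n] for n in path)

    def stored_bases(self) -> int:
        """Total bases held in the graph; shared segments are counted once."""
        return sum(len(seq) for seq in self.nodes.values())


graph = VariationGraph()
for node_id, seq in {1: "ACGT", 2: "A", 3: "G", 4: "TTCA", 5: "GG", 6: "CCA"}.items():
    graph.add_node(node_id, seq)
# Nodes 2 and 3 are alternative bases (a SNP); node 5 is an optional insertion.
for src, dst in [(1, 2), (1, 3), (2, 4), (3, 4), (4, 5), (4, 6), (5, 6)]:
    graph.add_edge(src, dst)

haplotypes = {"person_a": [1, 2, 4, 5, 6], "person_b": [1, 3, 4, 6], "person_c": [1, 2, 4, 6]}
linear_bases = sum(len(graph.spell(p)) for p in haplotypes.values())
print(graph.stored_bases(), linear_bases)  # 15 38
```

With only three short sequences the saving is modest (15 stored bases instead of 38), and a real graph must also store its edges and paths. The benefit comes from scale: every additional genome that shares the same backbone adds a path of node IDs rather than another full copy of the sequence.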

Massive genomic data sets will need to be analyzed alongside other molecular data, clinical data (including electronic health records) and behavioral data (for example, measures of patient behavior and risk exposure). Ideally, these data sets should live side by side so they can be compared directly, and advances in computational layering and cloud computing can help accomplish that.
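As an illustration of keeping data layers side by side, here is a minimal sketch that joins genomic, clinical and behavioral records on a shared patient identifier and then runs one query across all three. The function name `integrate_layers`, the field names, patient IDs and values are all invented for the example; a real system would also need de-identification, access control and far richer schemas.

```python
def integrate_layers(genomic, clinical, behavioral):
    """Inner-join three data layers on patient ID.

    Patients missing from any layer are dropped; the layers are assumed
    to use non-overlapping field names.
    """
    shared_ids = genomic.keys() & clinical.keys() & behavioral.keys()
    return {
        pid: {"mutated_genes": genomic[pid], **clinical[pid], **behavioral[pid]}
        for pid in sorted(shared_ids)
    }


# Hypothetical example records.
genomic = {"P001": ["TP53", "KRAS"], "P002": ["EGFR"], "P003": []}
clinical = {
    "P001": {"diagnosis": "lung adenocarcinoma", "stage": "II"},
    "P002": {"diagnosis": "lung adenocarcinoma", "stage": "IV"},
}
behavioral = {"P001": {"smoker": True}, "P002": {"smoker": False}, "P004": {"smoker": True}}

integrated = integrate_layers(genomic, clinical, behavioral)
# A cross-layer query: smokers whose tumors carry a KRAS mutation.
print([pid for pid, rec in integrated.items() if rec["smoker"] and "KRAS" in rec["mutated_genes"]])
# ['P001']
```

An inner join is the simplest choice; it silently drops patients such as P003 and P004 who lack a record in some layer, which is exactly the kind of gap that better data sharing is meant to close.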

Computational biology can help immunotherapy become more cost-effective

The report emphasized the importance and potential promise of immunotherapy, which harnesses the body’s own immune system to attack cancer cells directly. Use of these therapies is made possible by detailed molecular characterization of the tumors. Silicon Valley entrepreneur Sean Parker is a prominent backer of immunotherapies, having provided a $250 million grant via the Parker Foundation to help bring together leading researchers from six major U.S. cancer research centers.

These therapies are effective, but not yet perfect, and much more development is required. Clinical development costs can be reduced by bringing computational resources in earlier in the R&D value chain: genetic profiling before clinical trials begin can identify stratified groups of patients who are most likely to benefit. Computational methods also make it possible to profile the immune repertoire across whole populations, which can help clinicians predict outcomes more accurately.
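To illustrate what population-level repertoire profiling and stratification might look like computationally, here is a minimal sketch that scores each patient's T-cell receptor repertoire with Shannon diversity (one common summary of how evenly immune cells are spread across clones) and splits patients at the cohort median. The clone counts are made up, the metric and the median cutoff are assumptions chosen for simplicity, and the sketch makes no claim about which group would respond to any therapy.

```python
import math
from statistics import median


def shannon_diversity(clone_counts: list[int]) -> float:
    """Shannon entropy (natural log) of clone frequencies; higher means more diverse."""
    total = sum(clone_counts)
    return -sum((c / total) * math.log(c / total) for c in clone_counts if c > 0)


def stratify_by_diversity(repertoires: dict[str, list[int]]) -> dict[str, str]:
    """Label each patient 'high' or 'low' relative to the cohort median diversity."""
    scores = {pid: shannon_diversity(counts) for pid, counts in repertoires.items()}
    cutoff = median(scores.values())
    return {pid: "high" if score > cutoff else "low" for pid, score in scores.items()}


# Hypothetical clone counts per patient (cells assigned to each clonotype).
repertoires = {
    "P001": [25, 25, 25, 25],  # four equally sized clones
    "P002": [97, 1, 1, 1],     # dominated by one expanded clone
    "P003": [50, 50],
    "P004": [10] * 10,
}
print(stratify_by_diversity(repertoires))
# {'P001': 'high', 'P002': 'low', 'P003': 'low', 'P004': 'high'}
```

In practice the stratifying variable and its cutoff would come from clinical evidence rather than a cohort median; the point here is only that, once repertoires are profiled, assigning patients to groups before a trial is a cheap computation.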

Final thoughts: Support for curing cancer has never been stronger

Alongside biological and computational advances that will enable high-resolution molecular characterization of cancer, there has never been a more favorable climate in which to do cancer research. The U.S. government is leading this high-profile fight through charismatic and committed champions such as Vice President Biden, and through initiatives such as the U.S. Precision Medicine Initiative and the Cancer Moonshot.

When thinking about cancer research, people conjure up images of white coats and test tubes. We don’t immediately think of computational cores, cloud computing, APIs and Python scripts. But I believe computational infrastructure is the not-so-secret ingredient that glues together all 10 recommendations of the Blue Ribbon Panel and ensures we achieve 10 years of progress in cancer research in five years’ time.