Google has made a lot of progress on its self-driving car project in the past few years, and just this week it began ferrying journalists around Mountain View in the autonomous vehicles. The company shared a heap of information with us about the project, its history and its recent advancements – and while it’s been hard going, you might be surprised at how far the team has come, and just how successful its testing has been to date.

Google cites GM’s ‘Futurama’ ride at the 1939 World’s Fair as the origin of the passion for self-driving vehicles, but the self-driving car project itself kicked off at Google in 2009 under Sebastian Thrun, initially focusing on the relatively uncomplicated (but still monumental) task of negotiating freeways. The past year or so has been spent tackling city street driving, with improvements including pedestrian and cyclist detection, sign reading via improved computer vision, and more advanced software models that predict a full spectrum of possible roadway incidents.

Building a comprehensive model of city driving is painstakingly slow work, however; Google says that it’s adding new streets in Mountain View every week, but that’s just a single city – tackling new urban centers, with their varying municipal and state driving laws and different driver behavior, will presumably involve additional work as well. Google also says it needs to do more work on situations that humans handle via social signals (including hand gestures, head movements and eye contact), like 4-way stops, lane changes and merging with other traffic.

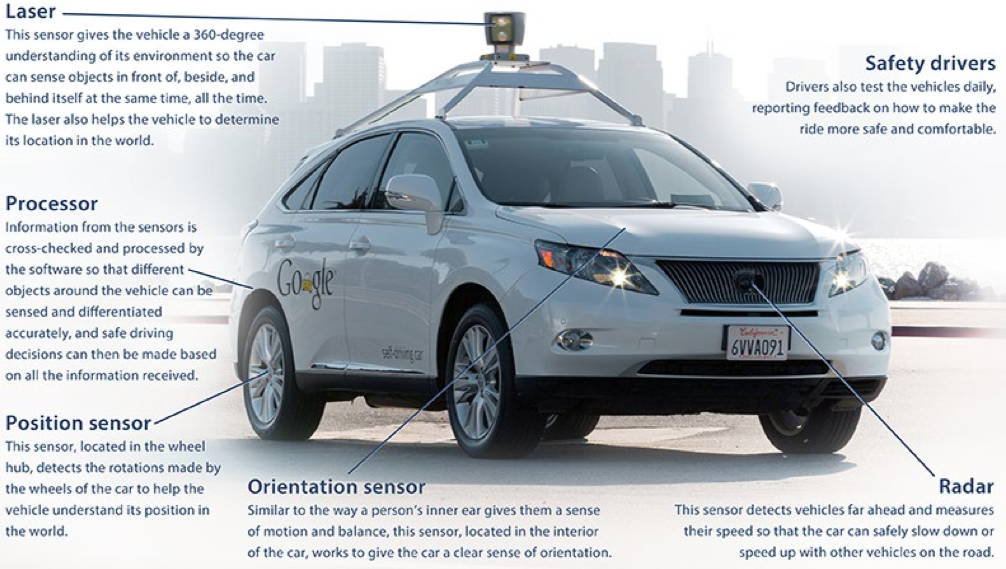

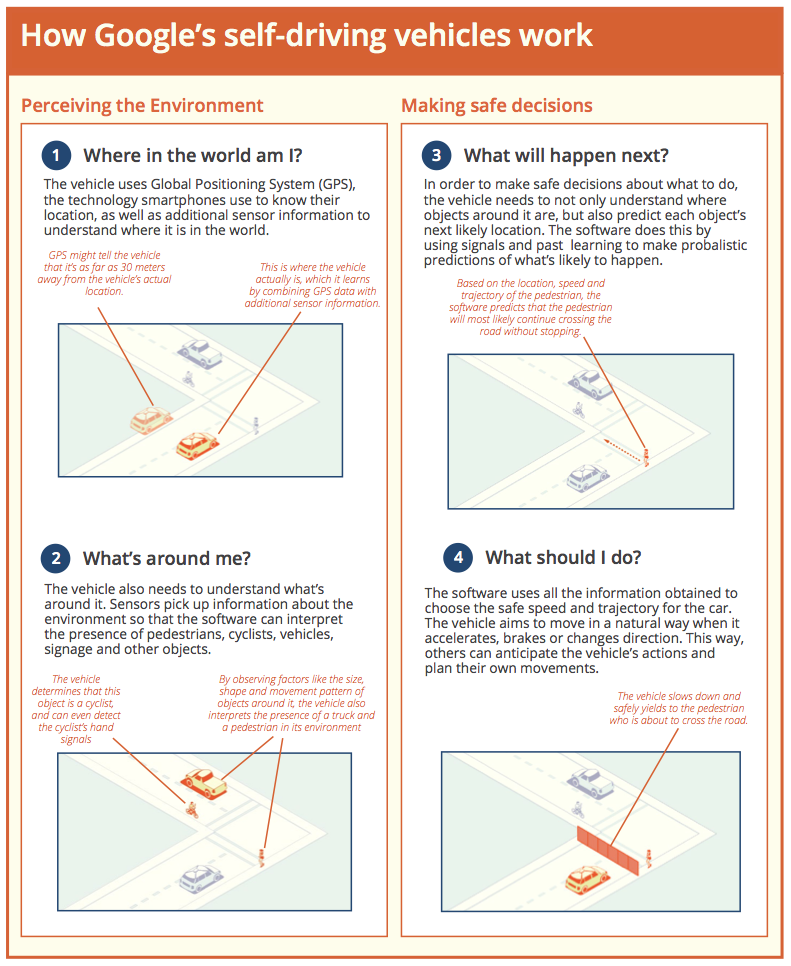

Google’s self-driving cars have other superpowers that we mere humans lack, however, and that probably has something to do with the project’s 0 percent accident rate in its five-year history – yes, the autonomous vehicles have been involved in exactly zero incidents or collisions over the course of the trial. For instance, a Google car can “see” 360 degrees, using a combination of lasers, positional and orientation sensors, and radar. All of this info is fed to a processor that checks it for accuracy and relevance, then builds a detailed map of the car’s surroundings and processes that map for event data in real time. Take a look at how the information gathering and decision-making process, including the resulting action, happens step by step in the accompanying graphic.
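For readers curious how a gather, check, map and act loop like the one described above might fit together in software, here is a minimal Python sketch. It is purely illustrative and is not Google’s design: the `Reading` type, the confidence and range thresholds, the grid-cell “map” and the braking rule are all hypothetical simplifications invented for this example.

```python
from dataclasses import dataclass


# Hypothetical sensor reading: which sensor produced it, where the object is
# relative to the car (metres), and how confident the sensor is (0.0-1.0).
@dataclass
class Reading:
    sensor: str        # e.g. "lidar", "radar" (illustrative names only)
    x: float           # metres ahead of the car
    y: float           # metres to the left (+) or right (-)
    confidence: float


MIN_CONFIDENCE = 0.5   # assumed threshold for the "accuracy" check
MAX_RANGE_M = 100.0    # assumed cut-off for the "relevance" check
CELL_M = 1.0           # assumed size of each map grid cell, in metres


def filter_readings(readings):
    """Keep readings that pass simple accuracy and relevance checks."""
    return [
        r for r in readings
        if r.confidence >= MIN_CONFIDENCE
        and (r.x ** 2 + r.y ** 2) ** 0.5 <= MAX_RANGE_M
    ]


def build_map(readings):
    """Fuse readings from all sensors into a set of occupied grid cells."""
    return {(int(r.x // CELL_M), int(r.y // CELL_M)) for r in readings}


def decide(occupied, stop_distance_m=10.0, lane_half_width_m=2.0):
    """Toy decision rule: brake if any occupied cell is close and in our lane."""
    for cx, cy in occupied:
        ahead = cx * CELL_M
        lateral = cy * CELL_M
        if 0 <= ahead <= stop_distance_m and abs(lateral) <= lane_half_width_m:
            return "brake"
    return "continue"


def step(readings):
    """One pass of the loop: check readings, update the map, pick an action."""
    return decide(build_map(filter_readings(readings)))


if __name__ == "__main__":
    frame = [
        Reading("lidar", 8.0, 0.5, 0.9),    # object close ahead, in our lane
        Reading("radar", 40.0, -3.5, 0.8),  # car in the next lane, far away
        Reading("lidar", 5.0, 0.0, 0.2),    # low-confidence noise, discarded
    ]
    print(step(frame))
```

Running the script prints `brake`, because the high-confidence object 8 m ahead falls inside the assumed stopping zone, while the distant car in the next lane and the low-confidence reading don’t change the decision. A real vehicle would replace each of these toy functions with far more sophisticated perception, prediction and planning.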

The entire purpose of the self-driving car project is to make it possible for cars to react to their environment with 100 percent accuracy, avoiding the 93 percent of road accidents each year that are due to human error. So far, the project has logged around 700,000 autonomous miles, using specialized Lexus SUVs modified with Google’s sensor, processor and imaging tech. It’s still a long way from being something most of us use every day in place of our current cars, but Google is in talks with automakers to begin work on production vehicles, with a projected time frame of around six years before the first (limited) model could come to market. It’s still a distant dream, but far less so than it was 75 years ago at the World’s Fair.