In a fascinating project, researchers at the University of California, San Diego and the University of Toronto have found that computers are far better than humans at telling real pain from faked pain in the faces of test subjects. In fact, while humans could tell the difference 55 percent of the time, the computers could tell it 85 percent of the time.

“In highly social species such as humans, faces have evolved to convey rich information, including expressions of emotion and pain,” said Kang Lee, a senior author. “And, because of the way our brains are built, people can simulate emotions they’re not actually experiencing – so successfully that they fool other people. The computer is much better at spotting the subtle differences between involuntary and voluntary facial movements.”

Computers, then, can see what we miss: the tiny nuances in facial expression that mean the difference between a faked grimace and a real bout of toe-curling ouchiness. When we simulate pain, for example, we show “over-regularity” that machines can read. When we feel real pain, on the other hand, our faces change dynamically and without our control.

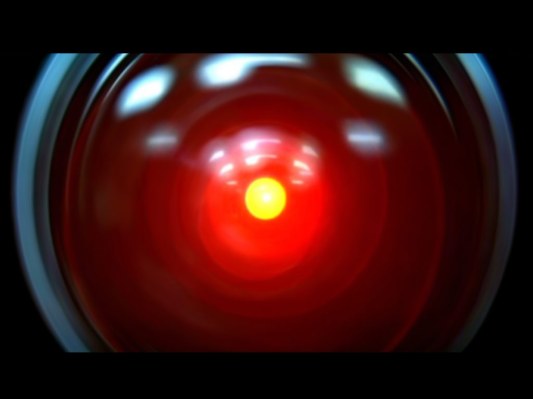

The researchers expect this technology to be helpful in assessing the moods and health of subjects by allowing machines to “see” how drivers, students, and medical patients are really feeling. Presumably it could be embedded into other systems to see if you’re happy, sad, upset, or cranky. Then, in a calm, steady voice, the computer could remind you that you are really upset about things and that you should take a stress pill and think things over.