Amazon Web Services announced AWS Data Pipeline at the re:Invent conference today. The new service is designed to help developers manage applications through a data-driven process.

With Data Pipeline, customers build workflows from pre-made templates that move data to the correct destination.

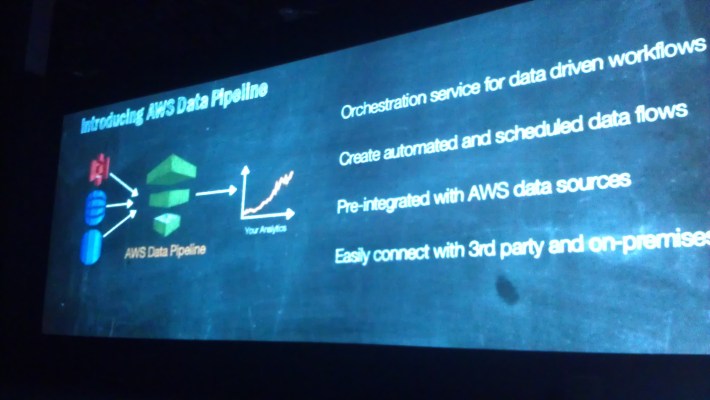

Data Pipeline includes:

- Orchestration services for data-driven workflows.

- Automated, scheduled data workflows.

- Integration of those workflows with Amazon resources.

- Integration with third-party and on-premises resources.

In a demo, Chief Data Scientist Matt Wood showed how to create a pipeline with a drag-and-drop interface, using the pre-made templates. In the interface, the user configures the source, the destination and the processing requirements, and can set a schedule to automate the process. A customer can, for example, create a daily report that is analyzed with Amazon Elastic MapReduce, using Hadoop clusters configured for pay-as-you-go analysis. Weekly reports can be created the same way. (Hat tip to Aaron Delp for his excellent live blog of today’s keynote.)

The news came during CTO Werner Vogels’ keynote, which focused on the philosophy that everything is a programmable resource. In that view there are no constraints: you can build your business anywhere in the world. He described this as a cost-aware architecture, which I will cover in a later post.

Data Pipeline fits into that strategy by providing a data-driven way to program applications, optimized for the AWS infrastructure.