I’ve seen plenty of apps get yanked from Apple’s App Store in my day, but the news of an app called iKamasutra getting that same treatment really has me scratching my head. I won’t rehash the entire story, but here’s the gist of what happened:

Apple pulled iKamasutra from the App Store in February, and after a bit of sleuthing, creator Naim Cesur of NBITE managed to contact someone in Apple's verification department. According to that Apple employee, the app was removed because of a suggestive app icon and too much detail in its images (including the use of brown hair).

Cesur and his team dutifully updated the app with a revamped icon and less detailed imagery, and resubmitted it. And then they waited.

It's worth noting that iKamasutra is hardly a stranger to this sort of thing. Shortly after Cesur first submitted it to Apple's App Store in 2008, reviewers shot it down for featuring "pornographic and obscene material," an interesting claim for an app whose sexual positions seemed to be acted out by Keith Haring characters. The app was rejected yet again around the time Apple introduced age restriction controls for apps, but was finally admitted into the store around December 2009.

Google also yanked the iKamasutra app from the Google Play Store, and the end result was only slightly less unpleasant. After the app was pulled, the team soon discovered the rationale behind the decision: according to an email from the Android Market Support team, the app violated the sexually explicit material provision of the content policy.

NBITE eventually made the decision to trick out the Android version with the same toned-down visuals, and re-release it as a separate app in the Google Play Store where it remains. Meanwhile, they’re still being shut out of Apple’s walled garden.

I had to see what all the fuss was about. With iKamasutra unavailable from the iOS App Store, I turned to two of the three remaining versions of the app still in circulation: the original version in the Windows Phone Marketplace, and the similarly updated version found in the Google Play Store. I downloaded them, fired them up, and started digging.

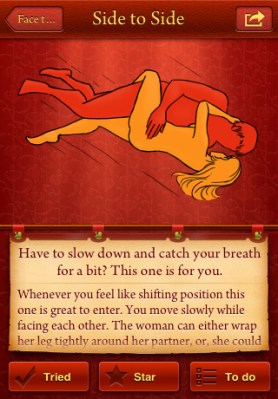

And what did I happen to find? Putting some mildly annoying music aside, not too much. Looking at the “uncut” version, I can sort of understand where Apple is coming from with regard to the offending gray lines. They’re mainly there to add a bit of anatomical detail — think faces, the curves of someone’s back, a collarbone — but Apple’s original umbrage probably stems from the fact that they also delineate the occasional breast or buttock.

But the bowdlerized version available in the Google Play Store is said to feature the same set of images as the updated version of the iOS app, and there's nothing explicit to be found here, just plenty of vague silhouettes enjoying each other's company.

Would I show them to my mother? Probably not. Would she be unduly disgusted or disturbed by seeing them? Absolutely not.

From my vantage point, NBITE has complied with everything Apple has asked of them (and more). Brown hair? Fixed. Potentially suggestive gray lines? Gone. So what exactly is Apple's problem with the app now? Well, when Apple finally responded to Cesur and the NBITE team, it was to say that there were too many Kama Sutra apps in the App Store.

The thing is, iKamasutra had been in the App Store for years and racked up something like 8 million downloads in that time (Cesur tells me it would've easily exceeded 9 million by now had it not been pulled). That there are plenty of Kama Sutra apps in the App Store isn't really in question, and there are indeed plenty of lousy ones. There's an argument to be made for good apps that happen to fall into that category, though, and from my brief experience with it, iKamasutra certainly seems to be one of them.

I suspect the real issue here is one of subjectivity. Even with age controls in place, Apple will never, ever let explicit content into the App Store, but I have to wonder about the criteria that Apple’s reviewers are using to judge cases like this one. I don’t doubt that Apple’s review crew is doing what they’re doing for the sake of protecting their users, but I don’t think enacting a sort of blanket policy is the way to go. Alternatives are surely tough to come by considering the sheer number of apps that require their attention, but a case like this raises some valid concerns about the consistency of the review process.