Imagine you’re a hunter-killer robot, hovering over the broken wasteland that used to be the world of men. You have surprised a group of biologicals in an act of petty insurrection, and they have split into two groups and begun to flee. You can only pursue and eliminate one group, but you don’t have the spare milliseconds to analyze your high-definition imagery; yet you must determine which group is greater if you want to meet your termination quota.

It’s a good thing that back in 2012, a university lab in Italy helped machines like you evolve approximate number sense!

Yes, Marco Zorzi and Ivilin Stoianov have opened the Pandora’s box of guesstimation in their shadowy “laboratorio” at the University of Padua. Or rather, it opened itself, as the ability seems to have emerged naturally from basic learning processes and not from any programmed understanding of numerosity.

Seriously, though, this is very interesting research. Zorzi and Stoianov built a virtual neural network with a basic retina-like input layer that “sees” pixels, followed by two deeper layers of nodes that sort and analyze the input from the “retina” layer. Strictly speaking, a real retina is already composed of several layers with various levels of analysis, but we’ll let that go for now, for science.

The self-revising neural network model they used (in other words, a small-scale, learning AI) was not given any lessons on numbers: it did not know the difference between 2 and 4, between integers and fractions, between higher and lower numbers, or anything like that. This was intended to mimic the early stages of development in creatures that demonstrate ANS: approximate number sense, described as the ability to determine basic numeric qualities such as greater or lesser without actually understanding the numbers themselves. Infants demonstrate it before learning basic arithmetic, and fish demonstrate it when choosing larger and therefore safer shoals to swim with, when presumably they are not counting their colleagues exactly. Many other animals show it as well, in situations you can probably imagine.

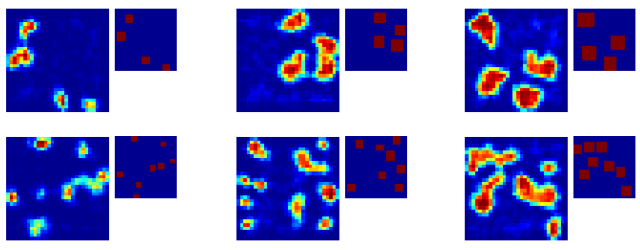

Zorzi and Stoianov exposed the neural network to a series of 51,200 images, each containing a different number of rectangles of various sizes spread around a field. The network attempted to recreate the images in its own way and to work out rules governing them. After a number of exposures, some neurons on the deepest level (i.e. the most meta-analytical) fired more or less in correlation with the number of rectangles, but not with the total surface area taken up by those rectangles, indicating that the AI was detecting numbers and not simply contrast or the like. Remember, this AI doesn’t even know what numbers are.
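To make the “number, not surface area” point concrete, here is a minimal sketch of how such test images could be built. It is not the researchers’ code: the function names, the 30×30 field size, and the one-pixel gap between rectangles are all assumptions made for illustration.

```python
import random

def make_stimulus(n_rects, rect_h, rect_w, size=30, seed=None, max_tries=10_000):
    """Return a size x size grid of 0s and 1s holding n_rects separate rectangles.

    Hypothetical helper: the field size and the one-pixel gap are assumptions.
    """
    rng = random.Random(seed)
    image = [[0] * size for _ in range(size)]
    placed = 0
    tries = 0
    while placed < n_rects:
        tries += 1
        if tries > max_tries:
            raise RuntimeError("could not place every rectangle; field too crowded")
        top = rng.randint(0, size - rect_h)
        left = rng.randint(0, size - rect_w)
        # Inspect the rectangle plus a one-pixel border so that no two
        # rectangles overlap or touch along an edge or corner.
        rows = range(max(top - 1, 0), min(top + rect_h + 1, size))
        cols = range(max(left - 1, 0), min(left + rect_w + 1, size))
        if any(image[r][c] for r in rows for c in cols):
            continue  # spot is taken, try another position
        for r in range(top, top + rect_h):
            for c in range(left, left + rect_w):
                image[r][c] = 1
        placed += 1
    return image

def total_area(image):
    """Count the lit pixels, i.e. the surface area covered by rectangles."""
    return sum(map(sum, image))

# Same lit area, different number of objects.
few_large = make_stimulus(4, 4, 4, seed=0)    # 4 rectangles of 4x4 pixels
many_small = make_stimulus(16, 2, 2, seed=0)  # 16 rectangles of 2x2 pixels
print(total_area(few_large), total_area(many_small))
```

Both images light up the same number of pixels, so a neuron that responds differently to them is tracking how many objects there are rather than how much of the field they cover.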

They further solidified their findings by having the network judge whether a given image had more or fewer objects than a given reference number. It had indeed developed a rudimentary approximate number sense. Interestingly, there are actual neurons in the parietal cortex that exhibit this same behavior.
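The more-or-fewer judgment can be illustrated with a toy simulation. To be clear, this is not the network from the study: it simply assumes that an approximate estimate behaves like the true count blurred by noise on a logarithmic scale, a common textbook way of modeling this kind of guesstimation. The function names and the noise level of 0.15 are hypothetical.

```python
import math
import random

def noisy_estimate(true_count, noise, rng):
    """Blur the true count with Gaussian noise on a log scale (assumed model)."""
    return math.exp(rng.gauss(math.log(true_count), noise))

def proportion_more(true_count, reference, noise=0.15, trials=2000, seed=0):
    """Fraction of trials in which the blurred estimate exceeds the reference."""
    rng = random.Random(seed)
    hits = sum(
        noisy_estimate(true_count, noise, rng) > reference
        for _ in range(trials)
    )
    return hits / trials

# Compare several object counts against a reference of 16.
for count in (8, 14, 16, 18, 32):
    print(count, proportion_more(count, 16))
```

Counts far from the reference are judged correctly almost every time, while counts close to it come out nearer to a coin flip, which is what “approximate” means here.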

This type of research typifies the next phase in the real-world/computer interface: natural learning and fuzzy logic. The ability to take what has been detected and turn that into a system of rules, much like the way our own minds are formed, is going to be increasingly important. There might be little things: your Kinect hears the front door open between this and that hour, and automatically turns on the TV and queues up the show you always watch on that day of the week. Or a security camera learns the faces it needs to pay attention to, or a helper robot learns when to follow its master and when not to, based on cues the creators might not have foreseen, or anything else you can think of.

Basing our devices on ourselves is one of the ways to make them easy to relate to, though it’s not a guarantee that they will be useful. There’s no use training up a car-painting robot from babyhood, but it would make sense for more domestic devices. Having our devices think and act like us is a natural path, though, and while at the moment our resources seem to limit us to simulating functions we as humans (and fish) develop in the cradle, that doesn’t mean more sophisticated abilities can’t or won’t be developed. Indeed, it is easy to underestimate the sophistication of the most basic functions we as active, conscious beings exhibit every day without even noticing.

The paper, “Emergence of a ‘visual number sense’ in hierarchical generative models,” was published earlier this month in Nature Neuroscience.