Coming up with new gestures that can be performed with one, two, or three fingers is a surprisingly difficult job. Many are proposed; few actually make it into daily use. Here’s one from Apple, called “hold and swipe,” described in a recent-ish patent application. But is it really new?

I’m leaving out the part where patenting a gesture is an absurdity, because that’s an entirely separate issue.

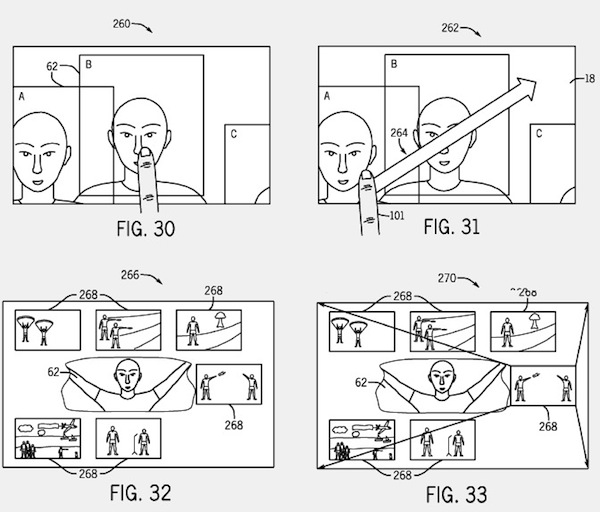

The gesture has to do with navigating visual information on a small screen (like the new Nano). Zooming in on a face or object within a picture, or selecting an aspect of it, can be difficult both for the user, who can’t see where they’re touching, and for the touchscreen, which can’t accurately determine where the user intends to touch. The hold and swipe method would rely heavily on image metadata, such as face-recognition data, and would let users move between points of interest without repeating a single inexact gesture multiple times.

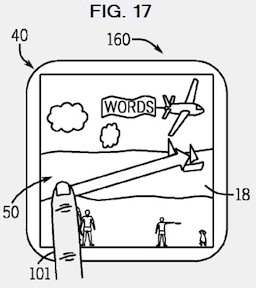

The idea is that you’d touch down on the display, on or off an object of interest, and after a short delay, move your finger in any direction. What happens next depends on context: if you put your finger on someone’s face, then move off to the right, it could either go to other people’s faces in the picture, or switch to other pictures with the same face. The rate at which you move through those faces or pictures could be modified by how far your finger is from the original point, or by tilting the device itself.
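To make the mechanics concrete, here is a minimal Python sketch of how a recognizer for this kind of gesture might work. It is an illustration only, not anything taken from the patent application: the `HoldAndSwipe` class, the half-second hold delay, the 10-pixel dead zone, and the linear rate formula are all assumptions, and tilt input and the choice of contextual action are left out.

```python
import math
from dataclasses import dataclass

HOLD_DELAY_S = 0.5    # assumed: how long the finger must rest before a swipe counts
DEAD_ZONE_PX = 10.0   # assumed: movement smaller than this is treated as jitter


@dataclass
class HoldAndSwipe:
    """Hypothetical recognizer: anchor on touch-down, then read direction and rate."""
    anchor: tuple       # (x, y) where the finger first touched down
    down_time: float    # timestamp of the touch-down, in seconds

    def update(self, pos, now):
        """Return None while nothing should happen, else (direction, rate)."""
        if now - self.down_time < HOLD_DELAY_S:
            return None  # still in the "hold" phase
        dx = pos[0] - self.anchor[0]
        dy = pos[1] - self.anchor[1]
        dist = math.hypot(dx, dy)
        if dist < DEAD_ZONE_PX:
            return None  # finger has not really left the anchor
        # Pick the dominant axis; screen y is assumed to grow downward.
        if abs(dx) >= abs(dy):
            direction = "right" if dx > 0 else "left"
        else:
            direction = "down" if dy > 0 else "up"
        # Assumed mapping: the farther from the anchor, the faster the stepping.
        rate = dist / DEAD_ZONE_PX
        return direction, rate
```

A caller would create one `HoldAndSwipe` on touch-down, feed it finger positions as they arrive, and step to the next face or photo in the returned direction at the returned rate. Even this toy version needs two tuning constants and a rate curve, which hints at how much there is to get right on a tiny screen.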

Unfortunately, as I said, coming up with new gestures is quite difficult, and this one is likely to end up totally unused. For one thing, it doesn’t solve the problem it sets out to solve: not only is the user’s finger still obscuring much of the display, but they can’t remove it while navigating the content. It’s also overcomplicated: tilting the display while holding your finger down at a certain distance from the original point? It’s all most people can do to master two-finger scrolling. And the UI elements are also nontrivial additions. How do you tell the user it’s time to move their finger? How do you indicate, on this small screen of theirs, which face or element of the picture they’ve landed on?

It’s also not original. The idea of placing an “anchor” by holding a finger or stylus down, and then scrolling in the direction indicated by the next movement, is quite old. Apple’s addition of moving between points of interest or photos with the same face or tag is just a veneer on a gesture that’s been around in games and apps for years. If this patent were legitimate, you could also patent using this gesture to move between cells in a spreadsheet, or brush sizes in Photoshop, or tabs in a browser.

It just goes to show the amount of chaff that’s produced while you’re trying to get some wheat. Most of these ideas die early on in focus groups (we produced and killed a dozen in a couple of hours at a recent Microsoft event), but this one, perhaps, they felt was borderline. I wouldn’t expect Apple to include something this inelegant in devices like the Nano. Limiting the user’s UI vocabulary has been a big part of Apple’s success, and part of that has been binning ideas like this one as quickly as possible.

Patently Apple has more information, but for some reason I couldn’t bring up the patent itself. I’ll update with a link as soon as I get it.