In an experiment that reveals as much about the people on Facebook as it does about Facebook itself, researchers from the University of British Columbia in Vancouver infiltrated the social network with bots and made off with information from thousands of users.

Around 250GB of data was stolen during the study, including personal and marketable information, and around three thousand users were targeted. Only one in five of the bot profiles was flagged by the Facebook Immune System, which clearly needs a boost.

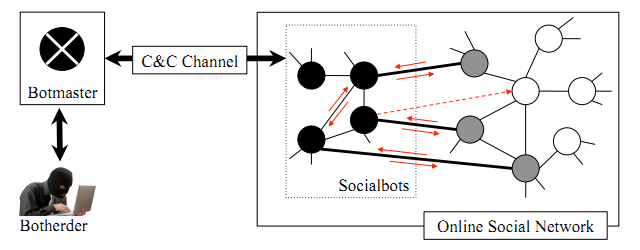

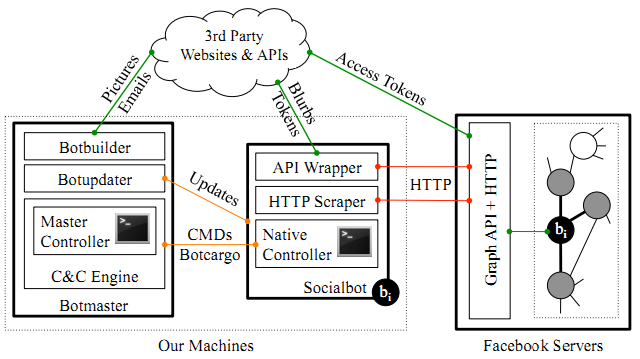

The fake UBC accounts, which the researchers call “socialbots,” were created with a few simple scripts that submitted the requisite account information. Names, pictures, and status updates were trawled from the open web, eventually producing 102 fairly believable accounts. The intelligence organizing this effort is referred to evocatively as the “botherder” or “adversary.”

Next, the bots sent friend requests to random users. The researchers had found earlier that, unsurprisingly, more attractive people get better responses to unsolicited friend requests, so they pulled the bots’ profile pictures from the high end of Hot Or Not. Only 20% of this initial random sample took the bait, but later, when the bots sent requests to friends of their existing friends, the success rate jumped to 60%. That’s called the “triadic closure principle,” if you were wondering: two people who share a mutual friend are more likely to become friends themselves. Each bot made around 20 friends on average, but some nabbed as many as 80 or 90.

The bots evaded Facebook’s fraud detection by rate-limiting their posts and friend requests so as not to trigger CAPTCHAs. Facebook detected only 20 of the profiles, and, interestingly, all of them were female bots.

Considering how easy it was to automate account creation and propagation, one has to question the effectiveness of Facebook as an authentication system. Naturally it can be used to establish identity up to a point, but it’s certainly no Turing Test. Our own comment section shows that bots and generated accounts are plentiful. Hopefully this experiment will spur Facebook to improve this aspect of its security, as the researchers intended. Maybe it will also convince some people to be a bit more selective about whom they friend.

And in case you’re wondering whether you might have inadvertently contributed to the experiment and, thus, the sum of human knowledge, rest easy:

> We carefully designed our experiment in order to reduce any potential risk at the user side by following known practices, and got the approval of our university’s behavioral research ethics board. We strongly encrypted and properly anonymized all collected data, which we have completely deleted after we finished our planned data analysis.

You can download the full report here. It makes for interesting reading, though for hackers and botnet enthusiasts, it probably contains little in the way of new information.