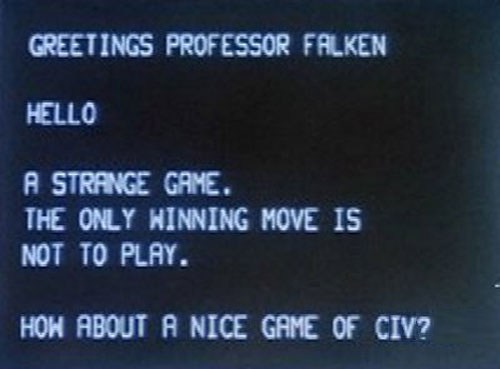

So we’re teaching the computers how to advance their own interests via global warfare, now. Nice knowing you, fellow humans.

Yes, researchers in MIT’s Computer Science and Artificial Intelligence Lab (CSAIL) decided it would be interesting to see how a computer with only a basic understanding of the English language would internalize “loose” knowledge. To test this, they had a few very basic AIs (as in, they just move the cursor around and click UI elements randomly) play Civilization against each other.

At first the AIs just interacted randomly. But then the researchers gave one AI access to the user’s manual. It used its ability to read text from the screen to correlate actions with words, and words with items from the manual. Pretty soon it was winning 79% of its games, based entirely on its own natural learning processes.

It’s far from the first strategy-game AI. In fact, one StarCraft AI competition has yielded some seriously sophisticated results, but those AIs were tailored to the game itself. The “naive” AIs in the MIT experiment could only build up a knowledge base from scratch.

The deeper purpose of the experiment was to demonstrate that AIs could determine the meanings of words by interacting with their environment. Today Civilization, tomorrow a factory or hospital, where a drone of some sort might learn new words and actions just from hearing them and seeing them done.

[via io9]