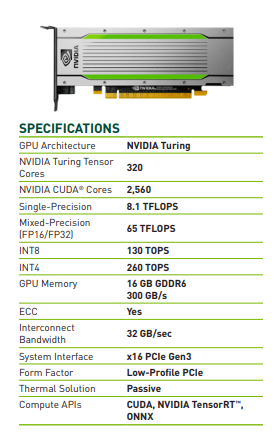

In the coming weeks, AWS is launching new G4 instances with support for Nvidia’s T4 Tensor Core GPUs, the company today announced at Nvidia’s GTC conference. The T4, which is based on Nvidia’s Turing architecture, was specifically optimized for running AI models. The T4 will be supported by the EC2 compute service and the Amazon Elastic Container Service for Kubernetes.

“NVIDIA and AWS have worked together for a long time to help customers run compute-intensive AI workloads in the cloud and create incredible new AI solutions,” said Matt Garman, vice president of Compute Services at AWS, in today’s announcement. “With our new T4-based G4 instances, we’re making it even easier and more cost-effective for customers to accelerate their machine learning inference and graphics-intensive applications.”

The T4 is also the first GPU on AWS that supports Nvidia’s raytracing technology. That’s not what Nvidia is focusing on with this announcement, but creative pros can use these GPUs to take the company’s real-time raytracing technology for a spin.

For the most part, though, it seems like Nvidia and AWS expect that developers will use the T4 to put AI models into production. It’s worth noting that the T4 hasn’t been optimized for training these models, but it can be used for that as well. Indeed, with the new CUDA-X AI libraries (also announced today), Nvidia now offers an end-to-end platform for developers who want to use its GPUs for deep learning, machine learning and data analytics.

Google, for its part, launched T4 support a few months ago. On Google’s cloud, these GPUs are currently still in beta.