Cloud services are designed to take away a lot of the complexity associated with managing a particular process, whether that’s software or infrastructure. Today, machine learning is quickly gaining traction with developers, and AWS wants to help remove some of the obstacles associated with building and deploying machine learning models.

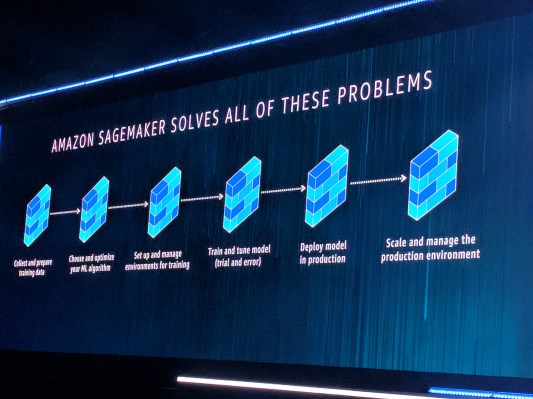

To that end, the company announced Amazon SageMaker, a new service that provides a framework for developers and data scientists to manage the process of building machine learning models while removing some of the heavy lifting that is typically involved.

Randall Hunt wrote in a blog post announcing the new service that the idea is to provide a framework for accelerating the process of getting machine learning incorporated into new applications. “Amazon SageMaker is a fully managed end-to-end machine learning service that enables data scientists, developers, and machine learning experts to quickly build, train and host machine learning models at scale,” Hunt wrote.

As AWS CEO Andy Jassy put it while introducing the new service on stage at re:Invent, “Amazon SageMaker, an easy way to train, deploy machine learning models for everyday developers.”

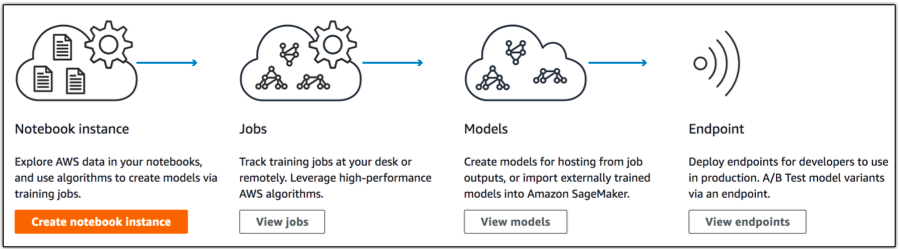

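The new tool involves three main pieces: a notebook for exploring your data, a job for training the model, and hosting for the finished model.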

It starts with a Notebook, which uses standard Jupyter notebooks for reviewing the data that will be the basis for your model. You can run this first step on standard instances or select GPUs for more processor-intensive requirements.

Once you have your data ready, you can begin a job to train the model. The job includes the base algorithm for your model: you can bring your own, for example one built with the popular TensorFlow framework, or use one of the algorithms AWS has pre-configured for you.
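To make the training step more concrete, here is a minimal sketch of how a job like this might be described with the AWS SDK for Python (boto3). It is not taken from the announcement: the job name, container image, IAM role, S3 locations and instance types are placeholder assumptions, and the helper function names are hypothetical. The helper only assembles the request, so it can be inspected without an AWS account.

```python
from typing import Any, Dict


def build_training_job_request(
    job_name: str,
    training_image: str,
    role_arn: str,
    train_s3_uri: str,
    output_s3_uri: str,
    instance_type: str = "ml.m4.xlarge",
    instance_count: int = 1,
) -> Dict[str, Any]:
    """Assemble keyword arguments for boto3's SageMaker create_training_job call.

    Every value passed in is a caller-supplied placeholder; nothing is sent to AWS here.
    """
    return {
        "TrainingJobName": job_name,
        # The algorithm is packaged as a container image: either one AWS
        # provides or one you bring yourself.
        "AlgorithmSpecification": {
            "TrainingImage": training_image,
            "TrainingInputMode": "File",
        },
        "RoleArn": role_arn,
        # Where the prepared training data lives in S3.
        "InputDataConfig": [
            {
                "ChannelName": "train",
                "DataSource": {
                    "S3DataSource": {
                        "S3DataType": "S3Prefix",
                        "S3Uri": train_s3_uri,
                        "S3DataDistributionType": "FullyReplicated",
                    }
                },
            }
        ],
        # Where the trained model artifacts are written.
        "OutputDataConfig": {"S3OutputPath": output_s3_uri},
        # Standard or GPU instances, chosen by instance type.
        "ResourceConfig": {
            "InstanceType": instance_type,
            "InstanceCount": instance_count,
            "VolumeSizeInGB": 10,
        },
        "StoppingCondition": {"MaxRuntimeInSeconds": 3600},
    }


def submit_training_job(request: Dict[str, Any]) -> Dict[str, Any]:
    """Send the request to SageMaker. Requires boto3 and valid AWS credentials."""
    import boto3  # imported lazily so the builder above works without boto3

    client = boto3.client("sagemaker")
    return client.create_training_job(**request)


# Example with placeholder values only (hypothetical names, no network call).
example_request = build_training_job_request(
    job_name="example-training-job",
    training_image="<account>.dkr.ecr.<region>.amazonaws.com/<algorithm>:latest",
    role_arn="arn:aws:iam::<account>:role/<sagemaker-role>",
    train_s3_uri="s3://<bucket>/train/",
    output_s3_uri="s3://<bucket>/output/",
    instance_type="ml.p2.xlarge",  # a GPU instance type, as an assumption
)
```

Passing `example_request` to `submit_training_job` would start the job once the placeholders are replaced with real values; swapping `instance_type` is how the standard-versus-GPU choice would show up in this sketch.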

In his presentation, Jassy emphasized SageMaker’s flexibility. It provides you with out-of-the-box tools or lets you bring your own. In either case, the service has been tuned to deal with most popular algorithms, regardless of the source.

Holger Mueller, VP and principal analyst at Constellation Research, says this flexibility could be a double-edged sword. “SageMaker reduces that work/education/effort significantly and will help to build these apps. But it also means that AWS is supporting the ‘polyglot’ world of many models — and really wants to keep its users and the compute/data load.”

He believes a bigger story would be if AWS had announced its own neural network framework like TensorFlow, but there is nothing on that front yet.

Regardless, Amazon handles all of the underlying infrastructure required to run the model, including dealing with node failures, auto scaling and security patching.

Once you have your model, Jassy said you could run it from SageMaker or use it on another service, as you wish. As he put it, “This is a big deal for data scientists and developers.”

AWS is making this service available for free starting today as part of its free tier of services, but once you exceed certain levels, pricing will be based on usage and region.