Watch out, global supercomputer Top 5, there’s a new contender. Or at least there will be soon, once the $30 million Stampede 2 is up and running. With a peak processing capacity of 18 petaflops, the new system will stand shoulder to shoulder with Cray’s Titan and IBM’s Sequoia, though it will still trail China’s Tianhe-2 by a good margin.

The idea behind the original Stampede, as with its successor, was to create a world-class supercomputing platform that any researcher with a problem requiring intense number crunching could access. Think atomic and atmospheric science simulations, for instance, which would take years to grind through on a desktop but can be turned around in days on a supercomputer.

Just imagine accounting for all the movements and interactions of the 750,000 particle analogs in this simulation of a colloidal gel!

![Zia colloidal gel simulation](https://techcrunch.com/wp-content/uploads/2016/06/zia_colloidal_gel_panel21.jpg)

Or tracking the entropy of every pseudoparticle (?) in this 2,000-cubic-kilometer general relativistic magnetohydrodynamic rendering of a supernova progenitor!

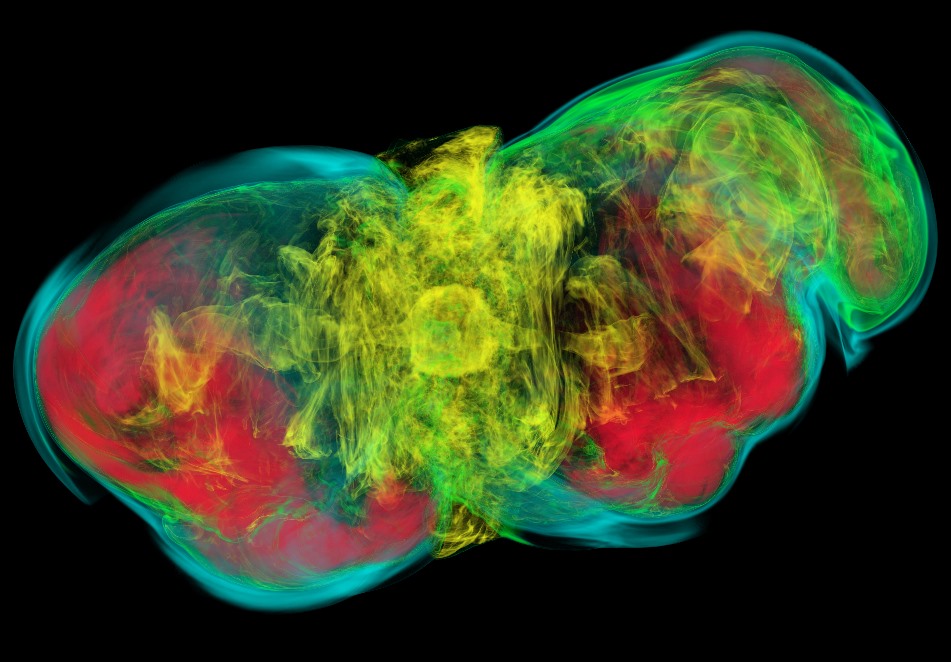

Or tracking the entropy of every pseudoparticle (?) in this 2,000 cubic-kilometer general relativistic magnetohydrodynamic rendering of a supernova progenitor!

“The kind of large-scale computing and data capabilities systems like Stampede and Stampede 2 provide are crucial for innovation in almost every area of research and development,” said Texas Advanced Computing Center director Dan Stanzione in a UT press release. “Stampede has been used for everything from determining earthquake risks to help set building codes for homes and commercial buildings, to computing the largest mathematical proof ever constructed.”

Stampede 2 will offer about twice the power of the original Stampede, which was activated in March 2013. Both systems are funded by National Science Foundation grants and built at the University of Texas at Austin.

They didn’t just double the core count for the sequel, though. The 22nm fabrication tech behind the original is being retired in favor of 14nm Xeon Phi chips codenamed “Knights Landing” (plus some “future-generation” processors), each packing up to 72 cores versus 61 per chip in the first system.

RAM, storage and data bandwidth are also being doubled; after all, you can’t crunch the data if you can’t move it. Stampede 2’s interconnect can shift up to 100 gigabits per second, and the Knights Landing chips’ on-package high-bandwidth memory is fast enough to work as an enormous cache in front of the system’s DDR4 RAM as well as fulfill ordinary memory roles.

It will also employ 3D XPoint non-volatile memory, which is claimed to be faster than NAND flash but cheaper than DRAM, a sort of holy grail for high-performance storage. Stampede 2 will be the first serious deployment of the stuff, so let’s hope that means it will soon trickle down to us desktop users.

But enough spec porn, even though it’s all for a good cause. After all, scientists around the country are voracious consumers of this type of resource. Over the last decade, the UT press release points out, the number of participating institutions has doubled, principal investigators have tripled and active users have quintupled. And why should that growth slow down, as we find ever more ways to apply deep data analysis both to investigating the natural world and to creating new tools and services?

There’s no indication of when Stampede 2 will power up, but given that the funding was only just lined up, it will be at least a year. In the meantime, of course, that Top 5 supercomputer list could get even more crowded.